Why Instruction Sets No Longer Matter

About the Author

I'm a student in the History of Computing class at San Jose State University (http://www.cs.sjsu.edu/~mak/CS185C/). This is a work in progress that will turn into a final article by the end of the semester. I welcome your comments and advice!

Summary

Background: Microcode

Most people today are probably more familiar with "firmware" than with "microcode." When Ascher Opler coined the term "firmware" in 1967, the two words referred to much the same thing: the contents of the writable control store that defined the instruction set of a given computer[1]. Later, the definition of "firmware" expanded to its current understanding of any microcode that exists anywhere on the chip, for any purpose. "Microcode" kept its original scope but, perhaps because it was too technical, remained largely restricted to the ivory towers of computer engineering and thus outside the public consciousness.

Indeed, the over-specialized and semi-archaic term "microcode" can cause confusion even in modern software engineering and computer science circles. At a recent talk at San Jose State, RISC pioneer Dr. Robert Garner spoke at great length about microcode. At the end of his presentation, during the Q&A session, a student asked quite bluntly, "I'm still confused...what is microcode?" In fact, most of the Q&A session revolved around the need to clarify "microcode." I have no doubt that if Dr. Garner had simply substituted one word for the other, the CS and SE students in attendance would have had little trouble understanding the role of "firmware" in today's computers.

In any event, for clarity and accessibility, and having witnessed the result otherwise, this article will use the term "firmware" but restrict its meaning to the one originally defined by Opler, i.e., "microcode."

Development of Firmware

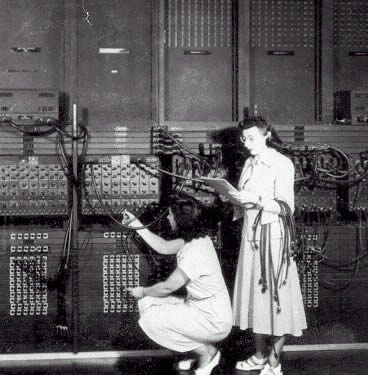

Prior to the advent of firmware, the idea of an instruction set had little meaning. The operation of a given machine's CPU was defined solely by the physical layout of its wiring. Because these early machines had no stored programs, reprogramming a machine for a different task was a time-consuming operation for early computer programmers. Later, the first stored-program machines were developed, but in these early cases the control units of the CPUs were still "hard-wired."

In 1951, Maurice Wilkes of Cambridge University proposed what he called "microprogramming." His idea was fundamentally simple: the CPU could be designed more generally, and its operations could be defined in a program store in the same way that executable "program" code was. This was an intriguing idea for a variety of reasons, the most important of which was purely economic: such a scenario would significantly reduce development costs.
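To make the idea concrete, here is a minimal sketch in Python of a microprogrammed control unit. Nothing in it comes from Wilkes's design or from any real CPU: the micro-operations, the `CONTROL_STORE` table, and the `LOAD`/`ADD`/`STORE`/`DOUBLE` instructions are all hypothetical names chosen for illustration. The point it tries to show is only that the "hardware" (the micro-operations) stays fixed while the instruction set lives in a table that can be rewritten.

```python
# Toy model of a microprogrammed CPU. All names are illustrative assumptions,
# not taken from any real machine.

def make_datapath():
    """Return the machine state: an accumulator, a temp register, and memory."""
    return {"acc": 0, "tmp": 0, "mem": [0] * 8}

# Micro-operations: the fixed, general-purpose primitives of the "hardware".
def load_tmp(state, addr):
    state["tmp"] = state["mem"][addr]

def tmp_to_acc(state, addr):
    state["acc"] = state["tmp"]

def add_tmp(state, addr):
    state["acc"] += state["tmp"]

def store_acc(state, addr):
    state["mem"][addr] = state["acc"]

# Control store: each machine instruction is a microprogram,
# i.e. an ordered list of micro-operations.
CONTROL_STORE = {
    "LOAD": [load_tmp, tmp_to_acc],
    "ADD": [load_tmp, add_tmp],
    "STORE": [store_acc],
}

def run(program, state, control_store=CONTROL_STORE):
    """Execute (opcode, address) pairs by looking each opcode up in the control store."""
    for opcode, addr in program:
        for micro_op in control_store[opcode]:
            micro_op(state, addr)
    return state

# Compute mem[2] = mem[0] + mem[1] using the original instruction set.
state = make_datapath()
state["mem"][0] = 2
state["mem"][1] = 3
run([("LOAD", 0), ("ADD", 1), ("STORE", 2)], state)

# "Re-microcoding": add a new instruction by editing the table only.
# No micro-operation (no "wiring") changes.
EXTENDED_STORE = dict(CONTROL_STORE, DOUBLE=[load_tmp, tmp_to_acc, add_tmp])
state2 = make_datapath()
state2["mem"][1] = 3
run([("DOUBLE", 1)], state2, EXTENDED_STORE)
```

After the first run, `state["mem"][2]` holds the sum of the first two memory cells; after the second, `state2["acc"]` holds twice the value at address 1. In a hard-wired control unit, adding `DOUBLE` would mean changing the circuitry, whereas here it is a one-line change to the table, which is the economic argument in miniature.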

1. Opler, Ascher (January 1967). "Fourth-Generation Software". Datamation 13 (1): 22–24.