Why Instruction Sets No Longer Matter: Difference between revisions

No edit summary |

|||

| (84 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

==About the Author== | ==About the Author== | ||

My name is Steve Terpe. I'm majoring in Computer Science and will be graduating this fall. Currently I'm taking the History of Computing class at San Jose State University (http://www.cs.sjsu.edu/~mak/CS185C/). | My name is Steve Terpe. I'm majoring in Computer Science and will be graduating this fall. Currently I'm taking the History of Computing class at San Jose State University (http://www.cs.sjsu.edu/~mak/CS185C/). | ||

I welcome your comments and advice and job offers! | I welcome your comments and advice and job offers! | ||

| Line 7: | Line 7: | ||

==Summary== | ==Summary== | ||

This article represents the culmination of a semester's worth of research and discussion with Robert Garner, Peter Capek, and Paul McJones, on the varied topics of von Neumann and non-von Neumann machines, alternative architectures, Turing complete vs. non Turing complete languages, CISC, RISC, PowerPC, and the Cell Processor. | This article represents the culmination of a semester's worth of research and discussion with Robert Garner, Peter Capek, and Paul McJones, on the varied topics of [[John von Neumann|von Neumann]] and non-von Neumann machines, alternative architectures, [[Alan Turing|Turing]] complete vs. non Turing complete languages, CISC, RISC, PowerPC, and the Cell Processor. | ||

Like many historians, I had only a vague idea starting out what this project would finally be about though I knew it should be about alternative architectures in some manner or fashion. As the debate between von Neumann and non-von Neumann is so largely one-sided it can hardly be called a debate at all, I decided to focus on the issue of RISC vs. CISC architectures. | Like many would-be historians, I had only a vague idea starting out what this project would finally be about though I knew it should be about alternative architectures in some manner or fashion. As the debate between von Neumann and non-von Neumann architectures is so largely one-sided it can hardly be called a debate at all, I decided to focus on the issue of RISC vs. CISC architectures. | ||

What I found out in the course of my research, was that now | What I found out in the course of my research, was that now this debate too is largely a non-issue, though it was quite important for a brief period of time. That is great news for computer architecture but not so great news for a would-be historian/researcher of the subject. | ||

In the end, I have decided to explain how we arrived where we are: a place where instruction sets no longer matter. | In the end, I have decided to explain how we arrived where we are: a place where instruction sets no longer matter. | ||

| Line 19: | Line 19: | ||

This article is largely based on interviews I conducted with both Robert Garner and Peter Capek. | This article is largely based on interviews I conducted with both Robert Garner and Peter Capek. | ||

<b>Robert Garner</b> | <b>Robert Garner</b> developed both the Xerox Star and the Sun SPARC 4/200 in his years at Xerox and Sun. He was also involved in many more SPARC projects at Sun. He is currently at IBM's Alameda facility and codeveloped the IceCube brick-based 3d scalable server. He manages the group responsible for the RAID stack for GPFS. He is also currently leading the team that has restored two IBM 1401s for the Computer History Museum. | ||

He was gracious enough to spend several hours with me discussing his thoughts on years of developing both CISC and RISC systems and gave me a tour of IBM's research facility. | He was gracious enough to spend several hours with me discussing his thoughts on years of developing both CISC and RISC systems and gave me a tour of IBM's research facility. | ||

| Line 34: | Line 34: | ||

<b>Paul McJones</b> to whom the final form of this article and project owes a great deal. | <b>Paul McJones</b> to whom the final form of this article and project owes a great deal. | ||

Back in the day, Paul worked with John | Back in the day, Paul worked with John Backus on alternative architectures and languages. He did a lot of other cool stuff as well before finally making his way to Adobe many years later, where he worked on the PixelBender programming language used in Photoshop & After Effects. He also co-authored <i>Elements of Programming</i>. Get it [http://www.elementsofprogramming.com/ here]! | ||

He is currently retired but can sometimes be found at the Computer History Museum. Paul graciously took the time to spend several hours talking to me about his work with John Backus, non-von Neumann machines, why they were essentially a non-issue, and why my project would be better served by finding some alternative topic. It was great advice. | |||

===A note:=== | ===A note:=== | ||

| Line 47: | Line 49: | ||

=== Background: Microcode === | === Background: Microcode === | ||

Most people today are probably more familiar with "firmware" than "microcode." Originally, when Ascher Opler coined the term in 1967, both referred to pretty much the same thing--the contents of the writable control store that defined the instruction set of a given computer | Most people today are probably more familiar with "firmware" than "microcode." Originally, when Ascher Opler coined the term in 1967, both referred to pretty much the same thing--the contents of the writable control store that defined the instruction set of a given computer<ref name="refnum1">Opler, Ascher (January 1967). "Fourth-Generation Software". Datamation 13 (1): 22–24. </ref>. Later, the definition of "firmware" would be expanded to its current understanding of any microcode that exists, anywhere on the chip, for any purpose, while "microcode" would retain its original scope but, perhaps being too technical, would remain largely restricted to the ivory towers of computer engineering and thus outside of the public consciousness. | ||

Indeed, the use of the term "microcode" can apparently cause confusion even in modern software engineering and computer science circles--at a recent talk given at San Jose State University, SPARC designer and RISC pioneer Dr. Robert Garner spoke at great length about microcode. At the end of his presentation, during the Q&A session, a student asked quite apologetically, "I'm still confused...what is microcode?" <ref name="refnum2">Garner, Robert (December 2011). Lecture given at San Jose State University.</ref> | |||

In fact, the entire Q&A session mostly revolved around the need for clarification about "microcode." I think there would have been less confusion among the CS & SE students in attendance if the term "firmware" had been used instead. Most are undoubtedly more familiar with the existence, if not the role, of "firmware" in today's computers. | |||

In any event, for clarity and accessibility, and having witnessed the result otherwise, this article will use the term "firmware", but restrict its meaning to that originally defined by Opler--i.e., to mean "microcode." | In any event, for clarity and accessibility, and having witnessed the result otherwise, this article will use the term "firmware", but restrict its meaning to that originally defined by Opler--i.e., to mean "microcode." | ||

| Line 61: | Line 65: | ||

In 1951 Maurice Wilkes of Cambridge University proposed what he called "microprogramming." His idea was fundamentally simple--that the CPU could be more generally designed and that its control unit could be defined in a program store in the same way as executable "program" code was. This microcode would define the instruction set that would be available to the programmer of a particular CPU. Microcode could be added or removed as necessary. Microcode would allow the instruction set of a computer to be altered or edited without a prerequisite change in hardware, wiring, or soldering. This was an intriguing idea for a variety of reasons, the most important of which was purely economic: such a scenario would significantly reduce development costs and give programmers and computer designers much greater flexibility in design. Unfortunately, this was to remain, outside of a few academic attempts, largely theoretical for at least the next decade. | In 1951 Maurice Wilkes of Cambridge University proposed what he called "microprogramming." His idea was fundamentally simple--that the CPU could be more generally designed and that its control unit could be defined in a program store in the same way as executable "program" code was. This microcode would define the instruction set that would be available to the programmer of a particular CPU. Microcode could be added or removed as necessary. Microcode would allow the instruction set of a computer to be altered or edited without a prerequisite change in hardware, wiring, or soldering. This was an intriguing idea for a variety of reasons, the most important of which was purely economic: such a scenario would significantly reduce development costs and give programmers and computer designers much greater flexibility in design. Unfortunately, this was to remain, outside of a few academic attempts, largely theoretical for at least the next decade. | ||
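To make Wilkes's idea a little more concrete, here is a minimal sketch in Python of a toy accumulator machine whose instruction set lives in a table (the "control store") rather than in the interpreter loop. Everything here is an assumption made for illustration: the micro-operation names, the <code>CONTROL_STORE</code> table, and the machine itself are hypothetical and do not model any real microcoded CPU.

```python
# Hypothetical micro-operations: small steps the toy datapath can perform.
def fetch_operand(state, operand):
    state["latch"] = state["memory"][operand]

def load_acc(state, operand):
    state["acc"] = state["latch"]

def add_to_acc(state, operand):
    state["acc"] += state["latch"]

def sub_from_acc(state, operand):
    state["acc"] -= state["latch"]

def store_acc(state, operand):
    state["memory"][operand] = state["acc"]

# The "control store": each instruction is only a sequence of micro-operations.
CONTROL_STORE = {
    "LOAD": [fetch_operand, load_acc],
    "ADD": [fetch_operand, add_to_acc],
    "STORE": [store_acc],
}

def run(program, control_store, memory):
    """Execute a program by looking up each opcode's micro-operations."""
    state = {"acc": 0, "latch": 0, "memory": dict(memory)}
    for opcode, operand in program:
        for micro_op in control_store[opcode]:
            micro_op(state, operand)
    return state

# A "firmware upgrade": a new instruction is added by editing the table only.
UPGRADED_STORE = dict(CONTROL_STORE, SUB=[fetch_operand, sub_from_acc])

program = [("LOAD", 0), ("ADD", 1), ("SUB", 2), ("STORE", 3)]
result = run(program, UPGRADED_STORE, {0: 10, 1: 5, 2: 3, 3: 0})
print(result["memory"][3])
```

The point of the sketch is that <code>run</code> (standing in for the hardware) never changes; only the table does. The same program fails on the original table because it has no <code>SUB</code> entry.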

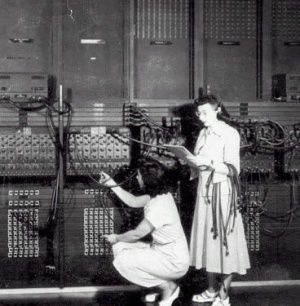

[[Image:ibm_system360.jpg|thumb|right|300px|An IBM System/360 installation circa 1964.<br> The IBM System/360 instruction set was micro-coded firmware (Archival Image, courtesy of IBM).]] | [[Image:ibm_system360.jpg|thumb|right|300px|An IBM System/360 installation circa 1964.<br> The IBM System/360 instruction set was micro-coded firmware (Archival Image, courtesy of IBM).]] | ||

Robert Garner cites IBM's System/360 family of computers as essentially the first | Robert Garner cites IBM's System/360 family of computers as essentially the first successful attempt to commercially develop a CPU with control units defined in firmware.<ref name="refnum2" /> This decision allowed IBM to maintain a great deal of compatibility between the individual models of the System/360 series despite the huge difference in performance and pricing between high-end and low-end system packages. Commercially this was more or less a rout for IBM as customers would tend to migrate from one pricing package to the next rather than purchase a competitor's system when their computing needs changed. This was in order to take advantage of System/360 cross-compatibility--all the programs one had written on 360/30 could run on the 40, the 62, the 65 and so on. | ||

By 1977 when IBM stopped selling the System/360 there had been a total of 14 different models at various price points. Obviously, the outrageous success of the System/360 was in large part due to the cross-compatibility provided by the System/360 firmware as well as the fact that both field repairs and field upgrades on these machines could often be as simple as switching out the control stores--in effect the first of many happy "firmware upgrades." By the end of the 1960's firmware was firmly established as the direction of computer architecture. | By 1977 when IBM stopped selling the System/360 there had been a total of 14 different models at various price points. Obviously, the outrageous success of the System/360 was in large part due to the cross-compatibility provided by the System/360 firmware as well as the fact that both field repairs and field upgrades on these machines could often be as simple as switching out the control stores--in effect the first of many happy "firmware upgrades." By the end of the 1960's firmware was firmly established as the direction of computer architecture. | ||

| Line 71: | Line 75: | ||

In 1975, while working on the IBM 801 and looking at the problem of how to make IBM's firmware less painful from a performance point of view, John Cocke proposed what would essentially become the "Reduced Instruction Set Computer" or "RISC" architecture. | In 1975, while working on the IBM 801 and looking at the problem of how to make IBM's firmware less painful from a performance point of view, John Cocke proposed what would essentially become the "Reduced Instruction Set Computer" or "RISC" architecture. | ||

Cocke and his research team had spent some time analyzing compiled code produced by IBM's System/370 machines (successors to the System/360s) and had noticed the troubling tendency of machine compilers to not necessarily select the best sequence of instructions to achieve some given task. Fundamentally, his idea was that the instruction set that was available to compilers was "too rich" in the sense that it was hard for compilers to choose the best instruction for a given operation. Cocke therefore proposed that the available instruction set be reduced down to "a set of primitives carefully chosen to exploit the fastest component of the storage hierarchy and provide instructions that can be generated easily by compilers." | Cocke and his research team had spent some time analyzing compiled code produced by IBM's System/370 machines (successors to the System/360s) and had noticed the troubling tendency of machine compilers to not necessarily select the best sequence of instructions to achieve some given task. Fundamentally, his idea was that the instruction set that was available to compilers was "too rich" in the sense that it was hard for compilers to choose the best instruction for a given operation. Cocke therefore proposed that the available instruction set be reduced down to "a set of primitives carefully chosen to exploit the fastest component of the storage hierarchy and provide instructions that can be generated easily by compilers."<ref name="refnum3">Cocke, John (January 2000). "The Evolution of RISC Technology at IBM". IBM Journal of Research and Development 44 (1.2).</ref> | ||

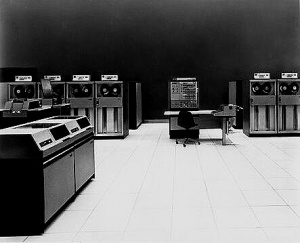

[[Image:ibm_system370.jpg|thumb|right|239px|An IBM System/370 Model 145.<br> A study of the code compiled on these machines gave John Cocke important insights into the development of RISC architecture (Archival Image, courtesy of IBM).]] | |||

It is important to note that, despite the ambiguity of language, "reduced" does not necessarily imply "fewer." This is reduced in the cooking sense of "letting something reduce down". This is often a point of confusion about RISC, but it is perfectly acceptable for a RISC machine to have even <i>more</i> available instructions than a non-RISC machine, provided that those instructions have been boiled down to their most bare-bones primitive operations--i.e., such that each instruction costs very few CPU cycles and, preferably, only one. | It is important to note that, despite the ambiguity of language, "reduced" does not necessarily imply "fewer." This is reduced in the cooking sense of "letting something reduce down". This is often a point of confusion about RISC, but it is perfectly acceptable for a RISC machine to have even <i>more</i> available instructions than a non-RISC machine, provided that those instructions have been boiled down to their most bare-bones primitive operations--i.e., such that each instruction costs very few CPU cycles and, preferably, only one. | ||

Fundamentally, what RISC promised would be a simpler instruction set that would be easier to optimize and pipeline vis-a-vis hard-wiring directly on the chip--such that there would be a symbiosis between instruction set and hardware. This | Fundamentally, what RISC promised would be a simpler instruction set that would be easier to optimize and pipeline vis-a-vis hard-wiring directly on the chip--such that there would be a symbiosis between instruction set and hardware. This became almost a kind of reversal or inversion of what Stanford's William McKeeman called for in 1967, when he suggested that more effort be spent towards "language directed" alternative architectures.<ref name="refnum4">McKeeman, W.M. (November 1967). "Language Directed Computer Design". AFIPS Fall '67 Proceedings of the November 14-16, 1967, Joint Computer Conference.</ref> RISC, as envisioned by Cocke, is an "architecture directed" microcode control language. | ||

Additionally, compilers would be able to compile code for such a machine that was better, faster, and more efficient because they would, essentially, have a reduced set of choices--and therefore less opportunity to be overwhelmed by instructions that perhaps were really only created and intended for the convenience of human programmers in the first place. | Additionally, compilers would be able to compile code for such a machine that was better, faster, and more efficient because they would, essentially, have a reduced set of choices--and therefore less opportunity to be overwhelmed by instructions that perhaps were really only created and intended for the convenience of human programmers in the first place. | ||

With the advent of RISC anything that was not RISC came to be called "CISC", that is "Complex Instruction Set Computer" (such as the x86 architecture). And the prevailing meme (especially on the RISC side of the house) was that RISC was "fast" while CISC was "slow." Almost out of the gate, the burning $64,000 question became: which of these competing firmware architectures is actually better? | |||

==What Do These Instruction Sets Look Like?== | ==What Do These Instruction Sets Look Like?== | ||

What is a complex instruction set? What is a reduced instruction set? Let's look at | ===Comparing Instruction Sets=== | ||

Before we answer the question of which type of instruction set is better let's take a closer look at instruction sets: What is a complex instruction set? What is a reduced instruction set? Let's look at some examples. | |||

Consider the ADD instruction: | Consider the ADD instruction: | ||

| Line 102: | Line 113: | ||

accum,immed 4 3 2 1 2-3 | accum,immed 4 3 2 1 2-3 | ||

*Note: "EA" is Effective Address | *Note: "EA" is Effective Address <br> | ||

(Source: Smith, Zack).<ref name="refnum5">Smith, Zack (2005-2011). "The Intel 8086 / 8088/ 80186 / 80286 / 80386 / 80486 Instruction Set." HTML versions of Intel Documents in Public Domain. Source: http://zsmith.co/intel/intel.html M</ref> | |||

We can see here that there are six different possible ADD instructions that can be called in this sample CISC architecture, with the difference largely being the respective location of either destination or source and in some cases huge clock penalties for various arrangements. | We can see here that there are six different possible ADD instructions that can be called in this sample CISC architecture, with the difference largely being the respective location of either destination or source and in some cases huge clock penalties for various arrangements. | ||

| Line 112: | Line 125: | ||

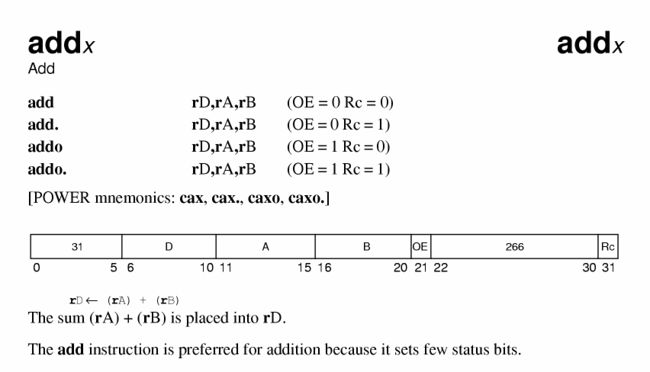

<i>Let us look at the same instruction on the <b>PowerPC (RISC)</b> architecture...</i> | <i>Let us look at the same instruction on the <b>PowerPC (RISC)</b> architecture...</i> | ||

[[Image:ppc_add_instruction_sm.gif|650px]] | [[Image:ppc_add_instruction_sm.gif|650px]] | ||

<i>(Image Source, Delft University of Technology, Netherlands)</i>.<ref name="refnum6">Vakken (1999-2011). "PowerPC Instruction Set." Delft University of Technology, Netherlands. Source: http://pds.twi.tudelft.nl/vakken/in101/labcourse/instruction-set/</ref> | |||

In this architecture we can see that we have various options regarding flags that we can set if need be. But for the most part there is only one add operation: A value in one register is added to the value stored in another register and the result is placed into a third register. | |||

The add instruction has been "reduced down" to its most primitive operation--it does nothing more or less than add. If you want to move the result around later, you need to call a different instruction. This is quite different from the CISC instructions above, which allowed us to merge store operations directly into the add operation. According to RISC theory, these reduced instructions should each require fewer execution cycles and, potentially, might also use slightly fewer cycles across the program as a whole. | |||

In any event, because the instructions are broken up into smaller primitives, compilers will have an easier time generating code that will get program execution from A to B as quickly as possible. | |||
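As a rough illustration of the difference, here is a sketch in Python of a toy machine on which one CISC-style "add register to memory" instruction does the same work as a RISC-style load, add, and store sequence. The function names, register names, and the address <code>0x10</code> are all hypothetical; this models neither real x86 nor real PowerPC semantics (no flags, no timing), only the idea that the merged operation can be split into primitives.

```python
def cisc_add_mem_reg(regs, mem, addr, src):
    """One CISC-style instruction: read memory, add a register, write back."""
    mem[addr] = mem[addr] + regs[src]

def risc_load(regs, mem, dst, addr):
    """RISC-style primitive: memory -> register, nothing else."""
    regs[dst] = mem[addr]

def risc_add(regs, dst, a, b):
    """RISC-style primitive: register + register -> third register."""
    regs[dst] = regs[a] + regs[b]

def risc_store(regs, mem, src, addr):
    """RISC-style primitive: register -> memory, nothing else."""
    mem[addr] = regs[src]

# CISC flavor: one instruction touches memory and does the arithmetic.
cisc_regs = {"r1": 7}
cisc_mem = {0x10: 35}
cisc_add_mem_reg(cisc_regs, cisc_mem, 0x10, "r1")

# RISC flavor: three primitives, each doing exactly one thing.
risc_regs = {"r1": 7, "r2": 0, "r3": 0}
risc_mem = {0x10: 35}
risc_load(risc_regs, risc_mem, "r2", 0x10)
risc_add(risc_regs, "r3", "r2", "r1")
risc_store(risc_regs, risc_mem, "r3", 0x10)

print(cisc_mem[0x10], risc_mem[0x10])
```

Both versions leave the same value in memory. The RISC version simply exposes the intermediate steps, which is what gives a compiler (or a pipeline) room to schedule them.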

===What This Looks Like in Assembly Language=== | |||

Here is assembly code for a simple "Hello World" program, first for ia32, then for ppc32. These code samples are taken from IBM's online developerWorks Library.<ref name="refnum7">Blanchard, Hollis (July, 2002). "PowerPC Assembly: Introduction to assembly on the PowerPC." IBM developerWorks Library. Source: http://www.ibm.com/developerworks/library/l-ppc/</ref> For those who would like to peruse the whole documentation by Hollis Blanchard you can find it [http://www.ibm.com/developerworks/library/l-ppc/ here]. | |||

<i><b>HelloWorld: ia32 assembly</b></i> | |||

<code> | |||

#.data # section declaration | |||

# | |||

#msg: | |||

#.string "Hello, world!\n" | |||

#len = . - msg # length of our dear string | |||

# | |||

#.text # section declaration | |||

# | |||

# # we must export the entry point to the ELF linker or | |||

#.global _start # loader. They conventionally recognize _start as their | |||

# # entry point. Use ld -e foo to override the default. | |||

# | |||

#_start: | |||

# | |||

# # write our string to stdout | |||

# | |||

# movl $len,%edx # third argument: message length | |||

# movl $msg,%ecx # second argument: pointer to message to write | |||

# movl $1,%ebx # first argument: file handle (stdout) | |||

# movl $4,%eax # system call number (sys_write) | |||

# int $0x80 # call kernel | |||

# | |||

# # and exit | |||

# | |||

# movl $0,%ebx # first argument: exit code | |||

# movl $1,%eax # system call number (sys_exit) | |||

# int $0x80 # call kernel | |||

</code> | |||

<br> | |||

<i><b>HelloWorld: ppc32 assembly</b></i> | |||

<code> | |||

#.data # section declaration - variables only | |||

# | |||

#msg: | |||

# .string "Hello, world!\n" | |||

# len = . - msg # length of our dear string | |||

# | |||

#.text # section declaration - begin code | |||

# | |||

# .global _start | |||

#_start: | |||

# | |||

# # write our string to stdout | |||

# | |||

# li 0,4 # syscall number (sys_write) | |||

# li 3,1 # first argument: file descriptor (stdout) | |||

# #second argument: pointer to message to write | |||

# lis 4,msg@ha # load top 16 bits of &msg | |||

# addi 4,4,msg@l # load bottom 16 bits | |||

# li 5,len # third argument: message length | |||

# sc # call kernel | |||

# | |||

# # and exit | |||

# | |||

# li 0,1 # syscall number (sys_exit) | |||

# li 3,1 # first argument: exit code | |||

# sc # call kernel | |||

</code> | |||

<br> | |||

First, I do apologize for the formatting apparently this wiki does not yet fully support the "code" formatting tag. Or I'm doing it wrong. :( | |||

<br> | <br> | ||

<br> | <br> | ||

At any rate, if you're like me you probably noticed that if you were to remove all comments these are really pretty similar in terms of actual lines of code but that, in terms of brevity, ia32 has a slight advantage. The instructions that are writing out the actual string take six lines of code on the ppc32 as opposed to five on the ia32. Both load the three arguments, $stdout, $msg, and $len into three registers and then make a system call to the kernel for "sys_write" then exit. | |||
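The extra line on the ppc32 side comes from the <code>lis</code>/<code>addi</code> pair: PowerPC instructions are a fixed 32 bits wide with 16-bit immediates, so a 32-bit address has to be built in two halves. The sketch below, in Python, models that arithmetic. The helper names and the example address are hypothetical, and the functions only imitate what the assembler's <code>@ha</code> and <code>@l</code> operators and the two instructions compute; they are not a real toolchain API.

```python
MASK32 = 0xFFFFFFFF

def sign_extend16(value):
    """Interpret the low 16 bits of value as a signed 16-bit immediate."""
    value &= 0xFFFF
    return value - 0x10000 if value & 0x8000 else value

def high_adjusted(addr):
    """Models msg@ha: top 16 bits, bumped by one when the low half is negative."""
    return ((addr + 0x8000) >> 16) & 0xFFFF

def low(addr):
    """Models msg@l: bottom 16 bits of the address."""
    return addr & 0xFFFF

def lis(imm16):
    """Models 'lis': place the immediate in the upper half of a 32-bit register."""
    return (sign_extend16(imm16) << 16) & MASK32

def addi(reg_value, imm16):
    """Models 'addi': add a sign-extended 16-bit immediate to a register."""
    return (reg_value + sign_extend16(imm16)) & MASK32

def load_address(addr):
    """The two-instruction sequence from the listing: lis, then addi."""
    r4 = lis(high_adjusted(addr))
    r4 = addi(r4, low(addr))
    return r4

example = 0x1001F234  # hypothetical address of msg
print(hex(load_address(example)))
```

The "adjusted" part matters because <code>addi</code> sign-extends its immediate: when the low half has its top bit set it is added as a negative number, so the high half has to be one larger to compensate.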

This, of course, is a trivial example and writing out a single string is a simple thing--five lines of code vs. six lines of code may seem like a meaningless distinction. That is correct, but even this trivial example illustrates the point that if one were coding something more complex by hand, with dozens or hundreds of loads, moves, and stores, one would much prefer the instruction set that was geared toward code brevity, all other things being equal. | |||

That being said, if machine compilers are taking over the task of writing assembly code (as was increasingly the case when John Cocke was studying the issue in the 1970's), then we really are not particularly sympathetic to how many lines of code <i>the compiler</i> has to write so long as it is fast, efficient, and if not better then at least not dumber than the code a human <i>would</i> write. | |||

==Do Instruction Sets Matter?== | ==Do Instruction Sets Matter?== | ||

===Performance Is About Memory Hierarchy, Not Instruction Set=== | ===Performance Is About Memory Hierarchy, Not Instruction Set=== | ||

Having looked briefly at some samples of both the RISC and CISC instruction sets let us return now to the question at hand: which of these competing firmware architectures is actually better? | |||

The answer, it turns out, is neither. | The answer, it turns out, is neither. | ||

For a few years, yes, it seemed like RISC architectures such as SPARC really were delivering on their promise and outperforming their CISC machine contemporaries. But Robert Garner, one of the original designers of the SPARC, argues compellingly that this is more of a case of faulty correlation. The performance that RISC architectures were achieving was attributed to the nature of the instruction set, but in reality it was something else entirely--the increasing affordability of on-chip memory caches | For a few years, yes, it seemed like RISC architectures such as SPARC really were delivering on their promise and outperforming their CISC machine contemporaries. But Robert Garner, one of the original designers of the SPARC, argues compellingly that this is more of a case of faulty correlation. The performance that RISC architectures were achieving was attributed to the nature of the instruction set, but in reality it was something else entirely--the increasing affordability of on-chip memory caches, which were first implemented on RISC machines.<ref name="refnum8">Garner, Robert (November 2011). From interview discussion.</ref> When queried on the same issue Peter Capek, who developed the Cell processor jointly for IBM and Sony, concurs: it was the major paradigm shift represented by on-chip memory caches, not the instruction set, that mattered most to RISC architectures.<ref name="refnum9">Capek, Peter (November 2011). From phone discussion.</ref> | ||

RISC architectures, like the Sun SPARC, were simply the first to take advantage of the dropping cost of cache memory by placing it in close proximity to the CPU and thus "solving" or at least creatively assuaging one of the fundamental remaining problems of computer engineering--how to fix the huge discrepancy between processor speed and memory access times. Put simply, regardless of instruction set, because of the penalties involved, changes in memory hierarchy dominate issues of microcode. | RISC architectures, like the Sun SPARC, were simply the first to take advantage of the dropping cost of cache memory by placing it in close proximity to the CPU and thus "solving" or at least creatively assuaging one of the fundamental remaining problems of computer engineering--how to fix the huge discrepancy between processor speed and memory access times. Put simply, regardless of instruction set, because of the penalties involved, changes in memory hierarchy dominate issues of microcode. | ||
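A back-of-the-envelope calculation shows why the memory hierarchy can swamp the instruction set. The sketch below, in Python, uses the standard average-memory-access-time formula (hit time plus miss rate times miss penalty) inside a deliberately crude cycle model. Every number in it is a made-up assumption chosen only to show the shape of the argument; none of them is a measurement of SPARC, x86, or any other real machine.

```python
def average_access_cycles(hit_cycles, miss_rate, miss_penalty_cycles):
    """Average memory access time: hit time + miss rate * miss penalty."""
    return hit_cycles + miss_rate * miss_penalty_cycles

def total_cycles(instructions, mem_refs_per_instruction, access_cycles):
    """Crude model: one cycle per instruction plus time spent on memory accesses."""
    return instructions * (1 + mem_refs_per_instruction * access_cycles)

# Hypothetical machine A: compact instruction stream, but no cache,
# so every memory reference pays the full trip to main memory.
no_cache = average_access_cycles(hit_cycles=1, miss_rate=1.0, miss_penalty_cycles=100)
machine_a = total_cycles(1_000_000, 0.3, no_cache)

# Hypothetical machine B: needs 20% more instructions for the same work,
# but has an on-chip cache that satisfies 95% of references.
with_cache = average_access_cycles(hit_cycles=1, miss_rate=0.05, miss_penalty_cycles=100)
machine_b = total_cycles(1_200_000, 0.3, with_cache)

print(machine_a, machine_b, machine_a / machine_b)
```

Under these assumed numbers the machine that executes more instructions still finishes far sooner, because the cache changes the cost of each memory reference by much more than the instruction set changes the instruction count.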

In fact, both Garner and Capek argue that RISC instruction sets have in the last decade become very complex, while CISC instruction sets such as the x86 are now more or less broken down into RISC-type instructions at the CPU level whenever and wherever possible. Additionally, once CISC architectures such as x86 began to incorporate caches directly onto the chip as well, many of the performance advantages of RISC architectures simply disappeared. Like a rising tide, increases in cache sizes and speeds float all boats, as it were. | In fact, both Garner and Capek argue that RISC instruction sets have in the last decade become very complex, while CISC instruction sets such as the x86 are now more or less broken down into RISC-type instructions at the CPU level whenever and wherever possible. Additionally, once CISC architectures such as x86 began to incorporate caches directly onto the chip as well, many of the performance advantages of RISC architectures simply disappeared. Like a rising tide, increases in cache sizes and memory access speeds float all boats, as it were. | ||

Robert Garner cites both this trend and the eventual development of effective register renaming solutions for the x86 (which work around the smaller 8 register limit on that architecture and thus allow for greater parallelism and better out-of-order execution) as the "end of the relevance of the RISC vs. CISC controversy" | Robert Garner cites both this trend and the eventual development of effective register renaming solutions for the x86 (which work around the smaller 8 register limit on that architecture and thus allow for greater parallelism and better out-of-order execution) as the "end of the relevance of the RISC vs. CISC controversy".<ref name="refnum8" /> | ||
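Register renaming is easier to see in miniature. The following sketch in Python gives every write a fresh "physical" register so that reusing a scarce architectural name such as <code>eax</code> no longer forces two unrelated instructions to wait on each other. The <code>rename</code> function, the three-operand tuple format, and the <code>p0</code>, <code>p1</code>, ... naming are hypothetical simplifications; real renaming hardware also has to recycle physical registers and handle many details omitted here.

```python
from itertools import count

def rename(instructions, arch_regs):
    """Rewrite (dest, src1, src2) tuples to use fresh physical registers."""
    fresh = count()
    # Each architectural register starts out mapped to its own physical register.
    mapping = {reg: f"p{next(fresh)}" for reg in arch_regs}
    renamed = []
    for dest, src1, src2 in instructions:
        # Sources read whichever physical register currently holds the value.
        s1, s2 = mapping[src1], mapping[src2]
        # Every write gets a new physical register, so a later write to the
        # same architectural name cannot clobber a value still being read.
        mapping[dest] = f"p{next(fresh)}"
        renamed.append((mapping[dest], s1, s2))
    return renamed

program = [
    ("eax", "ebx", "ecx"),  # eax = ebx op ecx
    ("edx", "eax", "ebx"),  # reads eax: a true dependency on the line above
    ("eax", "ecx", "ecx"),  # reuses eax: only a name conflict, not a data flow
]

renamed = rename(program, ["eax", "ebx", "ecx", "edx"])
for line in renamed:
    print(line)
```

After renaming, the third instruction writes a different physical register than the first, so it no longer has to wait for the second instruction to finish reading the old <code>eax</code>. The true dependency between the first two instructions is preserved.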

===Moore's Law Killed the RISC vs. CISC Controversy=== | ===Moore's Law Killed the RISC vs. CISC Controversy=== | ||

| Line 135: | Line 227: | ||

In the end it is nothing less than Moore's Law that killed the RISC vs. CISC controversy. Advancing technology, particularly the increasing affordability of procurement and manufacture of both transistors and cache memory, and the ability to jam more and more of them into smaller and smaller spaces allowed computer designers of either paradigm to essentially engineer away the perceived benefits of the alternative architecture. | In the end it is nothing less than Moore's Law that killed the RISC vs. CISC controversy. Advancing technology, particularly the increasing affordability of procurement and manufacture of both transistors and cache memory, and the ability to jam more and more of them into smaller and smaller spaces allowed computer designers of either paradigm to essentially engineer away the perceived benefits of the alternative architecture. | ||

I asked Robert Garner whether he thought that once we have hit the wall of Moore's Law, once we reach the physical limit of computer architecture with respect to how densely we can pack a chip, the question of RISC vs. CISC would be any more meaningful going forward or would simply be a legacy question. He felt that even in that situation the issues of memory hierarchy would still be the dominating variable with respect to processor performance and that, generally speaking, little if anything would be gained by talking about RISC vs. CISC since they have more or less converged towards each other in terms of fundamental design: cache, register renaming, pipelining, etc | I asked Robert Garner whether he thought that once we have hit the wall of Moore's Law, once we reach the physical limit of computer architecture with respect to how densely we can pack a chip, the question of RISC vs. CISC would be any more meaningful going forward or would simply be a legacy question. He felt that even in that situation the issues of memory hierarchy would still be the dominating variable with respect to processor performance and that, generally speaking, little if anything would be gained by talking about RISC vs. CISC since they have more or less converged towards each other in terms of fundamental design: cache, register renaming, pipelining, etc.<ref name="refnum8" /> | ||

Peter Capek also agreed about this convergence of RISC and CISC architectures when I spoke with him over the phone. He cited his own experience working on the Cell Processor, saying that despite being a RISC architecture it too had a fairly complex instruction set as RISC architectures traditionally go. It is the other things put into the architecture of the chip, such as the 8 Synergistic Processing Elements (SPEs), cache, and the design of the bus that define its performance benchmarks--not the instruction set, which is no longer purely RISC anyway | Peter Capek also agreed about this convergence of RISC and CISC architectures when I spoke with him over the phone. He cited his own experience working on the Cell Processor, saying that despite being a RISC architecture it too had a fairly complex instruction set as RISC architectures traditionally go. It is the other things put into the architecture of the chip, such as the 8 Synergistic Processing Elements (SPEs), cache, and the design of the bus that define its performance benchmarks--not the instruction set, which is no longer purely RISC anyway.<ref name="refnum9" /> | ||

==Conclusion== | ==Conclusion== | ||

Firmware or microcode was a powerful idea when Wilkes first proposed it in 1951. It remains so today. Whatever small price in performance we pay for firmware is more than made up for by the simplicity and flexibility it gives us in the development of increasingly complex processors and architectures. Moore's Law gives us at least some guarantee that when necessary we can make up that performance loss elsewhere. | Firmware or microcode was a powerful idea when Wilkes first proposed it in 1951. It remains so today. Whatever small price in performance we pay for firmware is more than made up for by the simplicity and flexibility it gives us in the development of increasingly complex processors and architectures. Additionally, Moore's Law gives us at least some guarantee that when necessary we can make up that small performance loss with huge performance gains elsewhere. | ||

At the same time, whether that firmware is called RISC or CISC or whether it, in reality, is (as is increasingly the case) some combination of both hardly matters at all. Such designations may no longer be as significant as the other technologies that accompanied the development of the RISC architecture; that is, caching, pipe-lining, and register renaming to name a few. | |||

But instruction set, itself? CISC vs. RISC? That no longer matters. | |||

==References== | ==References== | ||

==Comments & | <references /> | ||

==Comments, Responses, & Discussions== | |||

<i>Please place your comments or responses here...thanks.</i> | <i>Please place your comments or responses here...thanks.</i> | ||

--[[User:Srterpe|Srterpe]] 14:15, 11 December 2011 (EST) | --[[User:Srterpe|Srterpe]] 14:15, 11 December 2011 (EST) | ||

[[Category:Computer architecture|Instruction]] | |||

Revision as of 19:30, 18 February 2014

About the Author

My name is Steve Terpe. I'm majoring in Computer Science and will be graduating this fall. Currently I'm taking the History of Computing class at San Jose State University (http://www.cs.sjsu.edu/~mak/CS185C/).

I welcome your comments and advice and job offers!

Summary

This article represents the culmination of a semester's worth of research and discussion with Robert Garner, Peter Capek, and Paul McJones, on the varied topics of von Neumann and non-von Neumann machines, alternative architectures, Turing complete vs. non Turing complete languages, CISC, RISC, PowerPC, and the Cell Processor.

Like many would-be historians, I had only a vague idea starting out what this project would finally be about though I knew it should be about alternative architectures in some manner or fashion. As the debate between von Neumann and non-von Neumann architectures is so largely one-sided it can hardly be called a debate at all, I decided to focus on the issue of RISC vs. CISC architectures.

What I found out in the course of my research was that this debate, too, is now largely a non-issue, though it was quite important for a brief period of time. That is great news for computer architecture but not such great news for a would-be historian/researcher of the subject.

In the end, I have decided to explain how we arrived where we are: a place where instruction sets no longer matter.

Introduction

This article is largely based on interviews I conducted with both Robert Garner and Peter Capek.

Robert Garner developed both the Xerox Star and the Sun SPARC 4/200 in his years at Xerox and Sun. He was also involved in many more SPARC projects at Sun. He is currently at IBM's Alameda facility, where he co-developed the IceCube brick-based 3D scalable server and manages the group responsible for the RAID stack for GPFS. He is also currently leading the team that has restored two IBM 1401s for the Computer History Museum.

He was gracious enough to spend several hours with me discussing his thoughts on years of developing both CISC and RISC systems and gave me a tour of IBM's research facility.

He was the final speaker on the CS185C Computer History lecture circuit. All the lectures were recorded.

Find it here: http://www.cs.sjsu.edu/~mak/SpeakerSeries/index.html

Peter Capek worked with John Cocke at IBM during the 1970s. Before retiring he worked on the design of the Cell Processor, jointly developed by IBM and Sony for the PlayStation 3 home console system. He says that it's the 8 SPEs that give the Cell Processor its number-crunching power, not its RISC instruction set--which is still pretty complex, by the way.

Peter was kind enough to field some questions over the phone on a Sunday afternoon. It is truly too bad that he doesn't live in the Bay Area because I believe the lecture hall would have been packed for one of the designers of the PS3's Cell Processor.

...Of course, I would be remiss to forget:

Paul McJones to whom the final form of this article and project owes a great deal.

Back in the day, Paul worked with John Backus on alternative architectures and languages. He did a lot of other cool stuff as well before finally making his way to Adobe many years later, where he worked on the PixelBender programming language used in Photoshop & After Effects. He also co-authored Elements of Programming. Get it here!

He is currently retired but can sometimes be found at the Computer History Museum. Paul graciously took the time to spend several hours talking to me about his work with John Backus, non-von Neumann machines, why they were essentially a non-issue, and why my project would be better served by finding some alternative topic. It was great advice.

A note:

I should probably point out that I was extremely lucky to have the opportunity to speak with these three gentlemen. I think I was maybe the only student who was able to find so many experts within the field that had the time and the interest to sit down for an interview. Some students struggled all semester to contact even one expert able to make time for them. In that sense, I was extremely blessed and am deeply grateful.

A final note:

If I have inadvertently attributed to Robert, Peter, or Paul some statement that seems really wrong, before you flame them please assume that the error rests solely with me.

Historical Beginnings of RISC

Background: Microcode

Most people today are probably more familiar with "firmware" than "microcode." Originally, when Ascher Opler coined the term "firmware" in 1967, both referred to pretty much the same thing--the contents of the writable control store that defined the instruction set of a given computer.[1] Later, the definition of "firmware" would be expanded to its current meaning of any microcode that exists, anywhere on the chip, for any purpose, while "microcode" would retain its original scope but, perhaps being too technical, would remain largely restricted to the ivory towers of computer engineering and thus outside of the public consciousness.

Indeed, the use of the term "microcode" can apparently cause confusion even in modern software engineering and computer science circles. At a recent talk given at San Jose State University, SPARC designer and RISC pioneer Dr. Robert Garner spoke at great length about microcode. At the end of his presentation, during the Q&A session, a student asked quite apologetically, "I'm still confused...what is microcode?"[2]

In fact, the entire Q&A session mostly revolved around the need for clarification about "microcode." I think there would have been less confusion among the CS & SE students in attendance if the term "firmware" had been used instead. Most are undoubtedly more familiar with the existence, if not the role, of "firmware" in today's computers.

In any event, for clarity and accessibility, and having witnessed the result otherwise, this article will use the term "firmware" but restrict its meaning to that originally defined by Opler--i.e., to mean "microcode."

Development of Firmware

Prior to the advent of firmware, the idea of an instruction set was sort of meaningless. The operation of a given machine's CPU was defined solely by the physical layout of wiring. As there were no stored programs in these early machines, reprogramming the machine for a different task was a time-consuming operation for early computer programmers. Later, the first stored-program machines were developed, but in these early cases the control units of the CPUs were still "hard-wired."

In 1951 Maurice Wilkes of Cambridge University proposed what he called "microprogramming." His idea was fundamentally simple--that the CPU could be more generally designed and that its control unit could be defined in a program store in the same way as executable "program" code was. This microcode would define the instruction set that would be available to the programmer of a particular CPU. Microcode could be added or removed as necessary. Microcode would allow the instruction set of a computer to be altered or edited without a prerequisite change in hardware, wiring, or soldering. This was an intriguing idea for a variety of reasons, the most important of which was purely economic: such a scenario would significantly reduce development costs and give programmers and computer designers much greater flexibility in design. Unfortunately, this was to remain, outside of a few academic attempts, largely theoretical for at least the next decade.
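To make Wilkes's idea a little more concrete, here is a minimal sketch in Python. It is not a model of any real machine: the register names, the micro-operations, and the three-instruction set are all invented for illustration. The point is only that the "hardware" (the micro-operation functions) stays fixed while the instruction set lives in a table, the control store, that can be edited.

```python
# Toy microprogrammed control unit (hypothetical; every name here is invented).

def new_machine():
    # A = accumulator, MAR = memory address register, MDR = memory data register
    return {"A": 0, "MAR": 0, "MDR": 0, "mem": {}}

# Fixed micro-operations: these stand in for the wired datapath.
def mar_from_operand(m, operand):
    m["MAR"] = operand

def mdr_from_mem(m, operand):
    m["MDR"] = m["mem"].get(m["MAR"], 0)

def a_from_mdr(m, operand):
    m["A"] = m["MDR"]

def a_plus_mdr(m, operand):
    m["A"] = m["A"] + m["MDR"]

def mem_from_a(m, operand):
    m["mem"][m["MAR"]] = m["A"]

# The control store: each opcode is just a sequence of micro-operations.
CONTROL_STORE = {
    "LOAD":  [mar_from_operand, mdr_from_mem, a_from_mdr],
    "ADD":   [mar_from_operand, mdr_from_mem, a_plus_mdr],
    "STORE": [mar_from_operand, mem_from_a],
}

def run(program, control_store, machine):
    """Execute (opcode, operand) pairs by stepping through their microcode."""
    for opcode, operand in program:
        for micro_op in control_store[opcode]:
            micro_op(machine, operand)
    return machine

# A "firmware upgrade": a new instruction is added without touching
# any of the micro-operations above.
UPGRADED_STORE = dict(CONTROL_STORE)
UPGRADED_STORE["ADDM"] = [mar_from_operand, mdr_from_mem, a_plus_mdr, mem_from_a]
```

Running `[("LOAD", 10), ("ADD", 11), ("STORE", 12)]` against `CONTROL_STORE` adds two memory cells and stores the sum, while `ADDM` exists only in the upgraded table. Swapping one table for another is, in miniature, what switching out a control store does.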

Robert Garner cites IBM's System/360 family of computers as essentially the first successful attempt to commercially develop a CPU with control units defined in firmware.[2] This decision allowed IBM to maintain a great deal of compatibility between the individual models of the System/360 series despite the huge difference in performance and pricing between high-end and low-end system packages. Commercially this was more or less a rout for IBM as customers would tend to migrate from one pricing package to the next rather than purchase a competitor's system when their computing needs changed. This was in order to take advantage of System/360 cross-compatibility--all the programs one had written on 360/30 could run on the 40, the 62, the 65 and so on.

By 1977, when IBM stopped selling the System/360, there had been a total of 14 different models at various price points. Obviously, the outrageous success of the System/360 was in large part due to the cross-compatibility provided by the System/360 firmware as well as the fact that both field repairs and field upgrades on these machines could often be as simple as switching out the control stores--in effect the first of many happy "firmware upgrades." By the end of the 1960s firmware was firmly established as the direction of computer architecture.

Only One Problem...

The System/360 series of machines was a huge commercial success for IBM, but these machines were a far cry from the fastest computers being built at the time. The problem with firmware was essentially speed. This extra firmware layer that mediated between program and hardware was incurring a significant speed cost over what hard-wired CPUs were capable of.

In 1975, while working on the IBM 801 and looking at the problem of how to make IBM's firmware less painful from a performance point of view, John Cocke proposed what would essentially become the "Reduced Instruction Set Computer" or "RISC" architecture.

Cocke and his research team had spent some time analyzing compiled code produced by IBM's System/370 machines (successors to the System/360s) and had noticed the troubling tendency of machine compilers to not necessarily select the best sequence of instructions to achieve some given task. Fundamentally, his idea was that the instruction set that was available to compilers was "too rich" in the sense that it was hard for compilers to choose the best instruction for a given operation. Cocke therefore proposed that the available instruction set be reduced down to "a set of primitives carefully chosen to exploit the fastest component of the storage hierarchy and provide instructions that can be generated easily by compilers."[3]

[Figure: A study of the code compiled on these machines gave John Cocke important insights into the development of RISC architecture. Archival image, courtesy of IBM.]

It is important to note that, despite the ambiguity of language, "reduced" does not necessarily imply "fewer." This is "reduced" in the cooking sense of "letting something reduce down." This is often a point of confusion about RISC, but it is perfectly acceptable for a RISC machine to have even more available instructions than a non-RISC machine, provided that those instructions have been boiled down to their most bare-bones primitive operations--i.e., such that each instruction costs very few and, preferably, only one CPU cycle.

Fundamentally, what RISC promised would be a simpler instruction set that would be easier to optimize and pipeline vis-a-vis hard-wiring directly on the chip--such that there would be a symbiosis between instruction set and hardware. This became almost a kind of reversal or inversion of what Stanford's William McKeeman called for in 1967, when he suggested that more effort be spent towards "language directed" alternative architectures.[4] RISC, as envisioned by Cocke, is an "architecture directed" microcode control language.

Additionally, compilers would be able to compile code for such a machine that was better, faster, and more efficient because they would, essentially, have a reduced set of choices--and therefore less opportunity to be overwhelmed by instructions that perhaps were really only created and intended for the convenience of human programmers in the first place.

With the advent of RISC anything that was not RISC came to be called "CISC", that is "Complex Instruction Set Computer" (such as the x86 architecture). And the prevailing meme (especially on the RISC side of the house) was that RISC was "fast" while CISC was "slow." Almost out of the gate, the burning $64,000 question became: which of these competing firmware architectures is actually better?

What Do These Instruction Sets Look Like?

Comparing Instruction Sets

Before we answer the question of which type of instruction set is better let's take a closer look at instruction sets: What is a complex instruction set? What is a reduced instruction set? Let's look at some examples.

Consider the ADD instruction:

On x86 processors (CISC) it looks like this:

ADD dest,src

Adds "src" to "dest", replacing the original contents of "dest".

Both operands are binary.

                          Clocks               Size
    Operands       808x   286   386   486      Bytes
    reg,reg          3     2     2     1       2
    mem,reg        16+EA   7     7     3       2-4   (W88=24+EA)
    reg,mem         9+EA   7     6     2       2-4   (W88=13+EA)
    reg,immed        4     3     2     1       3-4
    mem,immed      17+EA   7     7     3       3-6   (W88=23+EA)
    accum,immed      4     3     2     1       2-3

*Note: "EA" is Effective Address

(Source: Smith, Zack).[5]

We can see here that there are six different possible ADD instructions that can be called in this sample CISC architecture, with the difference largely being the respective location of either destination or source and in some cases huge clock penalties for various arrangements.

For the compiler, the difficulty is to figure out which instruction would be the most efficient to use, given what it has just done and what it needs to do next or even farther down the line. The situation is made even worse if you have a 63-pass compiler with only 16K of memory available to do this.

Additionally, it should be noted that most of these instructions were developed to make life easier for human programmers who don't necessarily want to be bothered with every load and store, hence the ability to add directly from or into a memory location, etc.
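The clock penalties in the ADD table can be made concrete with a small lookup. The sketch below, in Python, copies only the 808x and 486 columns from the table above (ignoring the W88 word-operand variants); the function name and the idea of passing the effective-address cost in as a parameter are my own, since "EA" depends on the addressing mode and the table does not give a value for it.

```python
# ADD clock counts for the 808x column of the table above.
# Each entry is (base clocks, whether the EA cost is added on top).
ADD_808X = {
    "reg,reg":     (3, False),
    "mem,reg":     (16, True),
    "reg,mem":     (9, True),
    "reg,immed":   (4, False),
    "mem,immed":   (17, True),
    "accum,immed": (4, False),
}

# The same forms on the 486, where no EA term appears in the table.
ADD_486 = {
    "reg,reg": 1, "mem,reg": 3, "reg,mem": 2,
    "reg,immed": 1, "mem,immed": 3, "accum,immed": 1,
}

def add_clocks_808x(form, ea_clocks):
    """Clocks for one ADD on the 808x; ea_clocks is supplied by the caller."""
    base, uses_ea = ADD_808X[form]
    return base + (ea_clocks if uses_ea else 0)
```

With an illustrative effective-address cost of 6 clocks, `add_clocks_808x("mem,reg", 6)` comes to 22 clocks against 3 for `"reg,reg"`. On the 486 the same two forms cost 3 and 1, so the gap a compiler has to reason about shrinks considerably across generations.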

Let us look at the same instruction on the PowerPC (RISC) architecture...

(Image Source, Delft University of Technology, Netherlands).[6]

In this architecture we can see that we have various options regarding flags that we can set if need be. But for the most part there is only one add operation: a value in one register is added to the value stored in another register and the result is placed into a third register.

The add instruction has been "reduced down" to its most primitive operation--it does nothing more or less than add. If you want to move the result around later, you need to call a different instruction. This is quite different from the CISC instructions above, which allowed us to merge store operations directly into the add operation. According to RISC theory, these reduced instructions should each require fewer execution cycles and, potentially, might also use slightly fewer cycles across the program as a whole.

In any event, because the instructions are broken up into smaller primitives, compilers will have an easier time generating code that will get program execution from A to B as quickly as possible.

What This Looks Like in Assembly Language

Here is assembly code for a simple "Hello World" program, first for ia32, then for ppc32. These code samples are taken from IBM's online developerWorks Library.[7] For those who would like to peruse the whole documentation by Hollis Blanchard you can find it here.

HelloWorld: ia32 assembly

    .data                       # section declaration

    msg:
        .string "Hello, world!\n"
        len = . - msg           # length of our dear string

    .text                       # section declaration

                                # we must export the entry point to the ELF linker or
        .global _start          # loader. They conventionally recognize _start as their
                                # entry point. Use ld -e foo to override the default.

    _start:

    # write our string to stdout

        movl    $len,%edx       # third argument: message length
        movl    $msg,%ecx       # second argument: pointer to message to write
        movl    $1,%ebx         # first argument: file handle (stdout)
        movl    $4,%eax         # system call number (sys_write)
        int     $0x80           # call kernel

    # and exit

        movl    $0,%ebx         # first argument: exit code
        movl    $1,%eax         # system call number (sys_exit)
        int     $0x80           # call kernel

HelloWorld: ppc32 assembly

    .data                       # section declaration - variables only

    msg:
        .string "Hello, world!\n"
        len = . - msg           # length of our dear string

    .text                       # section declaration - begin code

        .global _start

    _start:

    # write our string to stdout

        li      0,4             # syscall number (sys_write)
        li      3,1             # first argument: file descriptor (stdout)
                                # second argument: pointer to message to write
        lis     4,msg@ha        # load top 16 bits of &msg
        addi    4,4,msg@l       # load bottom 16 bits
        li      5,len           # third argument: message length
        sc                      # call kernel

    # and exit

        li      0,1             # syscall number (sys_exit)
        li      3,1             # first argument: exit code
        sc                      # call kernel

First, I do apologize for the formatting apparently this wiki does not yet fully support the "code" formatting tag. Or I'm doing it wrong. :(

At any rate, if you're like me you probably noticed that if you were to remove all comments these are really pretty similar in terms of actual lines of code but that, in terms of brevity, ia32 has a slight advantage. The instructions that write out the actual string take six lines of code on the ppc32 as opposed to five on the ia32. Both load the three arguments, $stdout, $msg, and $len, into three registers and then make a system call to the kernel for "sys_write", then exit.

This, of course, is a trivial example and writing out a single string is a simple thing--five lines of code vs. six lines of code may seem like a meaningless distinction. That is correct, but even this trivial example illustrates the point that if one were coding something more complex by hand, with dozens or hundreds of loads, moves, and stores, one would much prefer the instruction set that was geared toward code brevity, all other things being equal.

That being said, if machine compilers are taking over the task of writing assembly code (as was increasingly the case when John Cocke was studying the issue in the 1970s), then we really are not particularly sympathetic to how many lines of code the compiler has to write so long as it is fast, efficient, and if not better then at least not dumber than the code a human would write.
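The extra line in the ppc32 listing comes from the `lis`/`addi` pair: a fixed-width instruction cannot hold a full 32-bit address, so the address of `msg` is loaded in two 16-bit halves. The Python sketch below mimics that arithmetic. The function names are mine, and it assumes the usual behavior that `addi` sign-extends its 16-bit immediate, which is why the high half (`@ha`, "high adjusted") has to be bumped by one whenever the low half has its top bit set.

```python
def split_ha_lo(addr):
    """Split a 32-bit address into the halves that msg@ha and msg@l stand for."""
    lo = addr & 0xFFFF
    # Adding 0x8000 before shifting compensates for the sign extension
    # that addi will apply to the low half.
    ha = ((addr + 0x8000) >> 16) & 0xFFFF
    return ha, lo

def sign_extend16(value):
    """Interpret a 16-bit value as a signed integer."""
    return value - 0x10000 if value & 0x8000 else value

def lis_addi(ha, lo):
    """Mimic 'lis r,ha' followed by 'addi r,r,lo' on a 32-bit register."""
    reg = (ha << 16) & 0xFFFFFFFF                      # lis: immediate into the top half
    reg = (reg + sign_extend16(lo)) & 0xFFFFFFFF       # addi: add sign-extended low half
    return reg
```

For any 32-bit address, `lis_addi(*split_ha_lo(addr))` gives back `addr`. The ia32 listing gets the same pointer into a register with a single `movl $msg,%ecx`, because its variable-length encoding can carry the whole 32-bit immediate.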

Do Instruction Sets Matter?

Performance Is About Memory Hierarchy, Not Instruction Set

Having looked briefly at some samples of both the RISC and CISC instruction sets let us return now to the question at hand: which of these competing firmware architectures is actually better?

The answer, it turns out, is neither.

For a few years, yes, it seemed like RISC architectures such as SPARC really were delivering on their promise and outperforming their CISC machine contemporaries. But Robert Garner, one of the original designers of the SPARC, argues compellingly that this is more a case of faulty correlation. The performance that RISC architectures were achieving was attributed to the nature of the instruction set, but in reality it was something else entirely--the increasing affordability of on-chip memory caches, which were first implemented on RISC machines.[8] When queried on the same issue, Peter Capek, who developed the Cell processor jointly for IBM and Sony, concurs: it was the major paradigm shift represented by on-chip memory caches, not the instruction set, that mattered most to RISC architectures.[9]

RISC architectures like the Sun SPARC were simply the first to take advantage of the dropping cost of cache memory by placing it in close proximity to the CPU and thus "solving," or at least creatively assuaging, one of the fundamental remaining problems of computer engineering--how to fix the huge discrepancy between processor speed and memory access times. Put simply, regardless of instruction set, because of the penalties involved, changes in memory hierarchy dominate issues of microcode.
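A back-of-the-envelope calculation shows why memory hierarchy can swamp instruction-set effects. The Python sketch below uses the standard textbook estimate that effective cycles per instruction equal a base figure plus memory references per instruction times miss rate times miss penalty. Every number in the usage note is hypothetical, chosen only to illustrate the shape of the argument; none comes from Garner, Capek, or any measured machine.

```python
def effective_cpi(base_cpi, mem_refs_per_instr, miss_rate, miss_penalty_cycles):
    """Cycles per instruction once memory stalls are included.

    base_cpi            -- cycles per instruction if memory never stalled
    mem_refs_per_instr  -- average memory references per instruction
    miss_rate           -- fraction of references that go to main memory
    miss_penalty_cycles -- cycles lost on each such reference
    """
    return base_cpi + mem_refs_per_instr * miss_rate * miss_penalty_cycles

def speedup(cpi_before, cpi_after):
    """How many times faster the 'after' configuration runs the same instructions."""
    return cpi_before / cpi_after
```

Suppose a "RISC-like" design has a base CPI of 1.0 and a "CISC-like" design 1.5, both making 1.3 memory references per instruction with a 20-cycle trip to main memory. With no cache (miss rate 1.0) they come out at roughly 27 and 27.5 cycles per instruction; with a cache that catches 95% of references (miss rate 0.05) they drop to roughly 2.3 and 2.8. In this made-up scenario the cache is worth more than a tenfold improvement to either design, while the instruction-set difference is worth half a cycle.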

In fact, both Garner and Capek argue that RISC instruction sets have in the last decade become very complex, while CISC instruction sets such as the x86 are now more or less broken down into RISC-type instructions at the CPU level whenever and wherever possible. Additionally, once CISC architectures such as x86 began to incorporate caches directly onto the chip as well, many of the performance advantages of RISC architectures simply disappeared. Like a rising tide, increases in cache sizes and memory access speeds float all boats, as it were.

Robert Garner cites both this trend and the eventual development of effective register renaming solutions for the x86 (which work around the smaller 8 register limit on that architecture and thus allow for greater parallelism and better out-of-order execution) as the "end of the relevance of the RISC vs. CISC controversy".[8]
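Register renaming is easier to appreciate with a small example. The sketch below, in Python, maps the eight x86 architectural register names onto a larger pool of physical registers, handing out a fresh physical register for every write. It is a deliberately simplified model under my own assumptions: the pool size of 16 is arbitrary, every instruction has one destination and two sources, and physical registers are never recycled, which real hardware does once instructions retire.

```python
X86_REGS = ["eax", "ecx", "edx", "ebx", "esp", "ebp", "esi", "edi"]

def rename(instructions, arch_regs=X86_REGS, num_physical=16):
    """Rename (dest, src1, src2) triples from architectural to physical registers.

    Raises IndexError if the physical pool runs out, since this sketch
    never frees a physical register.
    """
    # Initially architectural register i lives in physical register i.
    mapping = {reg: i for i, reg in enumerate(arch_regs)}
    free = list(range(len(arch_regs), num_physical))
    renamed = []
    for dest, src1, src2 in instructions:
        # Sources are read through the current mapping...
        p1, p2 = mapping[src1], mapping[src2]
        # ...and every write gets a brand-new physical register.
        new = free.pop(0)
        mapping[dest] = new
        renamed.append((f"p{new}", f"p{p1}", f"p{p2}"))
    return renamed

program = [
    ("eax", "ebx", "ecx"),  # eax = ebx + ecx
    ("edx", "eax", "eax"),  # edx = eax + eax   (truly depends on the line above)
    ("eax", "esi", "edi"),  # eax = esi + edi   (only reuses the *name* eax)
]
```

After `rename(program)`, the third instruction writes a different physical register from the first and reads nothing produced by the first two, so it can execute out of order or in parallel with them. Only the genuine dependence between the first and second instructions survives, which is how a small architectural register file stops being the bottleneck.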

Moore's Law Killed the RISC vs. CISC Controversy

In the end it is nothing less than Moore's Law that killed the RISC vs. CISC controversy. Advancing technology, particularly the increasing affordability of procurement and manufacture of both transistors and cache memory, and the ability to jam more and more of them into smaller and smaller spaces allowed computer designers of either paradigm to essentially engineer away the perceived benefits of the alternative architecture.

I asked Robert Garner whether, once we have hit the wall of Moore's Law and reached the physical limit on how densely we can pack a chip, the question of RISC vs. CISC would become any more meaningful going forward or would simply remain a legacy question. He felt that even in that situation the memory hierarchy would still be the dominating variable with respect to processor performance and that, generally speaking, little if anything would be gained by talking about RISC vs. CISC, since they have more or less converged towards each other in terms of fundamental design: cache, register renaming, pipelining, etc.[8]

Peter Capek also agreed about this convergence of RISC and CISC architectures when I spoke with him over the phone. He cited his own experience working on the Cell Processor, saying that despite being a RISC architecture it too had a fairly complex instruction set as RISC architectures traditionally go. It is the other things put into the architecture of the chip, such as the 8 Synergistic Processing Elements (SPEs), cache, and the design of the bus, that define its performance benchmarks--not the instruction set, which is no longer purely RISC anyway.[9]

Conclusion

Firmware or microcode was a powerful idea when Wilkes first proposed it in 1951. It remains so today. Whatever small price in performance we pay for firmware is more than made up for by the simplicity and flexibility it gives us in the development of increasingly complex processors and architectures. Additionally, Moore's Law gives us at least some guarantee that when necessary we can make up that small performance loss with huge performance gains elsewhere.

At the same time, whether that firmware is called RISC or CISC, or whether it is in reality (as is increasingly the case) some combination of both, hardly matters at all. Such designations may no longer be as significant as the other technologies that accompanied the development of the RISC architecture: caching, pipelining, and register renaming, to name a few.

But instruction set, itself? CISC vs. RISC? That no longer matters.

References

[1] Opler, Ascher (January 1967). "Fourth-Generation Software". Datamation 13 (1): 22–24.

[2] Garner, Robert (December 2011). Lecture given at San Jose State University.

[3] Cocke, John (January 2000). "The Evolution of RISC Technology at IBM". IBM Journal of Research and Development 44 (1.2).

[4] McKeeman, W.M. (November 1967). "Language Directed Computer Design". AFIPS Fall '67 Proceedings of the November 14-16, 1967, Joint Computer Conference.

[5] Smith, Zack (2005-2011). "The Intel 8086 / 8088 / 80186 / 80286 / 80386 / 80486 Instruction Set." HTML versions of Intel Documents in Public Domain. Source: http://zsmith.co/intel/intel.html

[6] Vakken (1999-2011). "PowerPC Instruction Set." Delft University of Technology, Netherlands. Source: http://pds.twi.tudelft.nl/vakken/in101/labcourse/instruction-set/

[7] Blanchard, Hollis (July 2002). "PowerPC Assembly: Introduction to assembly on the PowerPC." IBM developerWorks Library. Source: http://www.ibm.com/developerworks/library/l-ppc/

[8] Garner, Robert (November 2011). From interview discussion.

[9] Capek, Peter (November 2011). From phone discussion.

Comments, Responses, & Discussions

Please place your comments or responses here...thanks.

--Srterpe 14:15, 11 December 2011 (EST)