Revision as of 23:34, 10 December 2011

Why Instruction Sets No Longer Matter

About the Author

My name is Steven R. Terpe. I'm a student in the History of Computing class at San Jose State University (http://www.cs.sjsu.edu/~mak/CS185C/). This is a work in progress that will turn into a final article by the end of the semester.

I welcome your comments and advice!

Summary

Background: Microcode

Most people today are probably more familiar with "firmware" than with "microcode." Originally, when Ascher Opler coined the term "firmware" in 1967, both words referred to pretty much the same thing: the contents of the writable control store that defined the instruction set of a given computer.[1] Later, the definition of "firmware" expanded to its current meaning of any microcode that exists, anywhere on the chip, for any purpose. "Microcode" retained its original scope but, perhaps because it was too technical, remained largely restricted to the ivory towers of computer engineering and thus outside the public consciousness.

Indeed, the over-specialized and semi-archaic term "microcode" can cause confusion even in modern software engineering and computer science circles. At a recent talk at San Jose State, RISC pioneer Dr. Robert Garner spoke at great length about microcode. At the end of his presentation, during the Q&A session, a student asked quite bluntly, "I'm still confused...what is microcode?" In fact, most of the Q&A session revolved around the need for clarification about "microcode." I have no doubt that if Dr. Garner had simply said "firmware" instead, there would have been little confusion among the CS & SE students in attendance about its role in today's computers.

In any event, for clarity and accessibility, and having witnessed the result otherwise, this article will use the term "firmware" but restrict its meaning to the one originally defined by Opler, i.e., "microcode."

Development of Firmware

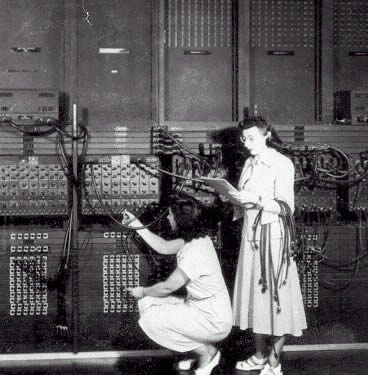

Prior to the advent of firmware, the idea of an instruction set was more or less meaningless. The operation of a given machine's CPU was defined solely by the physical layout of its wiring. As there were no stored programs in these early machines, reprogramming a machine for a different task was a time-consuming operation. Later, the first stored-program machines were developed, but in these early cases the control units of the CPUs were still "hard-wired."

In 1951 Maurice Wilkes of Cambridge University proposed what he called "microprogramming." His idea was fundamentally simple: the CPU could be designed more generally, and its control unit could be defined in a program store in the same way as executable "program" code was. This microcode would define the instruction set available to the programmer of a particular CPU. Microcode could be added or removed as necessary, so the instruction set of a computer could be altered without a corresponding change in hardware, wiring, or soldering. This was an intriguing idea for a variety of reasons, the most important of which was purely economic: it would significantly reduce development costs and give programmers and computer designers much greater flexibility in design. Unfortunately, outside of a few academic attempts, the idea was to remain largely theoretical for at least the next decade.
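
To make the idea concrete, here is a minimal toy sketch of a control unit defined as data rather than wiring. It is not a model of Wilkes's design or of any real machine: the micro-operations, the instruction names (LOAD, ADD, STORE, ADD2X), and the helpers `new_machine` and `run` are all hypothetical names chosen for illustration.

```python
# Toy sketch only: every name here is hypothetical and models no real machine.

def new_machine(memory):
    """Fixed 'hardware': an accumulator, a temporary register, and memory."""
    return {"acc": 0, "tmp": 0, "mem": list(memory)}

# Micro-operations the fixed hardware can perform directly.
def clear_acc(m, addr):
    m["acc"] = 0

def fetch_tmp(m, addr):
    m["tmp"] = m["mem"][addr]

def add_tmp(m, addr):
    m["acc"] += m["tmp"]

def store_acc(m, addr):
    m["mem"][addr] = m["acc"]

# The control store: each programmer-visible instruction is a micro-op sequence.
CONTROL_STORE = {
    "LOAD": [clear_acc, fetch_tmp, add_tmp],
    "ADD": [fetch_tmp, add_tmp],
    "STORE": [store_acc],
}

def run(program, memory, control_store):
    """Execute (opcode, address) pairs by looking each opcode up in the control store."""
    m = new_machine(memory)
    for opcode, addr in program:
        for micro_op in control_store[opcode]:
            micro_op(m, addr)
    return m["mem"]

# Adding an instruction means editing the table, not the "hardware" above.
EXTENDED_STORE = dict(CONTROL_STORE, ADD2X=[fetch_tmp, add_tmp, add_tmp])

print(run([("LOAD", 0), ("ADD", 1), ("STORE", 2)], [2, 3, 0], CONTROL_STORE))
print(run([("LOAD", 0), ("ADD2X", 1), ("STORE", 2)], [2, 3, 0], EXTENDED_STORE))
```

In this sketch the second program uses an instruction (ADD2X) that the first machine does not have, yet nothing in the "hardware" functions changed; only the table did. That is the flexibility the microprogramming proposal was after.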

Robert Garner cites IBM's System/360 family of computers as essentially the first successful commercial attempt to develop a CPU with control units defined in firmware.[2] This decision allowed IBM to maintain a great deal of compatibility between the individual models of the System/360 series despite the huge differences in performance and pricing between high-end and low-end system packages. Commercially this was a decisive win for IBM: when their computing needs changed, customers tended to migrate from one pricing package to the next rather than purchase a competitor's system, so that they could take advantage of System/360 cross-compatibility. All the programs one had written on the 360/30 could run on the 40, the 50, the 65 and so on.

By 1977, when IBM stopped selling the System/360, there had been a total of 14 different models at various price points. The outrageous success of the System/360 was in large part due to the cross-compatibility provided by its firmware, as well as the fact that both field repairs and field upgrades on these machines could often be as simple as switching out the control stores--in effect the first of many happy "firmware upgrades." By the end of the 1960s firmware was firmly established as the direction of computer architecture.

Only One Problem...

The System/360 machines were a huge commercial success for IBM, but they were a far cry from the fastest computers being built at the time. The problem with firmware was essentially speed. The extra firmware layer that mediated between program and hardware incurred a significant speed cost compared with what more specially designed CPUs were capable of.

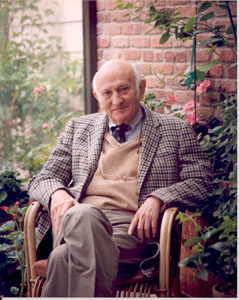

In 1975, while working on the IBM 801 and looking at the problem of how to make IBM's firmware less painful from a performance point of view, John Cocke proposed what would essentially become the "Reduced Instruction Set Computer" or "RISC" architecture.

Cocke and his research team had spent some time analyzing compiled code produced for IBM's System/370 machines (successors to the System/360s) and had noticed a troubling tendency: compilers did not necessarily select the best sequence of instructions to achieve a given task. Fundamentally, his idea was that the instruction set available to compilers was "too rich," in the sense that it was hard for compilers to choose the best instruction for a given operation. Cocke therefore proposed that the available instruction set be reduced down to "a set of primitives carefully chosen to exploit the fastest component of the storage hierarchy and provide instructions that can be generated easily by compilers."[3] It is important to note that, despite the ambiguity of the language, "reduced" does not necessarily imply "fewer." This is "reduced" in the cooking sense of "letting something reduce down." This is often a point of confusion about RISC, but it is perfectly acceptable for a RISC machine to have even more available instructions than a non-RISC machine, provided that those instructions have been boiled down to their most bare-bones primitive operations--i.e., such that each instruction costs only one CPU cycle.
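
As a rough illustration of what "boiled down" means, the toy sketch below expands one rich memory-to-memory instruction into register-level primitives. Everything in it is an assumption made for the example: the instruction names (ADDM, LOAD, ADD, STORE), the helpers `lower` and `execute`, and the flat one-cycle cost per primitive. It is not taken from the 801 or from any real instruction set.

```python
# Toy model only: instruction names and the one-cycle cost are illustrative assumptions.

def lower(instruction):
    """Expand a hypothetical memory-to-memory ADDM into register primitives."""
    op, *args = instruction
    if op == "ADDM":  # ADDM dst, a, b  means  mem[dst] = mem[a] + mem[b]
        dst, a, b = args
        return [
            ("LOAD", "r1", a),
            ("LOAD", "r2", b),
            ("ADD", "r3", "r1", "r2"),
            ("STORE", dst, "r3"),
        ]
    return [instruction]  # already a primitive, pass it through unchanged

def execute(primitives, mem):
    """Run primitives against a memory list; return the memory and a cycle count."""
    mem = list(mem)
    regs, cycles = {}, 0
    for op, *args in primitives:
        if op == "LOAD":
            regs[args[0]] = mem[args[1]]
        elif op == "ADD":
            regs[args[0]] = regs[args[1]] + regs[args[2]]
        elif op == "STORE":
            mem[args[0]] = regs[args[1]]
        else:
            raise ValueError(f"unknown primitive: {op}")
        cycles += 1  # assumed cost: exactly one cycle per primitive
    return mem, cycles

mem, cycles = execute(lower(("ADDM", 2, 0, 1)), [2, 3, 0])
print(mem, cycles)
```

Under these assumptions the single rich instruction becomes four primitives, each easy for a compiler to emit and each with a predictable cost, which is the trade the quoted passage describes.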

References

1. Opler, Ascher (January 1967). "Fourth-Generation Software". Datamation 13 (1): 22–24.

2. Garner, Robert (December 2011). Lecture given at San Jose State University.

3. Cocke, John (January 2000). "The Evolution of RISC Technology at IBM". IBM Journal of Research and Development 44 (1.2): Abstract.