Transistors and the Computer Revolution

If you ask someone who lived during the late 1950s or 1960s what they associated with the transistor, there is a good chance they’ll say “transistor radio.” And with good reason. The transistor radio revolutionized the way people listened to music, because it made radios smaller and portable. But, nice as a hand-held radio is, the real transistor revolution was taking place in the field of computers.

In a computer, the transistor is usually used as a switch rather than as an amplifier. Computers needed thousands, and later tens of thousands, of these switches to build the complicated logic circuits that allowed them to compute. Transistors replaced the earlier electron tubes (often called vacuum tubes) and made it possible to design much smaller, more reliable computers. They also helped satisfy the seemingly insatiable demand for speed.

The speed at which a computer can perform calculations depends heavily on how quickly its transistors can switch from “on” to “off.” In other words, the faster the transistors, the faster the computer. Researchers found that making transistors switch faster required making the transistors themselves smaller and smaller, because of the way electrons move through semiconductors: if there is less material to cross, the electrons get through it sooner and the transistor switches more quickly. Mass-production techniques eventually allowed nearly microscopic transistors to be produced by the thousands on round silicon wafers. The wafers were cut up into individual pieces, and each piece was mounted inside a package for easier handling and assembly. The packaged, individual transistors were then wired into circuits along with other components such as resistors and capacitors.

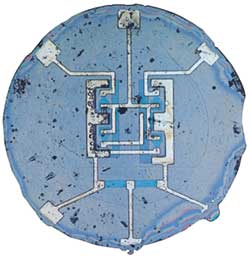

This device, developed by Robert Noyce in the late 1950s, was the first commercially available integrated circuit. Courtesy: Fairchild Semiconductor.

As computers were produced in larger numbers, some kinds of logic circuits became fairly standardized. Engineers reasoned that standard circuits should be designed as single units in order to make them more compact. There were many proposals for doing this. Among them, British engineer G. W. A. Dummer proposed making the entire circuit directly on a silicon wafer instead of assembling it from individual transistors and other components. Two engineers, Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor, independently invented such circuits, called integrated circuits, in 1958 and 1959 respectively.

Intel cofounder Gordon Moore talks about the effects of the integrated circuit on the computer industry. From the PBS documentary TRANSISTORIZED! copyright 1999, Twin Cities Public Television, Inc. & ScienCentral, Inc. Used with permission.

The first integrated circuits (ICs) were very simple and merely demonstrated the concept. But the idea of fabricating an entire circuit on a silicon wafer, or “chip,” in a single process was a real breakthrough. Early integrated circuits were so expensive that at first only the military bought them, because it could justify the cost for top-notch performance. A little later, however, integrated circuits were mass-produced, largely to meet the needs of NASA’s Apollo program and the United States’ missile programs. That shift to mass production revolutionized the design of computers.
