History of Lossless Data Compression Algorithms: Difference between revisions

Rihamichael (talk | contribs) No edit summary |

Rihamichael (talk | contribs) No edit summary |

||

| Line 38: | Line 38: | ||

#Determine the probability of each symbol using the symbol count. | #Determine the probability of each symbol using the symbol count. | ||

#Sort the symbols by probability, with the most probable first. | #Sort the symbols by probability, with the most probable first. | ||

#Generate leaf nodes for each symbol.<br> | #Generate leaf nodes for each symbol.<br> | ||

#Divide the list in two while keeping the probabilities of the left branch roughly equal to those on the right branch. | #Divide the list in two while keeping the probabilities of the left branch roughly equal to those on the right branch. | ||

#Append a 0 to symbols on the left, and 1 to symbols on the right. | #Append a 0 to symbols on the left, and 1 to symbols on the right. | ||

| Line 56: | Line 56: | ||

*Prepend 0 and 1 respectively to the nodes’ codes.<br> | *Prepend 0 and 1 respectively to the nodes’ codes.<br> | ||

*Create a new node with value equal to the sum of the nodes’ probability.<br> | *Create a new node with value equal to the sum of the nodes’ probability.<br> | ||

*Assign the first node to the left branch and the second node to the right branch. | *Assign the first node to the left branch and the second node to the right branch. | ||

*Add the node to the queue<br> | *Add the node to the queue<br> | ||

6. The last node remaining in the queue is the root of the Huffman tree. [10] | 6. The last node remaining in the queue is the root of the Huffman tree. [10] | ||

==== Arithmetic Coding <br> ==== | ==== Arithmetic Coding <br> ==== | ||

| Line 73: | Line 73: | ||

Input: AAABBCCCCDEEEEEEAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA | Input: AAABBCCCCDEEEEEEAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA | ||

Output: 3ABB4CD6E38A<br> | Output: 3ABB4CD6E38A<br> | ||

== Compression Algorithms<br> == | == Compression Algorithms<br> == | ||

| Line 203: | Line 203: | ||

==== <br> ==== | ==== <br> ==== | ||

More to come in the next two days. | More to come in the next two days.<br><br> | ||

[[Category:Data_compression]] | [[Category:Data_compression]] | ||

Revision as of 10:14, 11 December 2011

Introduction

There are two major categories of compression algorithms: lossy and lossless. Lossy compression algorithms involve the reduction of a file’s size usually by removing small details that require a large amount of data to store at full fidelity. In lossy compression, it is impossible to restore the original file due to the removal of essential data. Lossy compression is most commonly used to store image and audio data, and while it can achieve very high compression ratios through data removal, it is not covered in this article. Lossless data compression is the size reduction of a file, such that a decompression function can restore the original file exactly with no loss of data. Lossless data compression is used ubiquitously in computing, from saving space on your personal computer to sending data over the web, communicating over a secure shell, or viewing a PNG or GIF image.

The basic principle that lossless compression algorithms work on is that any non-random file will contain duplicated information that can be condensed using statistical modeling techniques that determine the probability of a character or phrase appearing. These statistical models can then be used to generate codes for specific characters or phrases based on their probability of occurring, and assigning the shortest codes to the most common data. Such techniques include entropy encoding, run-length encoding, and compression using a dictionary. Using these techniques and others, an 8-bit character or a string of such characters could be represented with just a few bits resulting in a large amount of redundant data being removed.

History

Data compression has only played a significant role in computing since the 1970s, when the Internet was becoming more popular and the Lempel-Ziv algorithms were invented, but it has a much longer history outside of computing. Morse code, invented in 1838, is the earliest instance of data compression in that the most common letter s in the English language such as “e” and “t” are given shorter Morse codes. Later, as mainframe computers were starting to take hold in 1949, Claude Shannon and Robert Fano invented a systematic method to assign codes based on a given block of data [1].

Two years later, David Huffman was studying information theory at MIT and had a class with Robert Fano. Fano gave the class the choice of writing a term paper or taking a final exam. Huffman chose the term paper, which was to be on finding the most efficient method of binary coding. After working for months and failing to come up with anything, Huffman was about to throw away all his work and start studying for the final exam in lieu of the paper. It was at that point that he had an epiphany, figuring out a very similar yet more efficient technique to Shannon-Fano coding. The key difference between Shannon-Fano coding and Huffman coding is that in the former the probability tree is built bottom-up, creating a suboptimal result, and in the latter it is built top-down [2].

Early implementations of Shannon-Fano and Huffman coding were done using hardware and hardcoded codes. It was not until the 1970s and the advent of the Internet and online storage that software compression was implemented that Huffman codes were dynamically generated based on the input data [1]. Later, in 1977, Abraham Lempel and Jacob Ziv published their groundbreaking LZ77 algorithm, the first algorithm to use a dictionary to compress data. More specifically, LZ77 used a dynamic dictionary oftentimes called a sliding window [3]. In 1978, the same duo published their LZ78 algorithm which also uses a dictionary; unlike LZ77, this algorithm parses the input data and generates a static dictionary rather than generating it dynamically [4].

Legal Issues

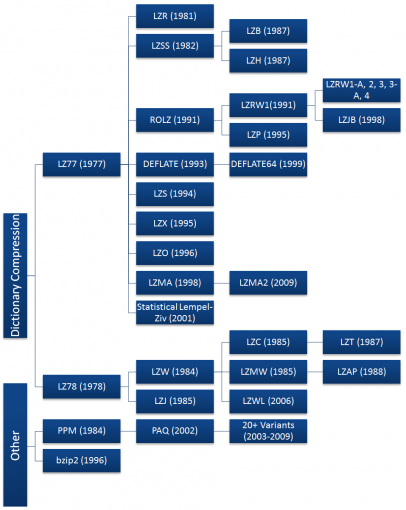

Both the LZ77 and LZ78 algorithms grew rapidly in popularity, spawning many variants shown in the diagram below. Most of these algorithms have died off since their invention, with just a handful seeing widespread use today including DEFLATE, LZMA, and LZX. Most of the commonly used algorithms are derived from the LZ77 algorithm. This is not due to technical superiority, but because LZ78 algorithms became patent-encumbered after Unisys patented the derivative LZW algorithm in 1984 and began suing software vendors, server admins, and even end users for using the GIF format without a license [5][6].

At the time, the UNIX compress utility used a very slight modification of the LZW algorithm called LZC and was later discontinued due to patent issues. Other UNIX developers also began to deviate from using the LZW algorithm in favor or open source ones. This led the UNIX community to adopt the DEFLATE-based gzip and the Burrows-Wheeler Transform-based bzip2 formats. In the long run, this was a benefit for the UNIX community because both the gzip and bzip2 formats nearly always achieve significantly higher compression ratios than the LZW format [6]. The patent issues surrounding LZW have since subsided, as the patent on the LZW algorithm expired in 2003 [5]. Despite this, the LZW algorithm has largely been replaced and is only commonly used in GIF compression. There have also been some LZW derivatives since then but they do not enjoy widespread use either and LZ77 algorithms remain dominant.

Another legal battle erupted in 1993 regarding the LZS algorithm. LZS was developed by Stac Electronics for use in disk compression software such as Stacker. Microsoft used the LZS algorithm in developing disk compression software that was released with MS-DOS 6.0 that purported to double the capacity of a hard drive. When Stac Electronics found out its intellectual property was being used, it filed suit against Microsoft. Microsoft was later found guilty of patent infringement and ordered to pay Stac Electronics $120 million in damages minus $13.6 million awarded in a countersuit finding that Microsoft’s infringement was not willful [7]. Although Stac Electronics v. Microsoft had a large judgment, it did not impede the development of Lempel-Ziv algorithms as the LZW patent dispute did. The only consequence seems to be that LZS has not been forked into any new algorithms.

Compression Techniques

Many different techniques are used to compress data. Most compression techniques cannot stand on their own, but must be combined together to form a compression algorithm. Those that can stand alone are often more effective when joined together with other compression techniques. Most of these techniques fall under the category of entropy coders, but there are others such as Run-Length Encoding and the Burrows-Wheeler Transform that are also commonly used.

Entropy Encoding

Entropy in data compression means the smallest number of bits needed, on average, to represent a symbol or literal. A basic entropy coder combines a statistical model and a coder. The input file is parsed and used to generate a statistical model that consists of the probabilities of a given symbol appearing. Then, the coder will use the statistical model to determine what bit-or-bytecodes to assign to each symbol such that the most common symbols have the shortest codes and the least common symbols have the longest codes [8].

Shannon-Fano Coding

This is one of the earliest compression techniques, invented in 1949 by Claude Shannon and Robert Fano. This technique involves generating a binary tree to represent the probabilities of each symbol occurring, where each branch to the left represents a 0 and each branch to the right represents a 1. For example, if “A” is two branches to the left and one to the right its code would be “001.” Shannon-Fano coding does not always produce optimal codes due to the way it builds the binary tree from the bottom up. For this reason, Huffman coding is used instead as it will generate an optimal code for any given input.

The algorithm to generate Shannon-Fano codes is fairly simple:

- Parse the input, counting the occurrence of each symbol.

- Determine the probability of each symbol using the symbol count.

- Sort the symbols by probability, with the most probable first.

- Generate leaf nodes for each symbol.

- Divide the list in two while keeping the probabilities of the left branch roughly equal to those on the right branch.

- Append a 0 to symbols on the left, and 1 to symbols on the right.

- Recursively apply steps 5 and 6 until each node is a leaf in the tree. [9]

Huffman Coding

Huffman Coding is another variant of entropy coding that works in a very similar manner to Shannon-Fano Coding, but the binary tree is built from the top down to generate an optimal result.

The algorithm to generate Huffman codes shares its first steps with Shannon-Fano:

- Parse the input, counting the occurrence of each symbol.

- Determine the probability of each symbol using the symbol count.

- Sort the symbols by probability, with the most probable first.

- Generate leaf nodes for each symbol, including P, and add them to a queue.

- While (Nodes in Queue > 1)

- Remove the two lowest probability nodes from the queue.

- Prepend 0 and 1 respectively to the nodes’ codes.

- Create a new node with value equal to the sum of the nodes’ probability.

- Assign the first node to the left branch and the second node to the right branch.

- Add the node to the queue

6. The last node remaining in the queue is the root of the Huffman tree. [10]

Arithmetic Coding

Range Coding

Run-Length Coding

Run-Length Coding is a very simple compression technique that replaces strings of more than two of the same character with a number followed by a letter representing the original data. The reason it only replaces strings of more than two characters is that replacing an input such as “AA” with “2A” is still the same length. RLE is useful for highly-redundant data, indexed images with many pixels of the same color in a row, or in combination with other compression techniques like the Burrows-Wheeler Transform.

Here is a quick example of RLE:

Input: AAABBCCCCDEEEEEEAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

Output: 3ABB4CD6E38A

Compression Algorithms

Sliding Window Algorithms

LZ77

blah

LZR

blah

DEFLATE

blah

DEFLATE64

blah

LZSS

blah

LZH

blah

LZB

blah

ROLZ

blah

LZP

blah

LZRW

blah

LZJB

blah

LZS

blah

LZX

blah

LZP

blah

LZO

blah

LZMA

blah

LZMA2

blah

Statistical Lempel-Ziv

Dictionary Algorithms

LZ78

blah

LZW

blah

LZMW

blah

LZAP

blah

LZWL

blah

LZJ

blah

LZFG

blah

Non-dictionary Algorithms

PPM

blah

bzip2

blah

PAQ

blah

PAQ8O

blah

ZPAQ

blah

More to come in the next two days.