History of Lossless Data Compression Algorithms: Difference between revisions

Rihamichael (talk | contribs) No edit summary |

No edit summary |

||

| (49 intermediate revisions by 3 users not shown) | |||

| Line 3: | Line 3: | ||

There are two major categories of compression algorithms: lossy and lossless. Lossy compression algorithms involve the reduction of a file’s size usually by removing small details that require a large amount of data to store at full fidelity. In lossy compression, it is impossible to restore the original file due to the removal of essential data. Lossy compression is most commonly used to store image and audio data, and while it can achieve very high compression ratios through data removal, it is not covered in this article. Lossless data compression is the size reduction of a file, such that a decompression function can restore the original file exactly with no loss of data. Lossless data compression is used ubiquitously in computing, from saving space on your personal computer to sending data over the web, communicating over a secure shell, or viewing a PNG or GIF image. | There are two major categories of compression algorithms: lossy and lossless. Lossy compression algorithms involve the reduction of a file’s size usually by removing small details that require a large amount of data to store at full fidelity. In lossy compression, it is impossible to restore the original file due to the removal of essential data. Lossy compression is most commonly used to store image and audio data, and while it can achieve very high compression ratios through data removal, it is not covered in this article. Lossless data compression is the size reduction of a file, such that a decompression function can restore the original file exactly with no loss of data. Lossless data compression is used ubiquitously in computing, from saving space on your personal computer to sending data over the web, communicating over a secure shell, or viewing a PNG or GIF image. | ||

The basic principle that lossless compression algorithms work on is that any non-random file will contain duplicated information that can be condensed using statistical modeling techniques that determine the probability of a character or phrase appearing. These statistical models can then be used to generate codes for specific characters or phrases based on their probability of occurring, and assigning the shortest codes to the most common data. Such techniques include entropy encoding, run-length encoding, and compression using a dictionary. Using these techniques and others, an 8-bit character or a string of such characters could be represented with just a few bits resulting in a large amount of redundant data being removed. | The basic principle that lossless compression algorithms work on is that any non-random file will contain duplicated information that can be condensed using statistical modeling techniques that determine the probability of a character or phrase appearing. These statistical models can then be used to generate codes for specific characters or phrases based on their probability of occurring, and assigning the shortest codes to the most common data. Such techniques include entropy encoding, run-length encoding, and compression using a dictionary. Using these techniques and others, an 8-bit character or a string of such characters could be represented with just a few bits resulting in a large amount of redundant data being removed. | ||

== History | == History == | ||

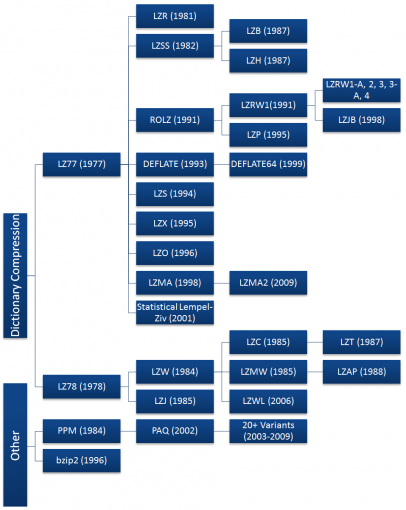

[[Image:Compression hierarchy.png|thumb|right|406x543px|A Hierarchy of Lossless Compression Algorithms]] | |||

Data compression has only played a significant role in computing since the 1970s, when the Internet was becoming more popular and the Lempel-Ziv algorithms were invented, but it has a much longer history outside of computing. Morse code, invented in 1838, is an early example of data compression in that the most common letters in the English language such as “e” and “t” are given shorter Morse codes. Later, as mainframe computers were starting to take hold in 1949, Claude Shannon and Robert Fano invented Shannon-Fano coding. Their algorithm assigns codes to symbols in a given block of data based on the probability of the symbol occurring. The length of a symbol's code is inversely related to the probability of that symbol occurring, so the most common symbols receive the shortest codes, resulting in a shorter way to represent the data. <ref name="refnum1">Wolfram, Stephen. A New Kind of Science. Champaign, IL: Wolfram Media, 2002. 1069. Print.</ref> | |||

Two years later, David Huffman was studying information theory at MIT and had a class with Robert Fano. Fano gave the class the choice of writing a term paper or taking a final exam. Huffman chose the term paper, which was to be on finding the most efficient method of binary coding. After working for months and failing to come up with anything, Huffman was about to throw away all his work and start studying for the final exam in lieu of the paper. It was at that point that he had an epiphany, figuring out a technique very similar to Shannon-Fano coding yet more efficient. The key difference between Shannon-Fano coding and Huffman coding is that in the former the probability tree is built top-down, which can create a suboptimal result, and in the latter it is built bottom-up, which always yields an optimal prefix code.<ref name="refnum2">Ken Huffman. Profile: David A. Huffman, Scientific American, September 1991, pp. 54–58.</ref> | |||

=== | Early implementations of Shannon-Fano and Huffman coding were done using hardware and hardcoded codes. It was not until the 1970s, with the advent of the Internet and online storage, that software compression was implemented and Huffman codes were dynamically generated based on the input data.<ref name="refnum1" /> Later, in 1977, Abraham Lempel and Jacob Ziv published their groundbreaking LZ77 algorithm, the first algorithm to use a dictionary to compress data. More specifically, LZ77 used a dynamic dictionary oftentimes called a sliding window.<ref name="refnum3">Ziv J., Lempel A., “A Universal Algorithm for Sequential Data Compression”, IEEE Transactions on Information Theory, Vol. 23, No. 3 (1977), pp. 337-343. </ref> In 1978, the same duo published their LZ78 algorithm which also uses a dictionary; unlike LZ77, which refers back into a sliding window of recent input, this algorithm parses the input data and builds an explicit dictionary of the phrases it has already seen.<ref name="refnum4">Ziv J., Lempel A., “Compression of Individual Sequences via Variable-Rate Coding”, IEEE Transactions on Information Theory, Vol. 24, No. 5, pp. 530-536. </ref> | ||

=== Legal Issues === | |||

Both the LZ77 and LZ78 algorithms grew rapidly in popularity, spawning many variants shown in the diagram to the right. Most of these algorithms have died off since their invention, with just a handful seeing widespread use today including DEFLATE, LZMA, and LZX. Most of the commonly used algorithms are derived from the LZ77 algorithm. This is not due to technical superiority, but because LZ78 algorithms became patent-encumbered after Sperry patented the derivative LZW algorithm in 1984 and began suing software vendors, server admins, and even end users for using the GIF format without a license. <ref name="refnum5">USPTO Patent #4814746. See http://www.theregister.co.uk/1999/09/01/unisys_demands_5k_licence_fee</ref><ref name="refnum6">http://stephane.lesimple.fr/wiki/blog/lzop_vs_compress_vs_gzip_vs_bzip2_vs_lzma_vs_lzma2-xz_benchmark_reloaded</ref> | |||

At the time, the [[UNIX]] compress utility used a very slight modification of the LZW algorithm called LZC and was later discontinued due to patent issues. Other UNIX developers also began to move away from the LZW algorithm in favor of open source ones. This led the UNIX community to adopt the DEFLATE-based gzip and the Burrows-Wheeler Transform-based bzip2 formats. In the long run, this was a benefit for the UNIX community because both the gzip and bzip2 formats nearly always achieve significantly higher compression ratios than the LZW format.<ref name="refnum6" /> The patent issues surrounding LZW have since subsided, as the patent on the LZW algorithm expired in 2003.<ref name="refnum5" /> Despite this, the LZW algorithm has largely been replaced and is only commonly used in GIF compression. There have also been some LZW derivatives since then, but they do not enjoy widespread use either, and LZ77 algorithms remain dominant. | |||

=== The Rise of | Another legal battle erupted in 1993 regarding the LZS algorithm. LZS was developed by Stac Electronics for use in disk compression software such as Stacker. Microsoft used the LZS algorithm in developing disk compression software that was released with MS-DOS 6.0 that purported to double the capacity of a hard drive. When Stac Electronics found out its intellectual property was being used, it filed suit against Microsoft. Microsoft was later found guilty of patent infringement and ordered to pay Stac Electronics $120 million in damages minus $13.6 million awarded in a countersuit finding that Microsoft’s infringement was not willful.<ref name="refnum7">http://www.msversus.org/archive/stac.html</ref> Although ''Stac Electronics v. Microsoft'' had a large judgment, it did not impede the development of Lempel-Ziv algorithms as the LZW patent dispute did. The only consequence seems to be that LZS has not been forked into any new algorithms. | ||

=== The Rise of Deflate === | |||

Corporations and other large entities have used data compression since the Lempel-Ziv algorithms were published as they have ever-increasing storage needs and data compression allows them to meet those needs. However, data compression did not see widespread use until the Internet began to take off toward the late 1980s when a need for data compression emerged. Bandwidth was either limited, expensive, or both, and data compression helped to alleviate these bottlenecks. Compression became especially important when the World Wide Web was developed as people began to share more images and other formats which are considerably larger than text. To meet the demand, several new file formats were developed incorporating compression including ZIP, GIF, and PNG. | Corporations and other large entities have used data compression since the Lempel-Ziv algorithms were published as they have ever-increasing storage needs and data compression allows them to meet those needs. However, data compression did not see widespread use until the Internet began to take off toward the late 1980s when a need for data compression emerged. Bandwidth was either limited, expensive, or both, and data compression helped to alleviate these bottlenecks. Compression became especially important when the World Wide Web was developed as people began to share more images and other formats which are considerably larger than text. To meet the demand, several new file formats were developed incorporating compression including ZIP, GIF, and PNG. | ||

Thom Henderson released the first commercially successful archive format called ARC in 1985 through his company, System Enhancement Associates. ARC was especially popular in the BBS community, since it was one of the first programs capable of both bundling and compressing files and it was also made open source. The ARC format uses a modification to the LZW algorithm to compress data. A man named Phil Katz noticed ARC's popularity and decided to improve it by writing the compression and decompression routines in assembly language. He released his PKARC program as shareware in 1987 and was later sued by Henderson for copyright infringement. He was found guilty and forced to pay royalties and other penalties as part of a cross-licensing agreement. He was found guilty because PKARC was a blatant copy of ARC; in some instances even the typos in the comments were identical [ | Thom Henderson released the first commercially successful archive format called ARC in 1985 through his company, System Enhancement Associates. ARC was especially popular in the BBS community, since it was one of the first programs capable of both bundling and compressing files and it was also made open source. The ARC format uses a modification to the LZW algorithm to compress data. A man named Phil Katz noticed ARC's popularity and decided to improve it by writing the compression and decompression routines in assembly language. He released his PKARC program as shareware in 1987 and was later sued by Henderson for copyright infringement. He was found guilty and forced to pay royalties and other penalties as part of a cross-licensing agreement. He was found guilty because PKARC was a blatant copy of ARC; in some instances even the typos in the comments were identical. <ref name="refnum16">[http://www.esva.net/~thom/philkatz.html ARC Info] </ref> | ||

Phil Katz could no longer sell PKARC after 1988 due to the cross-licensing agreement, so in 1989 he created a tweaked version of PKARC that is now known as the ZIP format. As a result of its use of LZW, it was considered patent encumbered and Katz later chose to switch to the new IMPLODE algorithm. The format was again updated in 1993, when Katz released PKZIP 2.0, which implemented the DEFLATE algorithm as well as other features like split volumes. This version of the ZIP format is found ubiquitously today, as almost all .zip files follow the PKZIP 2.0 format despite its great age. | Phil Katz could no longer sell PKARC after 1988 due to the cross-licensing agreement, so in 1989 he created a tweaked version of PKARC that is now known as the ZIP format. As a result of its use of LZW, it was considered patent encumbered and Katz later chose to switch to the new IMPLODE algorithm. The format was again updated in 1993, when Katz released PKZIP 2.0, which implemented the DEFLATE algorithm as well as other features like split volumes. This version of the ZIP format is found ubiquitously today, as almost all .zip files follow the PKZIP 2.0 format despite its great age. | ||

The GIF, or Graphics Interchange Format, was developed by CompuServe in 1987 to allow bitmaps to be shared without data loss (although the format is limited to 256 colors per frame), while substantially reducing the file size to allow transmission over | The GIF, or Graphics Interchange Format, was developed by CompuServe in 1987 to allow bitmaps to be shared without data loss (although the format is limited to 256 colors per frame), while substantially reducing the file size to allow transmission over dialup modems. However, like the ZIP format, GIF is also based on the LZW algorithm. Despite being patent encumbered, Unisys was unable to enforce their patents adequately enough to stop the format from spreading. Even now, over 20 years later, the GIF remains in use especially for its capability of being animated. <ref name="refnum13">http://www.faqs.org/faqs/compression-faq/part1/section-7.html</ref> | ||

Although GIF could not be stopped, CompuServe sought a format unencumbered by patents and in 1994 introduced the Portable Network Graphics (PNG) format. Like ZIP, the PNG standard uses the DEFLATE algorithm to perform compression. Although DEFLATE was patented by Katz | Although GIF could not be stopped, CompuServe sought a format unencumbered by patents and in 1994 introduced the Portable Network Graphics (PNG) format. Like ZIP, the PNG standard uses the DEFLATE algorithm to perform compression. Although DEFLATE was patented by Katz<ref name="refnum14">USPTO Patent #5051745 </ref> the patent was never enforced and thus PNG and other DEFLATE-based formats avoid infringing on patents. Although LZW took off in the early days of compression, due to Unisys's litigious nature it has more or less died off in favor of the faster and more efficient DEFLATE algorithm. DEFLATE is currently the most used data compression algorithm since it is a bit like the Swiss Army knife of compression. | ||

Beyond its use in the PNG and ZIP formats, DEFLATE is also used very frequently elsewhere in computing. For example, the gzip (.gz) file format uses DEFLATE since it is essentially an open source version of ZIP. Other uses of DEFLATE include HTTP, SSL, and other technologies designed for efficient data compression over a network. | Beyond its use in the PNG and ZIP formats, DEFLATE is also used very frequently elsewhere in computing. For example, the gzip (.gz) file format uses DEFLATE since it is essentially an open source version of ZIP. Other uses of DEFLATE include HTTP, SSL, and other technologies designed for efficient data compression over a network. | ||

Sadly, Phil Katz did not live long enough to see his DEFLATE algorithm take over the computing world. He suffered from alcoholism for several years and his life began to fall apart in the late 1990s, having been arrested several times for drunk driving and other violations. Katz was found dead in a hotel room on April 14, 2000, at the age of 37. The cause of death was found to be acute pancreatic bleeding from his alcoholism, brought on by the many empty bottles of liquor found near his body [ | Sadly, Phil Katz did not live long enough to see his DEFLATE algorithm take over the computing world. He suffered from alcoholism for several years and his life began to fall apart in the late 1990s, having been arrested several times for drunk driving and other violations. Katz was found dead in a hotel room on April 14, 2000, at the age of 37. The cause of death was found to be acute pancreatic bleeding from his alcoholism, brought on by the many empty bottles of liquor found near his body. <ref name="refnum15">[http://www.bbsdocumentary.com/library/CONTROVERSY/LAWSUITS/SEA/katzbio.txt Phil Katz' Death] </ref> | ||

=== Current Archival Software | === Current Archival Software === | ||

The ZIP format and other DEFLATE-based formats were king up until the mid 1990s when new and improved formats began to emerge. In 1993, Eugene Roshal released his archiver known as WinRAR which utilizes the proprietary RAR format. The latest version of RAR uses a combination of the PPM and LZSS algorithms, but not much is known about earlier implementations. RAR has become a standard format for sharing files over the Internet, specifically in the distribution of pirated media. An open-source implementation of the Burrows-Wheeler Transform called bzip2 was introduced in 1996 and rapidly grew in popularity on the UNIX platform against the DEFLATE-based gzip format. Another open-source compression program was released in 1999 as the 7-Zip or .7z format. 7-Zip could be the first format to challenge the dominance of ZIP and RAR due to its generally high compression ratio and the format's modularity and openness. This format is not limited to using one compression algorithm, but can instead choose between bzip2, LZMA, LZMA2, and PPMd algorithms among others. Finally, on the bleeding edge of archival software are the PAQ* formats. The first PAQ format was released by Matt Mahoney in 2002, called PAQ1. PAQ | The ZIP format and other DEFLATE-based formats were king up until the mid 1990s when new and improved formats began to emerge. In 1993, Eugene Roshal released his archiver known as WinRAR which utilizes the proprietary RAR format. The latest version of RAR uses a combination of the PPM and LZSS algorithms, but not much is known about earlier implementations. RAR has become a standard format for sharing files over the Internet, specifically in the distribution of pirated media. An open-source implementation of the Burrows-Wheeler Transform called bzip2 was introduced in 1996 and rapidly grew in popularity on the UNIX platform against the DEFLATE-based gzip format. Another open-source compression program was released in 1999 as the 7-Zip or .7z format. 
7-Zip could be the first format to challenge the dominance of ZIP and RAR due to its generally high compression ratio and the format's modularity and openness. This format is not limited to using one compression algorithm, but can instead choose between bzip2, LZMA, LZMA2, and PPMd algorithms among others. Finally, on the bleeding edge of archival software are the PAQ* formats. The first PAQ format was released by Matt Mahoney in 2002, called PAQ1. PAQ substantially improves on the PPM algorithm by using a technique known as context mixing which combines two or more statistical models to generate a better prediction of the next symbol than either of the models on their own. | ||

== Future Developments == | == Future Developments == | ||

The future is never certain, but based on current trends some predictions can be made as to what may happen in the future of data compression. Context Mixing algorithms such as PAQ and its variants have started to attract popularity, and they tend to achieve the highest compression ratios but are usually slow. With the exponential increase in hardware speed following Moore's Law, context mixing algorithms will likely flourish as the speed penalties are overcome through faster hardware due to their high compression ratio. The algorithm that PAQ sought to improve, called Prediction by Partial Matching (PPM) may also see new variants. Finally, the Lempel-Ziv Markov chain Algorithm (LZMA) has consistently shown itself to have an excellent compromise between speed and high compression ratio and will likely spawn more variants in the future. LZMA may even be the "winner" as it is further developed, having already been adopted in numerous competing compression formats since it was introduced with the 7-Zip format. Another potential development is the use of compression via substring enumeration (CSE) which is an up-and-coming compression technique that has not seen many software implementations yet. In its naive form it performs similarly to bzip2 and PPM, and researchers have been working to improve its efficiency | The future is never certain, but based on current trends some predictions can be made as to what may happen in the future of data compression. Context Mixing algorithms such as PAQ and its variants have started to attract popularity, and they tend to achieve the highest compression ratios but are usually slow. With the exponential increase in hardware speed following Moore's Law, context mixing algorithms will likely flourish as the speed penalties are overcome through faster hardware due to their high compression ratio. The algorithm that PAQ sought to improve, called Prediction by Partial Matching (PPM) may also see new variants. 
Finally, the Lempel-Ziv Markov chain Algorithm (LZMA) has consistently shown itself to have an excellent compromise between speed and high compression ratio and will likely spawn more variants in the future. LZMA may even be the "winner" as it is further developed, having already been adopted in numerous competing compression formats since it was introduced with the 7-Zip format. Another potential development is the use of compression via substring enumeration (CSE) which is an up-and-coming compression technique that has not seen many software implementations yet. In its naive form it performs similarly to bzip2 and PPM, and researchers have been working to improve its efficiency.<ref name="refnum20">Iwata, K., Arimura, M., and Shima, Y., "An Improvement in Lossless Data Compression via Substring Enumeration", , 2011 IEEE/ACIS 10th International Conference on Computer and Information Science (ICIS).</ref> | ||

== Compression Techniques | == Compression Techniques == | ||

Many different techniques are used to compress data. Most compression techniques cannot stand on their own, but must be combined together to form a compression algorithm. Those that can stand alone are often more effective when joined together with other compression techniques. Most of these techniques fall under the category of entropy coders, but there are others such as Run-Length Encoding and the Burrows-Wheeler Transform that are also commonly used. | Many different techniques are used to compress data. Most compression techniques cannot stand on their own, but must be combined together to form a compression algorithm. Those that can stand alone are often more effective when joined together with other compression techniques. Most of these techniques fall under the category of entropy coders, but there are others such as Run-Length Encoding and the Burrows-Wheeler Transform that are also commonly used. | ||

=== Run-Length Encoding === | === Run-Length Encoding === | ||

| Line 57: | Line 59: | ||

Input: AAABBCCCCDEEEEEEAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA | Input: AAABBCCCCDEEEEEEAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA | ||

Output: 3A2B4C1D6E38A | Output: 3A2B4C1D6E38A | ||
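The input/output pair above can be reproduced with a short sketch. This is a minimal illustration rather than a production encoder: the function names are made up for this example, and the decoder assumes the original data contains no digit characters, since counts and symbols share one string.

```python
from itertools import groupby


def rle_encode(text: str) -> str:
    """Replace each run of identical symbols with '<count><symbol>'."""
    return "".join(f"{len(list(run))}{symbol}" for symbol, run in groupby(text))


def rle_decode(encoded: str) -> str:
    """Invert rle_encode. Assumes the original symbols are never digits."""
    pieces = []
    count = ""
    for ch in encoded:
        if ch.isdigit():
            count += ch  # counts may span several digits, e.g. "38"
        else:
            pieces.append(ch * int(count))
            count = ""
    return "".join(pieces)


if __name__ == "__main__":
    sample = "AAABBCCCCDEEEEEE" + "A" * 38
    print(rle_encode(sample))  # 3A2B4C1D6E38A
```

Note that a run of length one still costs two characters ("1D"), which is why RLE on its own can enlarge data that has few runs.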

=== Burrows-Wheeler Transform | === Burrows-Wheeler Transform === | ||

The Burrows-Wheeler Transform is a compression technique that aims to reversibly transform a block of input data such that the amount of runs of identical characters is maximized. The BWT itself does not perform any compression operations, it simply transforms the input such that it can be more efficiently coded by a Run-Length Encoder or other secondary compression technique. | The Burrows-Wheeler Transform is a compression technique invented in 1994 that aims to reversibly transform a block of input data such that the amount of runs of identical characters is maximized. The BWT itself does not perform any compression operations, it simply transforms the input such that it can be more efficiently coded by a Run-Length Encoder or other secondary compression technique. | ||

The algorithm for a BWT is simple: | The algorithm for a BWT is simple: | ||

| Line 68: | Line 70: | ||

#Generate all possible rotations of the input string, storing each in the array. | #Generate all possible rotations of the input string, storing each in the array. | ||

#Sort the array alphabetically. | #Sort the array alphabetically. | ||

#Return the last column of the array | #Return the last column of the array.<ref name="refnum29">Burrows M., and Wheeler, D. J. 1994. A Block-Sorting Lossless Data Compression Algorithm. SRC Research Report 124, Digital Systems Research Center.</ref> | ||

BWT usually works best on long inputs with many alternating identical characters. Here is an example of the algorithm being run on an ideal input. Note that & is an End of File character: | BWT usually works best on long inputs with many alternating identical characters. Here is an example of the algorithm being run on an ideal input. Note that & is an End of File character: | ||

| Line 103: | Line 105: | ||

|} | |} | ||

Because of its alternating identical characters, performing the BWT on this input generates an optimal result that another algorithm could further compress, such as RLE which would yield "3H&3A". While this example generated an optimal result, it does not generate optimal results on real-world data. | Because of its alternating identical characters, performing the BWT on this input generates an optimal result that another algorithm could further compress, such as RLE which would yield "3H&3A". While this example generated an optimal result, it does not generate optimal results on most real-world data. | ||
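The three steps listed earlier can be sketched naively as follows. Two things here are assumptions rather than part of the description: the sample input "HAHAHA&" (chosen because its transform run-length encodes to "3H&3A", as mentioned above) and the rule that the End of File character sorts after every other symbol. The helper names are hypothetical, and real implementations use suffix arrays instead of materialising every rotation.

```python
EOF = "&"  # End of File marker; assumed to occur exactly once, at the end


def _sort_key(text: str):
    # Assumption: the EOF marker sorts after every ordinary symbol.
    return [(1, "") if ch == EOF else (0, ch) for ch in text]


def bwt(text: str) -> str:
    """Naive Burrows-Wheeler Transform: rotate, sort, take the last column."""
    if text.count(EOF) != 1 or not text.endswith(EOF):
        raise ValueError("input must end with a single EOF marker")
    rotations = [text[i:] + text[:i] for i in range(len(text))]
    rotations.sort(key=_sort_key)
    return "".join(rotation[-1] for rotation in rotations)


def inverse_bwt(last_column: str) -> str:
    """Rebuild the sorted rotation table one column at a time."""
    table = [""] * len(last_column)
    for _ in range(len(last_column)):
        table = sorted(
            (ch + row for ch, row in zip(last_column, table)), key=_sort_key
        )
    # The original text is the only rotation that ends with the EOF marker.
    return next(row for row in table if row.endswith(EOF))


if __name__ == "__main__":
    print(bwt("HAHAHA&"))  # HHH&AAA
```

The inverse shows why the transform is reversible without storing the sorted table: the last column alone is enough to rebuild it.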

=== Entropy Encoding | === Entropy Encoding === | ||

Entropy in data compression means the smallest number of bits needed, on average, to represent a symbol or literal. A basic entropy coder combines a statistical model and a coder. The input file is parsed and used to generate a statistical model that consists of the probabilities of a given symbol appearing. Then, the coder will use the statistical model to determine what bit-or-bytecodes to assign to each symbol such that the most common symbols have the shortest codes and the least common symbols have the longest codes | Entropy in data compression means the smallest number of bits needed, on average, to represent a symbol or literal. A basic entropy coder combines a statistical model and a coder. The input file is parsed and used to generate a statistical model that consists of the probabilities of a given symbol appearing. Then, the coder will use the statistical model to determine what bit-or-bytecodes to assign to each symbol such that the most common symbols have the shortest codes and the least common symbols have the longest codes.<ref name="refnum8">http://www.cs.tau.ac.il/~dcor/Graphics/adv-slides/entropy.pdf</ref> | ||
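As a rough illustration of the definition above, the following sketch estimates the entropy of a message from its own symbol frequencies, using Shannon's formula H = -Σ p·log2(p). The function name is invented for this example, and treating each symbol as independent (an order-0 model) is an assumption that real compressors usually improve on.

```python
import math
from collections import Counter


def shannon_entropy(data: str) -> float:
    """Average number of bits per symbol under an order-0 frequency model."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return abs(-sum((n / total) * math.log2(n / total) for n in counts.values()))


if __name__ == "__main__":
    print(shannon_entropy("AABB"))  # 1.0 bit per symbol
    print(shannon_entropy("ABCD"))  # 2.0 bits per symbol
```

Multiplying the result by the message length gives a lower bound on the output size of any coder that uses the same statistical model.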

==== Shannon-Fano Coding | ==== Shannon-Fano Coding ==== | ||

This is one of the earliest compression techniques, invented in 1949 by Claude Shannon and Robert Fano. This technique involves generating a binary tree to represent the probabilities of each symbol occurring. The symbols are ordered such that the most frequent symbols appear at the top of the tree and the least likely symbols appear at the bottom. | This is one of the earliest compression techniques, invented in 1949 by Claude Shannon and Robert Fano. This technique involves generating a binary tree to represent the probabilities of each symbol occurring. The symbols are ordered such that the most frequent symbols appear at the top of the tree and the least likely symbols appear at the bottom. | ||

The code for a given symbol is obtained by searching for it in the Shannon-Fano tree, and appending to the code a value of 0 or 1 for each left or right branch taken, respectively. For example, if “A” is two branches to the left and one to the right its code would be | The code for a given symbol is obtained by searching for it in the Shannon-Fano tree, and appending to the code a value of 0 or 1 for each left or right branch taken, respectively. For example, if “A” is two branches to the left and one to the right its code would be “001<sub>2</sub>”. Shannon-Fano coding does not always produce optimal codes due to the way it builds the binary tree from the top down. For this reason, Huffman coding is used instead as it generates an optimal prefix code for any given set of symbol probabilities. | ||

The algorithm to generate Shannon-Fano codes is fairly simple | The algorithm to generate Shannon-Fano codes is fairly simple | ||

| Line 123: | Line 125: | ||

#Divide the list in two while keeping the probability of the left branch roughly equal to those on the right branch. | #Divide the list in two while keeping the probability of the left branch roughly equal to those on the right branch. | ||

#Prepend 0 and 1 to the left and right nodes' codes, respectively. | #Prepend 0 and 1 to the left and right nodes' codes, respectively. | ||

#Recursively apply steps 5 and 6 to the left and right subtrees until each node is a leaf in the tree. | #Recursively apply steps 5 and 6 to the left and right subtrees until each node is a leaf in the tree.<ref name="refnum9">Shannon, C.E. (July 1948). "A Mathematical Theory of Communication". Bell System Technical Journal 27: 379–423. </ref> | ||
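A minimal sketch of the procedure follows. The earlier steps of the list are not shown in this excerpt, so counting frequencies and sorting the symbols from most to least frequent is an assumption here, as are the function name and the tie-breaking rule. The sketch appends a bit at each split while descending, which yields the same codes as labelling the branches of the finished tree.

```python
from collections import Counter


def shannon_fano_codes(text: str) -> dict:
    """Assign prefix codes by recursively halving a frequency-sorted list."""
    # Assumed preparation: count symbols, most frequent first (ties by symbol).
    symbols = sorted(Counter(text).items(), key=lambda kv: (-kv[1], kv[0]))
    codes = {symbol: "" for symbol, _ in symbols}

    def split(group):
        if len(group) < 2:
            return  # a single symbol is a leaf
        total = sum(weight for _, weight in group)
        running, best_cut, best_diff = 0, 1, total
        # Find the cut that makes both halves as equal in weight as possible.
        for i in range(1, len(group)):
            running += group[i - 1][1]
            diff = abs(total - 2 * running)
            if diff < best_diff:
                best_diff, best_cut = diff, i
        left, right = group[:best_cut], group[best_cut:]
        for symbol, _ in left:
            codes[symbol] += "0"
        for symbol, _ in right:
            codes[symbol] += "1"
        split(left)
        split(right)

    split(symbols)
    return codes


if __name__ == "__main__":
    print(shannon_fano_codes("AAAABBBCCD"))
    # {'A': '0', 'B': '10', 'C': '110', 'D': '111'}
```

With only one distinct symbol the sketch returns an empty code for it; a practical coder would special-case that input.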

==== Huffman Coding | ==== Huffman Coding ==== | ||

Huffman Coding is another variant of entropy coding that works in a very similar manner to Shannon-Fano Coding, but the binary tree is built from the bottom up to generate an optimal result. | Huffman Coding is another variant of entropy coding that works in a very similar manner to Shannon-Fano Coding, but the binary tree is built from the bottom up to generate an optimal result. | ||

| Line 137: | Line 139: | ||

#While (Nodes in Queue > 1) | #While (Nodes in Queue > 1) | ||

*Remove the two lowest probability nodes from the queue. | *Remove the two lowest probability nodes from the queue. | ||

*Prepend 0 and 1 to the left and right nodes' codes, respectively. | *Prepend 0 and 1 to the left and right nodes' codes, respectively. | ||

*Create a new node with value equal to the sum of the nodes’ probability. | *Create a new node with value equal to the sum of the nodes’ probability. | ||

*Assign the first node to the left branch and the second node to the right branch. | *Assign the first node to the left branch and the second node to the right branch. | ||

*Add the node to the queue | *Add the node to the queue | ||

6. The last node remaining in the queue is the root of the Huffman tree. | 6. The last node remaining in the queue is the root of the Huffman tree.<ref name="refnum10">HUFFMAN, D. A. 1952. A method for the construction of minimum-redundancy codes. In Proceedings of the Institute of Electrical and Radio Engineers 40, 9 (Sept.), pp. 1098-1101. </ref> | ||
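The queue-based procedure above can be sketched with Python's standard `heapq` module. The function name `huffman_codes` and the use of a counter to break ties between equal weights are assumptions for illustration. Instead of explicit tree nodes, each queue entry carries the partial codes of the symbols beneath it.

```python
import heapq
from itertools import count


def huffman_codes(freqs):
    """Return {symbol: bit string} for a {symbol: weight} mapping."""
    if not freqs:
        return {}
    tie = count()  # unique tie-breaker so the dicts are never compared
    heap = [(w, next(tie), {sym: ""}) for sym, w in freqs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        # Assumed convention: a lone symbol still gets a one-bit code.
        return {sym: "0" for sym in heap[0][2]}
    while len(heap) > 1:
        # Remove the two lowest-weight nodes from the queue.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        # Prepend 0 to the left subtree's codes and 1 to the right's.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        # The new node's weight is the sum of its children's weights.
        heapq.heappush(heap, (w1 + w2, next(tie), merged))
    return heap[0][2]


if __name__ == "__main__":
    print(huffman_codes({"A": 15, "B": 7, "C": 6, "D": 6, "E": 5}))
```

The exact bit patterns depend on how ties are broken, but the total encoded length for a given set of weights does not.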

==== Arithmetic Coding | ==== Arithmetic Coding ==== | ||

Arithmetic coding is arguably the most effective entropy coding technique when the objective is the best compression ratio, since it usually achieves better results than Huffman Coding. It is, however, quite complicated compared to the other coding techniques. | This method was developed in 1979 at IBM, which was investigating data compression techniques for use in their mainframes. Arithmetic coding is arguably the most effective entropy coding technique when the objective is the best compression ratio, since it usually achieves better results than Huffman Coding. It is, however, quite complicated compared to the other coding techniques. | ||

Rather than splitting the probabilities of symbols into a tree, arithmetic coding transforms the input data into a single rational number between 0 and 1 by changing the base and assigning a single value to each unique symbol from 0 up to the base. Then, it is further transformed into a fixed-point binary number which is the encoded result. The value can be decoded into the original output by changing the base from binary back to the original base and replacing the values with the symbols they correspond to. | Rather than splitting the probabilities of symbols into a tree, arithmetic coding transforms the input data into a single rational number between 0 and 1 by changing the base and assigning a single value to each unique symbol from 0 up to the base. Then, it is further transformed into a fixed-point binary number which is the encoded result. The value can be decoded into the original output by changing the base from binary back to the original base and replacing the values with the symbols they correspond to. | ||

A general algorithm to compute the arithmetic code is: | A general algorithm to compute the arithmetic code is: | ||

#Calculate the number of unique symbols in the input. This number represents the base b (e.g. base 2 is binary) of the arithmetic code. | #Calculate the number of unique symbols in the input. This number represents the base b (e.g. base 2 is binary) of the arithmetic code. | ||

| Line 157: | Line 159: | ||

#Using the values from step 2, replace the symbols in the input with their codes | #Using the values from step 2, replace the symbols in the input with their codes | ||

#Convert the result from step 3 from base b to a sufficiently long fixed-point binary number to preserve precision. | #Convert the result from step 3 from base b to a sufficiently long fixed-point binary number to preserve precision. | ||

#Record the length of the input string somewhere in the result as it is needed for decoding | #Record the length of the input string somewhere in the result as it is needed for decoding.<ref name="refnum11">RISSANEN, J., AND LANGDON, G. G. 1979. Arithmetic coding. IBM J. Res. Dev. 23, 2 (Mar.), 149-162. </ref> | ||

Here is an example of an encode operation, given the input “ABCDAABD”: | Here is an example of an encode operation, given the input “ABCDAABD”: | ||

#Found 4 unique symbols in input, therefore base = 4. Length = 8 | #Found 4 unique symbols in input, therefore base = 4. Length = 8 | ||

| Line 165: | Line 167: | ||

#Replaced input with codes: “0.01230013<sub>4</sub>” where the leading 0 is not a symbol. | #Replaced input with codes: “0.01230013<sub>4</sub>” where the leading 0 is not a symbol. | ||

#Convert “0.01230013<sub>4</sub>” from base 4 to base 2: “0.0001101100000111<sub>2</sub>” | #Convert “0.01230013<sub>4</sub>” from base 4 to base 2: “0.0001101100000111<sub>2</sub>” | ||

#Result found. Note in result that input length is 8. | #Result found. Note in result that input length is 8. | ||

Assuming | Assuming 8-bit characters, the input is 64 bits long, while its arithmetic coding is just 16 bits long, resulting in an excellent compression ratio of 25%. This example demonstrates how arithmetic coding compresses well when given a limited character set. | ||
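The base-change procedure described in this section can be sketched as follows. This mirrors the simplified description given here, where every symbol gets one digit in base b; it is not a probability-interval coder of the kind used in production arithmetic coders. The names `encode` and `decode`, the sorted-alphabet digit assignment, and the choice to round the binary fraction up so that truncation never corrupts a digit are all assumptions of this sketch.

```python
from fractions import Fraction


def encode(text):
    """Return (bit string after the radix point, alphabet, input length)."""
    alphabet = sorted(set(text))          # assumed digit assignment: sorted order
    base = len(alphabet)
    value = {sym: i for i, sym in enumerate(alphabet)}
    digits = 0
    for sym in text:                      # read the input as a base-b integer
        digits = digits * base + value[sym]
    scale = base ** len(text)             # digits / scale is the fraction 0.d1d2...
    nbits = (scale - 1).bit_length()      # enough bits to separate all inputs
    numerator = -(-(digits << nbits) // scale)  # ceiling of fraction * 2**nbits
    bits = format(numerator, "b").zfill(nbits) if nbits else ""
    return bits, alphabet, len(text)


def decode(bits, alphabet, length):
    """Invert encode(): peel off `length` base-b digits from the fraction."""
    base = len(alphabet)
    frac = Fraction(int(bits, 2), 1 << len(bits)) if bits else Fraction(0)
    out = []
    for _ in range(length):
        frac *= base
        digit = int(frac)                 # integer part is the next digit
        out.append(alphabet[digit])
        frac -= digit
    return "".join(out)


if __name__ == "__main__":
    packed = encode("ABCDAABD")
    print(packed[0], decode(*packed))
```

The alphabet and the input length have to travel with the bit string, which is why the last step of the procedure records the length.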

== Compression Algorithms | == Compression Algorithms == | ||

=== Sliding Window Algorithms | === Sliding Window Algorithms === | ||

==== LZ77 | ==== LZ77 ==== | ||

Published in 1977, LZ77 is the algorithm that started it all. It introduced the concept of a 'sliding window' for the first time which brought about significant improvements in compression ratio over more primitive algorithms. | Published in 1977, LZ77 is the algorithm that started it all. It introduced the concept of a 'sliding window' for the first time which brought about significant improvements in compression ratio over more primitive algorithms. LZ77 maintains a dictionary using triples representing offset, run length, and a deviating character. The offset is how far from the start of the file a given phrase starts at, and the run length is how many characters past the offset are part of the phrase. The deviating character is just an indication that a new phrase was found, and that phrase is equal to the phrase from offset to offset+length plus the deviating character. The dictionary used changes dynamically based on the sliding window as the file is parsed. For example, the sliding window could be 64MB which means that the dictionary will contain entries for the past 64MB of the input data. | ||

Given an input "abbadabba" the output would look something like "abb(0,1,'d')(0,3,'a')" as in the example below:<br> | Given an input "abbadabba" the output would look something like "abb(0,1,'d')(0,3,'a')" as in the example below:<br> | ||

| Line 218: | Line 220: | ||

|} | |} | ||

While this substitution is slightly larger than the input, it generally achieves a considerably smaller result given longer input data. <ref name="refnum3" /> | |||
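A minimal LZ77-style encoder and decoder might look like the sketch below. Several choices here are assumptions made for illustration and differ from the notation in the example above: every token is a full (offset, length, next character) triple, offsets are counted backward from the current position rather than from the start of the file, a triple with length 0 stands for a plain literal, and the window is measured in characters. The match search is a brute-force scan, which is far slower than what real implementations use.

```python
def lz77_encode(data, window=4096):
    """Encode a string as a list of (offset, length, next_char) triples."""
    i, out = 0, []
    while i < len(data):
        best_off, best_len = 0, 0
        # Scan every candidate start inside the sliding window.
        for j in range(max(0, i - window), i):
            length = 0
            # Stop one short of the end so a deviating character always remains.
            while (i + length < len(data) - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        out.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return out


def lz77_decode(tokens):
    """Rebuild the original string from the triples."""
    out = []
    for off, length, ch in tokens:
        for _ in range(length):
            out.append(out[-off])   # copy one symbol at a time so overlaps work
        out.append(ch)
    return "".join(out)


if __name__ == "__main__":
    tokens = lz77_encode("abbadabba")
    print(tokens, lz77_decode(tokens))
```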

==== LZR | ==== LZR ==== | ||

LZR is a modification of LZ77 invented by Michael Rodeh in 1981. The algorithm aims to be a linear time alternative to LZ77. However, the encoded pointers can point to any offset in the file which means LZR consumes a considerable amount of memory. Combined with its poor compression ratio (LZ77 is often superior) it is an unfeasible variant | LZR is a modification of LZ77 invented by Michael Rodeh in 1981. The algorithm aims to be a linear time alternative to LZ77. However, the encoded pointers can point to any offset in the file which means LZR consumes a considerable amount of memory. Combined with its poor compression ratio (LZ77 is often superior) it is an unfeasible variant.<ref name="refnum18">RODEH, M., PRATT, V. R., AND EVEN, S. 1981. Linear algorithm for data compression via string matching. J. ACM 28, 1 (Jan.), 16-24. </ref><ref name="refnum12">Bell, T., Witten, I., Cleary, J., "Modeling for Text Compression", ACM Computing Surveys, Vol. 21, No. 4 (1989). </ref> | ||

==== DEFLATE | ==== DEFLATE ==== | ||

DEFLATE was invented by Phil Katz in 1993 and is the basis for the majority of compression tasks today. It simply combines an LZ77 or LZSS preprocessor with Huffman coding on the backend to achieve moderately compressed results in a short time. | DEFLATE was invented by Phil Katz in 1993 and is the basis for the majority of compression tasks today. It simply combines an LZ77 or LZSS preprocessor with Huffman coding on the backend to achieve moderately compressed results in a short time. | ||
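Python's standard `zlib` module exposes DEFLATE directly, so a round trip can be sketched in a few lines. The helper name `deflate_roundtrip` and the sample payload are made up for this example; passing a negative window-bits value (`-15`) asks zlib for a raw DEFLATE stream without the zlib header and checksum.

```python
import zlib


def deflate_roundtrip(payload: bytes, level: int = 6):
    """Compress to a raw DEFLATE stream and decompress it again."""
    compressor = zlib.compressobj(level, zlib.DEFLATED, -15)  # -15: raw stream
    raw = compressor.compress(payload) + compressor.flush()
    restored = zlib.decompress(raw, -15)
    return raw, restored


if __name__ == "__main__":
    data = b"abracadabra " * 200
    raw, restored = deflate_roundtrip(data)
    print(len(data), len(raw), restored == data)
```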

==== DEFLATE64 | ==== DEFLATE64 ==== | ||

DEFLATE64 is a proprietary extension of the DEFLATE algorithm which increases the dictionary size to 64kB (hence the name) and allows greater distance in the sliding window. It increases both performance and compression ratio compared to DEFLATE [ | DEFLATE64 is a proprietary extension of the DEFLATE algorithm which increases the dictionary size to 64kB (hence the name) and allows greater distance in the sliding window. It increases both performance and compression ratio compared to DEFLATE.<ref name="refnum19">[http://www.binaryessence.com/dct/apc/en000263.htm DEFLATE64 benchmarks] </ref> However, the proprietary nature of DEFLATE64 and its modest improvements over DEFLATE have led to limited adoption of the format. Open source algorithms such as LZMA are generally used instead. | ||

==== LZSS | ==== LZSS ==== | ||

The LZSS, or Lempel-Ziv-Storer-Szymanski, algorithm was first published in 1982 by James Storer and Thomas Szymanski. LZSS improves on LZ77 in that it can detect whether a substitution will decrease the filesize or not. If no size reduction will be achieved, the input is left as a literal in the output. Otherwise, the section of the input is replaced with an (offset, length) pair where the offset is how many bytes from the start of the input and the length is how many characters to read from that position | The LZSS, or Lempel-Ziv-Storer-Szymanski, algorithm was first published in 1982 by James Storer and Thomas Szymanski. LZSS improves on LZ77 in that it can detect whether a substitution will decrease the filesize or not. If no size reduction will be achieved, the input is left as a literal in the output. Otherwise, the section of the input is replaced with an (offset, length) pair where the offset is how many bytes from the start of the input and the length is how many characters to read from that position.<ref name="refnum21">STORER, J. A., AND SZYMANSKI, T. G. 1982. Data compression via textual substitution. J. ACM 29, 4 (Oct.), 928-951. </ref> Another improvement over LZ77 comes from eliminating the "next character" and using just an offset-length pair. | ||

Here is a brief example: the input " these theses" yields " these(0,6)s", which saves just one byte here but considerably more on larger inputs. | Here is a brief example: the input " these theses" yields " these(0,6)s", which saves just one byte here but considerably more on larger inputs. | ||

{| cellspacing="1" cellpadding="1" border="1" style="width: 581px; height: 100px;" | {| cellspacing="1" cellpadding="1" border="1" style="width: 581px; height: 100px;" | ||

| Line 286: | Line 288: | ||

|} | |} | ||

LZSS is still used in many popular archive formats, the best known of which is RAR. It is also sometimes used for network data compression. | LZSS is still used in many popular archive formats, the best known of which is RAR. It is also sometimes used for network data compression. | ||
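The literal-or-pair decision that distinguishes LZSS can be sketched as below. The assumptions are labeled plainly: offsets are counted backward from the current position (so the pair for " these theses" comes out as (6, 6) rather than the (0, 6) shown in the example above), a match shorter than the hypothetical `min_match` threshold is emitted as literals, and tokens are kept as Python objects rather than packed into flag bits as a real format would do.

```python
def lzss_encode(data, window=4096, min_match=3):
    """Encode a string as a mix of literal characters and (offset, length) pairs."""
    i, out = 0, []
    while i < len(data):
        best_off, best_len = 0, 0
        for j in range(max(0, i - window), i):
            length = 0
            while i + length < len(data) and data[j + length] == data[i + length]:
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        if best_len >= min_match:
            # The pair is assumed to be cheaper than the text it replaces.
            out.append((best_off, best_len))
            i += best_len
        else:
            # Substituting would not save space, so keep the literal.
            out.append(data[i])
            i += 1
    return out


def lzss_decode(tokens):
    """Rebuild the original string from literals and pairs."""
    out = []
    for tok in tokens:
        if isinstance(tok, tuple):
            off, length = tok
            for _ in range(length):
                out.append(out[-off])
        else:
            out.append(tok)
    return "".join(out)


if __name__ == "__main__":
    tokens = lzss_encode(" these theses")
    print(tokens, repr(lzss_decode(tokens)))
```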

==== LZH | ==== LZH ==== | ||

LZH was developed in 1987 and it stands for "Lempel-Ziv Huffman." It is a variant of LZSS that utilizes Huffman coding to compress the pointers, resulting in slightly better compression. However, the improvements gained using Huffman coding are negligible and the compression is not worth the performance hit of using Huffman codes | LZH was developed in 1987 and it stands for "Lempel-Ziv Huffman." It is a variant of LZSS that utilizes Huffman coding to compress the pointers, resulting in slightly better compression. However, the improvements gained using Huffman coding are negligible and the compression is not worth the performance hit of using Huffman codes.<ref name="refnum12" /> | ||

==== LZB | ==== LZB ==== | ||

LZB was also developed in 1987 by Timothy Bell et al as a variant of LZSS. Like LZH, LZB also aims to reduce the compressed file size by encoding the LZSS pointers more efficiently. The way it does this is by gradually increasing the size of the pointers as the sliding window grows larger. It can achieve higher compression than LZSS and LZH, but it is still rather slow compared to LZSS due to the extra encoding step for the pointers | LZB was also developed in 1987 by Timothy Bell et al as a variant of LZSS. Like LZH, LZB also aims to reduce the compressed file size by encoding the LZSS pointers more efficiently. The way it does this is by gradually increasing the size of the pointers as the sliding window grows larger. It can achieve higher compression than LZSS and LZH, but it is still rather slow compared to LZSS due to the extra encoding step for the pointers.<ref name="refnum12" /> | ||

==== ROLZ | ==== ROLZ ==== | ||

ROLZ stands for "Reduced Offset Lempel-Ziv" and its goal is to improve LZ77 compression by restricting the offset length to reduce the amount of data required to encode the offset-length pair. This derivative of LZ77 was first seen in 1991 in Ross Williams' LZRW4 algorithm. Other implementations include BALZ, QUAD, and RZM. Highly optimized ROLZ can achieve nearly the same compression ratios as LZMA; however, ROLZ suffers from a lack of popularity. | ROLZ stands for "Reduced Offset Lempel-Ziv" and its goal is to improve LZ77 compression by restricting the offset length to reduce the amount of data required to encode the offset-length pair. This derivative of LZ77 was first seen in 1991 in Ross Williams' LZRW4 algorithm. Other implementations include BALZ, QUAD, and RZM. Highly optimized ROLZ can achieve nearly the same compression ratios as LZMA; however, ROLZ suffers from a lack of popularity. | ||

==== LZP | ==== LZP ==== | ||

LZP stands for "Lempel-Ziv + Prediction." It is a special case of the ROLZ algorithm where the offset is reduced to 1. There are several variations using different techniques to achieve either faster operation or better compression ratios. LZW4 implements an arithmetic encoder to achieve the best compression ratio at the cost of speed | LZP stands for "Lempel-Ziv + Prediction." It is a special case of the ROLZ algorithm where the offset is reduced to 1. There are several variations using different techniques to achieve either faster operation or better compression ratios. LZW4 implements an arithmetic encoder to achieve the best compression ratio at the cost of speed. <ref name="refnum17">Bloom, C., "LZP: a new data compression algorithm", Data Compression Conference, 1996. DCC '96. Proceedings, p. 425 10.1109/DCC.1996.488353. </ref> | ||

==== LZRW1 | ==== LZRW1 ==== | ||

Ross Williams created this algorithm in 1991, introducing the concept of a Reduced-Offset Lempel-Ziv compression for the first time. LZRW1 can achieve high compression ratios while remaining very fast and efficient. Ross Williams also created several variants that improve on LZRW1 such as LZRW1-A, 2, 3, 3-A, and 4 [ | Ross Williams created this algorithm in 1991, introducing the concept of a Reduced-Offset Lempel-Ziv compression for the first time. LZRW1 can achieve high compression ratios while remaining very fast and efficient. Ross Williams also created several variants that improve on LZRW1 such as LZRW1-A, 2, 3, 3-A, and 4.<ref name="refnum22">[http://www.ross.net/compression/ http://www.ross.net/compression/] </ref> | ||

==== LZJB | ==== LZJB ==== | ||

Jeff Bonwick created his Lempel-Ziv Jeff Bonwick algorithm in 1998 for use in the Solaris Z File System (ZFS). It is considered a variant of the LZRW algorithm, specifically the LZRW1 variant which is aimed at maximum compression speed. Since it is used in a file system, speed is especially important to ensure that disk operations are not bottlenecked by the compression algorithm. | Jeff Bonwick created his Lempel-Ziv Jeff Bonwick algorithm in 1998 for use in the Solaris Z File System (ZFS). It is considered a variant of the LZRW algorithm, specifically the LZRW1 variant which is aimed at maximum compression speed. Since it is used in a file system, speed is especially important to ensure that disk operations are not bottlenecked by the compression algorithm. | ||

==== LZS ==== | ==== LZS ==== | ||

The Lempel-Ziv-Stac algorithm was developed by Stac Electronics in 1994 for use in disk compression software. It is a modification to LZ77 which distinguishes between literal symbols in the output and offset-length pairs, in addition to removing the next encountered symbol. The LZS algorithm is functionally most similar to the LZSS algorithm | The Lempel-Ziv-Stac algorithm was developed by Stac Electronics in 1994 for use in disk compression software. It is a modification to LZ77 which distinguishes between literal symbols in the output and offset-length pairs, in addition to removing the next encountered symbol. The LZS algorithm is functionally most similar to the LZSS algorithm.<ref name="refnum23">"Data Compression Method - Adaptive Coding with Sliding Window for Information Interchange", American National Standard for Information Systems, August 30, 1994.</ref> | ||

==== LZX | ==== LZX ==== | ||

The LZX algorithm was developed in 1995 by Jonathan Forbes and Tomi Poutanen for the Amiga computer. The X in LZX has no special meaning. Forbes sold the algorithm to Microsoft in 1996 and went to work for them, where it was further improved upon for use in Microsoft's cabinet (.CAB) format. This algorithm is also employed by Microsoft to compress Compressed HTML Help (CHM) files, Windows Imaging Format (WIM) files, and Xbox Live Avatars [ | The LZX algorithm was developed in 1995 by Jonathan Forbes and Tomi Poutanen for the Amiga computer. The X in LZX has no special meaning. Forbes sold the algorithm to Microsoft in 1996 and went to work for them, where it was further improved upon for use in Microsoft's cabinet (.CAB) format. This algorithm is also employed by Microsoft to compress Compressed HTML Help (CHM) files, Windows Imaging Format (WIM) files, and Xbox Live Avatars.<ref name="refnum24">[http://www.linkedin.com/pub/jonathan-forbes/3/70a/a4b LZX Sold to Microsoft]</ref> | ||

==== LZO | ==== LZO ==== | ||

LZO was developed by Markus Oberhumer in | LZO was developed by Markus Oberhumer in 1996 with the goal of fast compression and decompression. It allows for adjustable compression levels and requires only 64kB of additional memory for the highest compression level, while decompression only requires the input and output buffers. LZO functions very similarly to the LZSS algorithm but is optimized for speed rather than compression ratio. <ref name="refnum25">[http://www.oberhumer.com/opensource/lzo/ LZO Info]</ref> | ||

==== LZMA ==== | |||

= | The Lempel-Ziv Markov chain Algorithm was first published in 1998 with the release of the 7-Zip archiver for use in the .7z file format. It achieves better compression than bzip2, DEFLATE, and other algorithms in most cases. LZMA uses a chain of compression techniques to achieve its output. First, a modified LZ77 algorithm, which operates at a bitwise level rather than the traditional bytewise level, is used to parse the data. Then, the output of the LZ77 algorithm undergoes arithmetic coding. More techniques can be applied depending on the specific LZMA implementation. The result is considerably improved compression ratios over most other LZ variants mainly due to the bitwise method of compression rather than bytewise.<ref name="refnum26">[https://secure.wikimedia.org/wikipedia/en/wiki/Lempel–Ziv–Markov_chain_algorithm LZMA] Accessed on 12/10/2011.</ref> | ||

==== LZMA2 ==== | |||

= | LZMA2 is an incremental improvement to the original LZMA algorithm, first introduced in 2009<ref name="refnum27">[http://www.7-zip.org/history.txt LZMA2 Release Date]</ref> in an update to the 7-Zip archive software. LZMA2 improves the multithreading capabilities, and thus the performance, of the LZMA algorithm, and it handles incompressible data better, resulting in slightly better compression. | ||
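For readers who want to try LZMA2 without 7-Zip, Python's standard `lzma` module can produce an `.xz` stream with an explicit LZMA2 filter. This is only a usage sketch: the helper name `xz_roundtrip` and the sample payload are invented for the example, and the preset value is an arbitrary choice.

```python
import lzma


def xz_roundtrip(payload: bytes, preset: int = 6):
    """Compress with an explicit LZMA2 filter in an .xz container, then restore."""
    filters = [{"id": lzma.FILTER_LZMA2, "preset": preset}]
    packed = lzma.compress(payload, format=lzma.FORMAT_XZ, filters=filters)
    return packed, lzma.decompress(packed)


if __name__ == "__main__":
    data = b"lossless compression " * 600
    packed, restored = xz_roundtrip(data)
    print(len(data), len(packed), restored == data)
```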

==== Statistical Lempel-Ziv ==== | |||

= | Statistical Lempel-Ziv was a concept created by Dr. Sam Kwong and Yu Fan Ho in 2001. The basic principle it operates on is that a statistical analysis of the data can be combined with an LZ77-variant algorithm to further optimize what codes are stored in the dictionary. <ref name="refnum28">Kwong, S., Ho, Y.F., "A Statistical Lempel-Ziv Compression Algorithm for Personal Digital Assistant (PDA)", IEEE Transactions on Consumer Electronics, Vol. 47, No. 1, February 2001, pp 154-162.</ref> | ||

=== Dictionary Algorithms | === Dictionary Algorithms === | ||

==== LZ78 ==== | ==== LZ78 ==== | ||

LZ78 was created by Lempel and Ziv in 1978, hence the abbreviation. Rather than using a sliding window to generate the dictionary, the input data is either preprocessed to generate a dictionary with infinite scope of the input, or the dictionary is formed as the file is parsed. LZ78 employs the latter tactic. The dictionary size is usually limited to a few megabytes, or all codes up to a certain number of bytes such as 8; this is done to reduce memory requirements. How the algorithm handles the dictionary being full is what sets most LZ78 type algorithms apart.<ref name="refnum4" /> | |||

While parsing the file, the LZ78 algorithm adds each newly encountered character or string of characters to the dictionary. For each symbol in the input, a dictionary entry in the form (dictionary index, unknown symbol) is generated; if a symbol is already in the dictionary then the dictionary will be searched for substrings of the current symbol and the symbols following it. The index of the longest substring match is used for the dictionary index. The data pointed to by the dictionary index is added to the last character of the unknown substring. If the current symbol is unknown, then the dictionary index is set to 0 to indicate that it is a single character entry. The entries form a linked-list type data structure.<ref name="refnum12" /> | |||

An input such as "abbadabbaabaad" would generate the output "(0,a)(0,b)(2,a)(0,d)(1,b)(3,a)(6,d)". You can see how this was derived in the following example: | |||

{| cellspacing="1" cellpadding="1" border="1" style="width: 504px; height: 95px;" | |||

|- | |||

| Input:<br> | |||

| bgcolor="#666666" | <br> | |||

| a<br> | |||

| b<br> | |||

| ba<br> | |||

| d<br> | |||

| ab<br> | |||

| baa<br> | |||

| baad<br> | |||

|- | |||

| Dictionary Index<br> | |||

| 0 | |||

| 1<br> | |||

| 2<br> | |||

| 3<br> | |||

| 4<br> | |||

| 5<br> | |||

| 6<br> | |||

| 7<br> | |||

|- | |||

| Output<br> | |||

| NULL<br> | |||

| (0,a)<br> | |||

| (0,b)<br> | |||

| (2,a)<br> | |||

| (0,d)<br> | |||

| (1,b)<br> | |||

| (3,a) | |||

| (6,d)<br> | |||

|} | |||
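The parse shown in the table above can be reproduced with a short Python sketch. The function names and the handling of an input that ends in the middle of a known phrase (an extra pair with an empty symbol) are assumptions for illustration; the sketch also lets the dictionary grow without bound, whereas real LZ78 variants cap its size.

```python
def lz78_encode(data):
    """Encode a string as a list of (dictionary index, symbol) pairs."""
    dictionary = {}          # phrase -> index, starting at 1; 0 means "no prefix"
    out = []
    phrase = ""
    for ch in data:
        if phrase + ch in dictionary:
            phrase += ch     # keep extending the longest known match
        else:
            out.append((dictionary.get(phrase, 0), ch))
            dictionary[phrase + ch] = len(dictionary) + 1
            phrase = ""
    if phrase:
        # Input ended inside a known phrase: emit it with an empty symbol.
        out.append((dictionary[phrase], ""))
    return out


def lz78_decode(pairs):
    """Rebuild the original string by replaying the dictionary construction."""
    phrases = [""]           # index 0 is the empty prefix
    out = []
    for index, ch in pairs:
        entry = phrases[index] + ch
        phrases.append(entry)
        out.append(entry)
    return "".join(out)


if __name__ == "__main__":
    pairs = lz78_encode("abbadabbaabaad")
    print(pairs, lz78_decode(pairs))
```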

==== LZW ==== | ==== LZW ==== | ||

LZW is the Lempel-Ziv-Welch algorithm created in 1984 by Terry Welch. It is the most commonly used derivative of the LZ78 family, despite being heavily patent-encumbered. LZW improves on LZ78 in a similar way to LZSS; it removes redundant characters in the output and makes the output entirely out of pointers. It also includes every character in the dictionary before starting compression, and employs other tricks to improve compression such as encoding the last character of every new phrase as the first character of the next phrase. LZW is commonly found in the Graphics Interchange Format, as well as in the early specifications of the ZIP format and other specialized applications. LZW is very fast, but achieves poor compression compared to most newer algorithms and some algorithms are both faster and achieve better compression. <ref name="refnum12" /> | |||
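A byte-oriented LZW round trip can be sketched as follows. The function names are illustrative, the dictionary is allowed to grow without limit, and the output is a plain list of integer codes rather than the variable-width bit packing that GIF and other formats use; those simplifications are assumptions of the sketch.

```python
def lzw_encode(data: bytes):
    """Encode bytes as a list of integer codes."""
    # The dictionary starts with every single-byte string already present.
    table = {bytes([i]): i for i in range(256)}
    phrase = b""
    out = []
    for byte in data:
        candidate = phrase + bytes([byte])
        if candidate in table:
            phrase = candidate
        else:
            out.append(table[phrase])
            table[candidate] = len(table)
            # The mismatching byte starts the next phrase.
            phrase = bytes([byte])
    if phrase:
        out.append(table[phrase])
    return out


def lzw_decode(codes):
    """Rebuild the bytes, reconstructing the dictionary on the fly."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    prev = table[codes[0]]
    out = [prev]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            # Code refers to the phrase being defined right now.
            entry = prev + prev[:1]
        else:
            raise ValueError("invalid LZW code")
        out.append(entry)
        table[len(table)] = prev + entry[:1]
        prev = entry
    return b"".join(out)


if __name__ == "__main__":
    codes = lzw_encode(b"TOBEORNOTTOBEORTOBEORNOT")
    print(codes, lzw_decode(codes))
```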

==== LZC ==== | |||

LZC, or Lempel-Ziv Compress is a slight modification to the LZW algorithm used in the UNIX compress utility. The main difference between LZC and LZW is that LZC monitors the compression ratio of the output. Once the ratio crosses a certain threshold, the dictionary is discarded and rebuilt. <ref name="refnum12" /> | |||

==== LZT ==== | |||

Lempel-Ziv Tischer is a modification of LZC that, when the dictionary is full, deletes the least recently used phrase and replaces it with a new entry. There are some other incremental improvements, but neither LZC nor LZT is commonly used today. <ref name="refnum12" /> | |||

==== LZMW ==== | ==== LZMW ==== | ||

Invented in 1984 by Victor Miller and Mark Wegman, the LZMW algorithm is quite similar to LZT in that it employs the least recently used phrase substitution strategy. However, rather than joining together similar entries in the dictionary, LZMW joins together the last two phrases encoded and stores the result as a new entry. As a result, the size of the dictionary can expand quite rapidly and LRUs must be discarded more frequently. LZMW generally achieves better compression than LZT, however it is yet another algorithm that does not see much modern use. <ref name="refnum12" /> | |||

==== LZAP ==== | ==== LZAP ==== | ||

LZAP was created in 1988 by James Storer as a modification to the LZMW algorithm. The AP stands for "all prefixes": rather than storing a single phrase in the dictionary each iteration, the dictionary stores the previous phrase concatenated with every prefix of the current phrase. For example, if the last phrase was "last" and the current phrase is "next" the dictionary would store "lastn", "lastne", "lastnex", and "lastnext". <ref name="refnum31">David Salomon, Data Compression – The complete reference, 4th ed., page 212</ref> | |||

==== LZWL ==== | ==== LZWL ==== | ||

LZWL is a modification to the LZW algorithm created in 2006 that works with syllables rather than single characters. LZWL is designed to work better with certain datasets with many commonly occurring syllables such as XML data. This type of algorithm is usually used with a preprocessor that decomposes the input data into syllables.<ref name="refnum30">Chernik, K., Lansky, J., Galambos, L., "Syllable-based Compression for XML Documents", Dateso 2006, pp 21-31, ISBN 80-248-1025-5.</ref> | |||

==== LZJ ==== | ==== LZJ ==== | ||

Matti Jakobsson published the LZJ algorithm in 1985<ref name="refnum33">Jakobsson, M., "Compression of Character Strings by an Adaptive Dictionary", BIT Computer Science and Numerical Mathematics, Vol. 25 No. 4 (1985). doi:10.1007/BF01936138</ref> and it is one of the few LZ78 algorithms that deviates from LZW. The algorithm works by storing every unique string in the already processed input up to an arbitrary maximum length in the dictionary and assigning codes to each. When the dictionary is full, all entries that occurred only once are removed.<ref name="refnum12" /> | |||

=== | === Non-dictionary Algorithms === | ||

==== PPM ==== | |||

= | Prediction by Partial Matching is a statistical modeling technique that uses a set of previous symbols in the input to predict what the next symbol will be in order to reduce the entropy of the output data. This is different from a dictionary since PPM makes predictions about what the next symbol will be rather than trying to find the next symbols in the dictionary to code them. PPM is usually combined with an encoder on the back end, such as arithmetic coding or adaptive Huffman coding.<ref name="refnum34">Cleary, J., Witten, I., "Data Compression Using Adaptive Coding and Partial String Matching", IEEE Transactions on Communications, Vol. COM-32, No. 4, April 1984, pp 396-402. </ref> PPM or a variant known as PPMd are implemented in many archive formats including 7-Zip and RAR. | ||

==== | ==== bzip2 ==== | ||

bzip2 is an open source implementation of the Burrows-Wheeler Transform. Its operating principles are simple, yet they achieve a very good compromise between speed and compression ratio that makes the bzip2 format very popular in UNIX environments. First, a Run-Length Encoder is applied to the data. Next, the Burrows-Wheeler Transform is applied. Then, a move-to-front transform is applied with the intent of creating a large amount of identical symbols forming runs for use in yet another Run-Length Encoder. Finally, the result is Huffman coded and wrapped with a header.<ref name="refnum32">Seward, J., "bzip2 and libbzip2", bzip2 Manual, March 2000.</ref> | |||
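Two of the stages named above, the Burrows-Wheeler Transform and the move-to-front transform, can be sketched naively in Python. This is not how bzip2 itself is implemented: the sketch works on text, sorts full rotations (quadratic memory), and marks the end of the input with an assumed sentinel character `"\0"` that must not occur in the data.

```python
def bwt(text, eos="\0"):
    """Return the last column of the sorted rotations of text + sentinel."""
    assert eos not in text, "sentinel must not appear in the input"
    s = text + eos
    rotations = sorted(s[i:] + s[:i] for i in range(len(s)))
    return "".join(rotation[-1] for rotation in rotations)


def inverse_bwt(last, eos="\0"):
    """Rebuild the input by repeatedly prepending the last column and sorting."""
    table = [""] * len(last)
    for _ in range(len(last)):
        table = sorted(last[i] + table[i] for i in range(len(last)))
    row = next(r for r in table if r.endswith(eos))
    return row[:-1]


def move_to_front(text):
    """Replace each symbol by its position in a list that is updated as we go."""
    alphabet = sorted(set(text))
    out = []
    for ch in text:
        idx = alphabet.index(ch)
        out.append(idx)
        # Recently seen symbols move to the front, so runs become zeros.
        alphabet.insert(0, alphabet.pop(idx))
    return out


if __name__ == "__main__":
    transformed = bwt("banana")
    print(repr(transformed), move_to_front(transformed), inverse_bwt(transformed))
```

The transform itself saves nothing; it only groups similar symbols so that the later run-length and Huffman stages have more to work with.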

==== | ==== PAQ ==== | ||

PAQ was created by Matt Mahoney in 2002 as an improvement upon older PPM(d) algorithms. The way it does this is by using a revolutionary technique called context mixing. Context mixing is when multiple statistical models (PPM is one example) are intelligently combined to make better predictions of the next symbol than any single model by itself. PAQ is one of the most promising algorithms because of its extremely high compression ratio and very active development. Over 20 variants have been created since its inception, with some variants achieving record compression ratios. The biggest drawback of PAQ is its slow speed due to using multiple statistical models to get the best compression ratio. However, since hardware is constantly getting faster, it may be the standard of the future.<ref name="refnum35">Mahoney, M., "Adaptive Weighting of Context Models for Lossless Data Compression", Unknown, 2002.</ref> PAQ is slowly being adopted, and a variant called PAQ8O which brings 64-bit support and major speed improvements can be found in the PeaZip program for Windows. Other PAQ formats are mostly command-line only. | |||

== | == References == | ||

<references /> | |||

[[Category:Data compression|Lossless]] [[Category:Computing and electronics|Lossless]] | |||

[ | [[Category:Data_compression]] | ||

Revision as of 15:09, 20 August 2014

Introduction

There are two major categories of compression algorithms: lossy and lossless. Lossy compression algorithms involve the reduction of a file’s size usually by removing small details that require a large amount of data to store at full fidelity. In lossy compression, it is impossible to restore the original file due to the removal of essential data. Lossy compression is most commonly used to store image and audio data, and while it can achieve very high compression ratios through data removal, it is not covered in this article. Lossless data compression is the size reduction of a file, such that a decompression function can restore the original file exactly with no loss of data. Lossless data compression is used ubiquitously in computing, from saving space on your personal computer to sending data over the web, communicating over a secure shell, or viewing a PNG or GIF image.

The basic principle that lossless compression algorithms work on is that any non-random file will contain duplicated information that can be condensed using statistical modeling techniques that determine the probability of a character or phrase appearing. These statistical models can then be used to generate codes for specific characters or phrases based on their probability of occurring, and assigning the shortest codes to the most common data. Such techniques include entropy encoding, run-length encoding, and compression using a dictionary. Using these techniques and others, an 8-bit character or a string of such characters could be represented with just a few bits resulting in a large amount of redundant data being removed.

History

Data compression has only played a significant role in computing since the 1970s, when the Internet was becoming more popular and the Lempel-Ziv algorithms were invented, but it has a much longer history outside of computing. Morse code, invented in 1838, is the earliest instance of data compression in that the most common letters in the English language such as “e” and “t” are given shorter Morse codes. Later, as mainframe computers were starting to take hold in 1949, Claude Shannon and Robert Fano invented Shannon-Fano coding. Their algorithm assigns codes to symbols in a given block of data based on the probability of the symbol occuring. The probability is of a symbol occuring is inversely proportional to the length of the code, resulting in a shorter way to represent the data. [1]

Two years later, David Huffman was studying information theory at MIT and had a class with Robert Fano. Fano gave the class the choice of writing a term paper or taking a final exam. Huffman chose the term paper, which was to be on finding the most efficient method of binary coding. After working for months and failing to come up with anything, Huffman was about to throw away all his work and start studying for the final exam in lieu of the paper. It was at that point that he had an epiphany, figuring out a very similar yet more efficient technique to Shannon-Fano coding. The key difference between Shannon-Fano coding and Huffman coding is that in the former the probability tree is built bottom-up, creating a suboptimal result, and in the latter it is built top-down.[2]

Early implementations of Shannon-Fano and Huffman coding were done using hardware and hardcoded codes. It was not until the 1970s, with the advent of the Internet and online storage, that software compression was implemented and Huffman codes were dynamically generated based on the input data.[1] Later, in 1977, Abraham Lempel and Jacob Ziv published their groundbreaking LZ77 algorithm, the first algorithm to use a dictionary to compress data. More specifically, LZ77 used a dynamic dictionary oftentimes called a sliding window.[3] In 1978, the same duo published their LZ78 algorithm which also uses a dictionary; unlike LZ77, this algorithm parses the input data and generates a static dictionary rather than generating it dynamically.[4]

Legal Issues

Both the LZ77 and LZ78 algorithms grew rapidly in popularity, spawning many variants shown in the diagram to the right. Most of these algorithms have died off since their invention, with just a handful seeing widespread use today including DEFLATE, LZMA, and LZX. Most of the commonly used algorithms are derived from the LZ77 algorithm. This is not due to technical superiority, but because LZ78 algorithms became patent-encumbered after Sperry patented the derivative LZW algorithm in 1984 and began suing software vendors, server admins, and even end users for using the GIF format without a license. [5][6]

At the time, the UNIX compress utility used a very slight modification of the LZW algorithm called LZC and was later discontinued due to patent issues. Other UNIX developers also began to move away from the LZW algorithm in favor of open source ones. This led the UNIX community to adopt the DEFLATE-based gzip and the Burrows-Wheeler Transform-based bzip2 formats. In the long run, this was a benefit for the UNIX community because both the gzip and bzip2 formats nearly always achieve significantly higher compression ratios than the LZW format.[6] The patent issues surrounding LZW have since subsided, as the patent on the LZW algorithm expired in 2003.[5] Despite this, the LZW algorithm has largely been replaced and is only commonly used in GIF compression. There have also been some LZW derivatives since then, but they do not enjoy widespread use either, and LZ77 algorithms remain dominant.

Another legal battle erupted in 1993 regarding the LZS algorithm. LZS was developed by Stac Electronics for use in disk compression software such as Stacker. Microsoft used the LZS algorithm in developing disk compression software that was released with MS-DOS 6.0 and purported to double the capacity of a hard drive. When Stac Electronics found out its intellectual property was being used, it filed suit against Microsoft. Microsoft was later found to have infringed Stac's patents and was ordered to pay Stac Electronics $120 million in damages, less $13.6 million awarded to Microsoft in a countersuit; the infringement was found not to be willful.[7] Although Stac Electronics v. Microsoft had a large judgment, it did not impede the development of Lempel-Ziv algorithms as the LZW patent dispute did. The only consequence seems to be that LZS has not been forked into any new algorithms.

The Rise of Deflate

Corporations and other large entities have used data compression since the Lempel-Ziv algorithms were published as they have ever-increasing storage needs and data compression allows them to meet those needs. However, data compression did not see widespread use until the Internet began to take off toward the late 1980s when a need for data compression emerged. Bandwidth was either limited, expensive, or both, and data compression helped to alleviate these bottlenecks. Compression became especially important when the World Wide Web was developed as people began to share more images and other formats which are considerably larger than text. To meet the demand, several new file formats were developed incorporating compression including ZIP, GIF, and PNG.

Thom Henderson released the first commercially successful archive format, called ARC, in 1985 through his company, System Enhancement Associates. ARC was especially popular in the BBS community, since it was one of the first programs capable of both bundling and compressing files and it was also made open source. The ARC format uses a modification to the LZW algorithm to compress data. A man named Phil Katz noticed ARC's popularity and decided to improve it by writing the compression and decompression routines in assembly language. He released his PKARC program as shareware in 1987 and was later sued by Henderson for copyright infringement. He was found guilty and forced to pay royalties and other penalties as part of a cross-licensing agreement, because PKARC was a blatant copy of ARC; in some instances even the typos in the comments were identical. [8]

Phil Katz could no longer sell PKARC after 1988 due to the cross-licensing agreement, so in 1989 he created a tweaked version of PKARC that is now known as the ZIP format. As a result of its use of LZW, it was considered patent encumbered and Katz later chose to switch to the new IMPLODE algorithm. The format was again updated in 1993, when Katz released PKZIP 2.0, which implemented the DEFLATE algorithm as well as other features like split volumes. This version of the ZIP format is found ubiquitously today, as almost all .zip files follow the PKZIP 2.0 format despite its great age.

The GIF, or Graphics Interchange Format, was developed by CompuServe in 1987 to allow bitmaps to be shared without data loss (although the format is limited to 256 colors per frame), while substantially reducing the file size to allow transmission over dialup modems. However, like the ZIP format, GIF is also based on the LZW algorithm. Although the format was patent-encumbered, Unisys was unable to enforce its patents well enough to stop the format from spreading. Even now, over 20 years later, GIF remains in use, especially for its ability to be animated. [9]

Although GIF could not be stopped, CompuServe sought a format unencumbered by patents and in 1994 introduced the Portable Network Graphics (PNG) format. Like ZIP, the PNG standard uses the DEFLATE algorithm to perform compression. Although DEFLATE was patented by Katz[10] the patent was never enforced and thus PNG and other DEFLATE-based formats avoid infringing on patents. Although LZW took off in the early days of compression, due to Unisys's litigious nature it has more or less died off in favor of the faster and more efficient DEFLATE algorithm. DEFLATE is currently the most used data compression algorithm since it is a bit like the Swiss Army knife of compression.

Beyond its use in the PNG and ZIP formats, DEFLATE is also used very frequently elsewhere in computing. For example, the gzip (.gz) file format uses DEFLATE since it is essentially an open source version of ZIP. Other uses of DEFLATE include HTTP, SSL, and other technologies designed for efficient data compression over a network.

Sadly, Phil Katz did not live long enough to see his DEFLATE algorithm take over the computing world. He suffered from alcoholism for several years and his life began to fall apart in the late 1990s, having been arrested several times for drunk driving and other violations. Katz was found dead in a hotel room on April 14, 2000, at the age of 37. The cause of death was found to be acute pancreatic bleeding brought on by his alcoholism; many empty bottles of liquor were found near his body. [11]

Current Archival Software

The ZIP format and other DEFLATE-based formats were king up until the mid 1990s when new and improved formats began to emerge. In 1993, Eugene Roshal released his archiver known as WinRAR which utilizes the proprietary RAR format. The latest version of RAR uses a combination of the PPM and LZSS algorithms, but not much is known about earlier implementations. RAR has become a standard format for sharing files over the Internet, specifically in the distribution of pirated media. An open-source implementation of the Burrows-Wheeler Transform called bzip2 was introduced in 1996 and rapidly grew in popularity on the UNIX platform against the DEFLATE-based gzip format. Another open-source compression program was released in 1999 as the 7-Zip or .7z format. 7-Zip could be the first format to challenge the dominance of ZIP and RAR due to its generally high compression ratio and the format's modularity and openness. This format is not limited to using one compression algorithm, but can instead choose between bzip2, LZMA, LZMA2, and PPMd algorithms among others. Finally, on the bleeding edge of archival software are the PAQ* formats. The first PAQ format was released by Matt Mahoney in 2002, called PAQ1. PAQ substantially improves on the PPM algorithm by using a technique known as context mixing which combines two or more statistical models to generate a better prediction of the next symbol than either of the models on their own.

Future Developments

The future is never certain, but based on current trends some predictions can be made as to what may happen in the future of data compression. Context Mixing algorithms such as PAQ and its variants have started to attract popularity, and they tend to achieve the highest compression ratios but are usually slow. With the exponential increase in hardware speed following Moore's Law, context mixing algorithms will likely flourish, as faster hardware overcomes their speed penalty and makes their high compression ratios practical. The algorithm that PAQ sought to improve, called Prediction by Partial Matching (PPM), may also see new variants. Finally, the Lempel-Ziv Markov chain Algorithm (LZMA) has consistently shown itself to have an excellent compromise between speed and high compression ratio and will likely spawn more variants in the future. LZMA may even be the "winner" as it is further developed, having already been adopted in numerous competing compression formats since it was introduced with the 7-Zip format. Another potential development is the use of compression via substring enumeration (CSE), which is an up-and-coming compression technique that has not seen many software implementations yet. In its naive form it performs similarly to bzip2 and PPM, and researchers have been working to improve its efficiency.[12]

Compression Techniques

Many different techniques are used to compress data. Most compression techniques cannot stand on their own, but must be combined together to form a compression algorithm. Those that can stand alone are often more effective when joined together with other compression techniques. Most of these techniques fall under the category of entropy coders, but there are others such as Run-Length Encoding and the Burrows-Wheeler Transform that are also commonly used.

Run-Length Encoding

Run-Length Encoding is a very simple compression technique that replaces runs of two or more of the same character with a number which represents the length of the run, followed by the original character; single characters are coded as runs of 1. RLE is useful for highly-redundant data, indexed images with many pixels of the same color in a row, or in combination with other compression techniques like the Burrows-Wheeler Transform.

Here is a quick example of RLE:

Input: AAABBCCCCDEEEEEEAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

Output: 3A2B4C1D6E38A
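
The scheme described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production encoder: the function names `rle_encode` and `rle_decode` are made up for this example, and the decoder assumes the original text contains no digit characters, since digits are used for the run counts.

```python
from itertools import groupby


def rle_encode(text: str) -> str:
    """Write each run as <count><character>; single characters become runs of 1."""
    return "".join(f"{len(list(run))}{char}" for char, run in groupby(text))


def rle_decode(encoded: str) -> str:
    """Invert rle_encode. Assumes the original text contained no digits."""
    pieces = []
    count = ""
    for ch in encoded:
        if ch.isdigit():
            count += ch          # run lengths may span several digits, e.g. "38"
        else:
            pieces.append(ch * int(count))
            count = ""
    return "".join(pieces)


print(rle_encode("AAABBCCCCD"))   # 3A2B4C1D
print(rle_decode("3A2B4C1D"))     # AAABBCCCCD
```

Note that coding single characters as runs of 1 means input with few repeats can grow rather than shrink, which is why RLE is usually paired with data or transforms that produce long runs.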

Burrows-Wheeler Transform

The Burrows-Wheeler Transform is a compression technique invented in 1994 that aims to reversibly transform a block of input data such that the number of runs of identical characters is maximized. The BWT itself does not perform any compression; it simply transforms the input so that it can be coded more efficiently by a Run-Length Encoder or other secondary compression technique.

The algorithm for a BWT is simple:

- Create a string array.

- Generate all possible rotations of the input string, storing each in the array.

- Sort the array alphabetically.

- Return the last column of the array.[13]

BWT usually works best on long inputs with many alternating identical characters. Here is an example of the algorithm being run on an ideal input. Note that & is an End of File character, which in this example sorts after the letters:

| Input | Rotations | Alpha-Sorted Rotations | Output |
| --- | --- | --- | --- |
| HAHAHA& | HAHAHA& | AHAHA&H | HHH&AAA |
| | &HAHAHA | AHA&HAH | |
| | A&HAHAH | A&HAHAH | |
| | HA&HAHA | HAHAHA& | |
| | AHA&HAH | HAHA&HA | |
| | HAHA&HA | HA&HAHA | |
| | AHAHA&H | &HAHAHA | |

Because of its alternating identical characters, performing the BWT on this input generates an optimal result that another algorithm could further compress, such as RLE, which (coding the single & as a run of 1) would yield "3H1&3A". While this example generated an optimal result, the BWT does not generate optimal results on most real-world data.
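
The four steps listed earlier can be sketched in Python as follows. This is a naive illustration that materializes every rotation, so it is only practical for short inputs. The names `bwt`, `inverse_bwt`, and `_sort_key` are made up for this example, and the sort key is an assumption chosen so that the End of File marker & sorts after every other character, matching the example table. The inverse uses the well-known approach of repeatedly prepending the output column and re-sorting, which shows that the transform is reversible.

```python
EOF = "&"  # End of File marker; assumed not to occur in the input


def _sort_key(row: str):
    # Order characters normally, except that EOF sorts after everything else.
    return [(ch == EOF, ch) for ch in row]


def bwt(text: str) -> str:
    """Return the last column of the sorted rotations of text + EOF."""
    if EOF in text:
        raise ValueError("input must not contain the EOF marker")
    s = text + EOF
    rotations = sorted((s[i:] + s[:i] for i in range(len(s))), key=_sort_key)
    return "".join(row[-1] for row in rotations)


def inverse_bwt(last_column: str) -> str:
    """Rebuild the sorted rotation table column by column, then pick the
    row that ends with EOF, which is the original input."""
    table = [""] * len(last_column)
    for _ in range(len(last_column)):
        table = sorted(
            (ch + row for ch, row in zip(last_column, table)), key=_sort_key
        )
    return next(row for row in table if row.endswith(EOF))[:-1]


print(bwt("HAHAHA"))            # HHH&AAA
print(inverse_bwt("HHH&AAA"))   # HAHAHA
```

Practical implementations avoid building the full rotation table, but the quadratic version keeps the correspondence with the steps above easy to see.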

Entropy Encoding

Entropy in data compression means the smallest number of bits needed, on average, to represent a symbol or literal. A basic entropy coder combines a statistical model and a coder. The input file is parsed and used to generate a statistical model that consists of the probabilities of a given symbol appearing. Then, the coder will use the statistical model to determine what bit or byte codes to assign to each symbol such that the most common symbols have the shortest codes and the least common symbols have the longest codes.[14]
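
As a rough illustration of the statistical model, the sketch below counts symbol frequencies and computes the Shannon entropy, H = -Σ p(s) · log2 p(s), in bits per symbol. It assumes the simplest possible model, in which each symbol is treated independently of its neighbors; the function name `shannon_entropy` is made up for this example.

```python
import math
from collections import Counter


def shannon_entropy(data: str) -> float:
    """Average bits per symbol under a model that treats symbols independently."""
    counts = Counter(data)   # the statistical model: how often each symbol occurs
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


print(shannon_entropy("AABB"))   # 1.0 (two equally likely symbols need 1 bit each)
print(shannon_entropy("ABCD"))   # 2.0 (four equally likely symbols need 2 bits each)
```

Multiplying the result by the number of symbols gives a lower bound, under this simple model, on the size any symbol-by-symbol code can achieve for that input.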

Shannon-Fano Coding

This is one of the earliest compression techniques, invented in 1949 by Claude Shannon and Robert Fano. This technique involves generating a binary tree to represent the probabilities of each symbol occurring. The symbols are ordered such that the most frequent symbols appear at the top of the tree and the least likely symbols appear at the bottom.

The code for a given symbol is obtained by searching for it in the Shannon-Fano tree, and appending to the code a value of 0 or 1 for each left or right branch taken, respectively. For example, if “A” is two branches to the left and one to the right, its code would be “001” in binary. Shannon-Fano coding does not always produce optimal codes because it builds the binary tree from the top down. For this reason, Huffman coding is usually used instead, as it generates an optimal prefix code for any given set of symbol probabilities.

The algorithm to generate Shannon-Fano codes is fairly simple:

- Parse the input, counting the occurrence of each symbol.