ETA Systems Hardware Technologies (1983-88)

Revision as of 15:22, 4 August 2009

ETA Systems Hardware Technology

Preface

ETA Systems Inc. was spun off from a struggling Control Data Corporation (CDC) as its Supercomputer subsidiary. The objective was to develop and manufacture High Performance Computers, which in the 80’s and 90’s were commonly called simply Supercomputers. Cray Research Inc. dominated this market during this time frame, and CDC held a minor market position, having introduced the Star-100 followed by the CYBER-203 and CYBER-205 systems. Novel architecture (fast scalar performance and the efficient use of vectors), innovative software, the highest performance integrated circuits (resulting in the fastest clock period) and innovative packaging (to optimize device spacing and thermal management) differentiated Supercomputers from conventional computer systems during this period. To be "fair and balanced", it must be stated that Supercomputers also had the highest price tag and demanded the largest memories and the highest performance peripherals and system bandwidths. Systems dominating the market during the 80’s were the Cray-1, Cray X-MP and CYBER-205. NEC, Fujitsu and Hitachi also developed systems in this market. The word Supercomputer was applied to other products as well; leaving them out here is not intended to dismiss them.

The following overview will not enter into the decisions to separate ETA Systems from Control Data Corporation organizationally, although that topic is interesting as well. Nor will it discuss software innovations at ETA Systems, and there were many. Architecture had a role in dictating the technology in terms of the number of logic circuits that were in series per clock cycle. Architecture also demanded high performance large registers (temporary storage devices), which in turn affected the performance (clock cycle) of the system. Other architecture features (instructions) dictated the number of functions that constituted a processor (gates per CPU), which in turn determined technology selection in terms of preferred Gates per Chip and Ports per Chip. Proximity of chips to each other was crucial to processor design during this time period, since a CPU could not reside within the boundary of a single chip as it easily does today. Bandwidth, i.e., the number of bytes per unit of time that can be moved between functions within the CPU and between the CPU and its associated memory, is key. It places demands on pins or logic paths between functions that usually require compromise in each and every design. Those reading this now will find this humorous, I am sure, with multiprocessing units (multiple CPUs) now residing within the boundaries of a single chip or IC die. In the 80’s and well into the mid 90’s, however, partitioning the necessary logic or Boolean functions of a CPU across multiple integrated circuit chips (usually multiple hundreds of chips) and multiple complex printed circuit boards (2 to 8) was an integral part of determining the overall performance, power consumption, cost and reliability of the system.

Introduction

ETA Systems technology was selected in 1980 (the organization was the Advanced Design Laboratory of Control Data Corporation at the inception) with the following objectives:

1. The highest performance Supercomputer at the time of product delivery

2. The most cost effective technology available

3. The lowest possible power consumption while meeting other objectives

4. The largest product diversity with a single design

5. The highest possible reliability. Pins and interconnects usually dictated the reliability, since by that time Integrated Circuit technology had reached a very high reliability for both logic and storage devices.

6. Leverage as much of the technology as possible to the follow-on computer generation. This usually fell by the wayside until ECAD and MCAD technologies were introduced into the design.

7. Utilize only standard IC technology processes being developed for other markets

8. Demonstrate the prototype of the product in less than four years

Digging deeper into objectives

The highest performance Supercomputer at the time of product delivery

Simply stated, the highest performance processor solved the largest problems most effectively. Performance was usually measured by clock cycle, which was unfortunate since the amount of calculation completed per clock cycle varied from design to design. This single parameter carried the bragging rights, although later Gigaflops became the stated parameter, and that also did not necessarily reflect the true performance of a supercomputer. The “king of the hill” in any given cycle (usually 2 to 4 years) held the largest market share.

The most cost effective technology available

The most “bang” for the buck applied to Supercomputers as well as other markets. The customer treated the highest performance on his particular challenges as the higher priority, provided that the performance clearly exceeded lower cost alternatives. A legend of the Supercomputer industry, Jim Thornton, once described the requirement as getting through an intersection without having an accident. Since a Supercomputer required so many components and interconnects, it was bound to fail more often than a small computer. So, Jim surmised, the faster the computer, the more that could be solved before something went wrong. Go through an intersection as fast as possible, not at a slow rate, and you have a better chance of getting through safely.

The lowest possible power consumption while meeting other objectives

Each follow-on generation of Supercomputer products witnessed increased power per processor, which was justified by the resultant performance realized. The lower the RC (resistance-capacitance) time constant, the faster the computer clock cycle, and since lowering R raises the power, power climbed with each generation. As the number of processors per system increased, power consumption became a major issue: for the largest users because of site related “wall plug” power capacity limits, and for the smaller users because of basic life-of-system cost concerns (mainly power consumption, cooling and system reliability).

The largest product diversity with a single design

Design cycles were three to five years in duration and large teams were assembled to complete a single design. Development costs per product were significant. Product cost ($2M to $40M per system) and performance ranges (greater than 20:1) for each generation of Supercomputers were increasing. Since optimized cost points for each product were technology dependent, this required multiple design teams, each design utilizing a unique technology. A single total design (packaging, IC selection, manufacturing tooling, and associated boards, connectors, etc.) was therefore desirable for a myriad of obvious reasons. In fact, most companies focused on only a portion of the computer market and were dedicated to only a small portion of the product "bandwidth". Cray Research focused on the high end; IBM, CDC, Unisys and others did an admirable job in the middle; and companies like DEC and HP were at the lower end. There were others across the world; these companies are only examples.

The highest possible reliability

Supercomputers required significant bandwidth to pass data between processing units and between processors and memory. Bandwidth is a key differentiator that separates true Supercomputers from conventional computer systems. Interconnects were and still are dominant reliability concerns in large systems. Thermal management is also significant. Large systems, by the nature of the design, require a large quantity of components to be simultaneously functional. Each interconnect and each active logic device (integrated circuit) requires the highest reliability to permit large user problems to be solved using Supercomputers. Simply stated, the uninterrupted operational time of a Supercomputer had to exceed the time needed to solve the largest customer problem.
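The link between part count, failure rate and the size of problem a system can finish can be sketched with a simple series-reliability model. This is an illustration only: the exponential failure model, the function names and every number below are assumptions introduced here, not figures from the ETA Systems program.

```python
import math


def system_mtbf_hours(part_count: int, failures_per_billion_hours: float) -> float:
    """MTBF of a system that stops when any one part fails.

    Assumes independent parts with the same constant failure rate,
    so the part failure rates simply add.
    """
    system_failures_per_hour = part_count * failures_per_billion_hours / 1e9
    return 1.0 / system_failures_per_hour


def completion_probability(job_hours: float, mtbf_hours: float) -> float:
    """Chance that a job of the given length runs to the end without a failure."""
    return math.exp(-job_hours / mtbf_hours)


# Hypothetical figures, chosen only to make the arithmetic easy to follow.
mtbf = system_mtbf_hours(part_count=100_000, failures_per_billion_hours=100.0)
short_job = completion_probability(job_hours=10.0, mtbf_hours=mtbf)
long_job = completion_probability(job_hours=100.0, mtbf_hours=mtbf)

print(f"System MTBF: {mtbf:.0f} hours")
print(f"10 hour job completes:  {short_job:.2f}")
print(f"100 hour job completes: {long_job:.2f}")
```

Under these assumptions, a faster machine that shortens the job improves the odds of finishing just as a more reliable interconnect does, which is the point of the intersection analogy.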

Leverage as much of the technology as possible to the follow-on generation

Significant cost for development of a given technology “kit” had to be leveraged for as long a period as possible. It was a “given” that most Integrated Circuits would be developed for each generation of computer. What about interconnects (connectors)? What about printed circuit boards? What about support technologies like simulation tools, assembly tooling and basic packaging? Can any of these technologies extend to the next generation? And are there any “mid life kickers” that could be inserted into a successful product to extend its market life? IBM did exceptional work in taking hardware across product boundaries and generations of new products. The initial tooling for packaging was expensive, but the results in later products appeared to pay dividends. Cray Research Inc. and Control Data, by contrast, generated new packaging and connector technology with each new generation of product.

Utilize only standard IC technology processes being developed for other markets

This addresses two major issues: cost and access to technology. Cost, since dedicated IC processing lines with unique processes for low volume products, even if they could be realized, would not allow effective amortization of costs for process development and manufacturing. Access to technology, since unique system designs had to be accommodated by advanced, popular (high volume) processes. Innovation in the IC industry was and is applied to the markets with the highest return on investment. The “trick” was to apply this “standard” and most innovative technology to low volume Supercomputer applications.

Demonstrate operating product prototypes in less than four years

This requires discipline as well as good management and leadership. Due to the complexity of Supercomputers, we felt that tools had to be upgraded significantly and checkout and diagnostics improved as well. At any given time improvements are being made in Supercomputer technologies. Allowing each incremental development to be incorporated extends the product development cycle significantly. Selecting only known (proven) technologies at the time of product development initiation results in a non-competitive product. Risk must be taken. Which areas to take risk in (technologies that are not available at product initiation but look the most promising), as well as the return on that risk, must be carefully evaluated with the factors clearly understood. Where to invest in new technology development, the return on that investment, and the leverage available where other markets are interested in common technologies must all be understood as well. Back-up alternatives should be identified. The CEO of Cray Research, John Rollwagen, defined the challenge as "How many 3-point shots should each project take?" Missing market cycles is costly. Under-reaching (being conservative) and over-reaching (bad choices in “betting on the come”) were also costly and prohibitive. All of these factors were carefully evaluated. It might be added, using the same basketball comparison, that a project cannot have too many “lay ups” (sure things already developed) either!

Results

Before getting to the details of how decisions were made and how the ETA Systems technology “kit” was selected and developed, here is a list of noteworthy accomplishments:

First Industry competitive CMOS Supercomputer CPU

From 1995 to the present (beginning 12 years after the technology selection by ETA Systems, I might add) ALL HPC (High Performance Computer) systems have been developed and manufactured using CMOS IC technology. Until as late as 2000, bipolar technology (higher power, more costly to manufacture and lower gate count per chip) dominated high performance computers throughout the world.

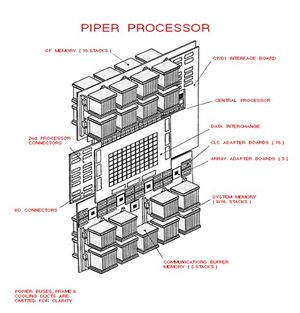

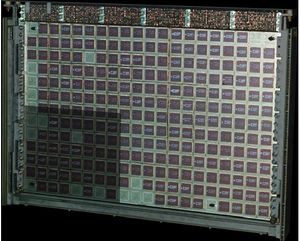

First Industry Single Board CPU

The chip density (gates per chip) allowed by advanced CMOS, the use of Computer Aided Design tools for optimum layout and simulation, the successful design of an advanced 45 layer Printed Circuit board (you read it right, 45 layers) and innovative chip attachment and cooling permitted a single processor containing nearly 3 million gates to be packaged on a single board.

First Industry system to be designed with self-test

CPU Processing units (≈3 million gates each) were validated for functionality and performance in less than 4 hours. Any interconnect errors were recorded, which allowed chip-to-chip replacement to occur in minimal time. Other CPUs of the same period required weeks to months to check out and validate a processing unit. Incoming testing of the logic IC chips (function and performance) used the same self-test innovations.

First Industry production Liquid Nitrogen CPU

The ETA Systems CPU was immersed in Liquid Nitrogen (77 degrees Kelvin), which improved performance to more than two times that of the same CMOS technology operated at room temperature (300 degrees Kelvin).

First system at CDC to fully utilize Computer Design Software to design Chips, boards, validate Logic design and Auto Diagnostic test the system with Synergistic tools

This permitted checkout of a CPU to be completed in less than 4 hours. Manufacturing costs were greatly reduced. This technique was also used at the IC supplier, where it greatly reduced the need for probe test hardware and software.

First Industry system to have multiple cost designs from single design effort

The performance range of the ETA Systems products was greater than 24:1 (from an 8 processor system operating at a 7 nanosecond clock period down to a single processor system operating at a 24 nanosecond clock period). Processors were manufactured, tested and validated on a single manufacturing line using identical components (IC chips were performance sorted using auto self-test). Product differences began at the system packaging level.
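The quoted range can be checked with a quick calculation. The sketch below assumes that peak throughput scales with the number of processors divided by the clock period (equal work per clock on every processor); that scaling rule and the function name are simplifications made here for illustration.

```python
def relative_throughput(processors: int, clock_period_ns: float) -> float:
    """Peak throughput in arbitrary units: processors per nanosecond of clock period.

    Assumes every processor completes the same amount of work per clock.
    """
    return processors / clock_period_ns


high_end = relative_throughput(processors=8, clock_period_ns=7.0)
low_end = relative_throughput(processors=1, clock_period_ns=24.0)
performance_range = high_end / low_end

print(f"Performance range: {performance_range:.1f}:1")  # prints "Performance range: 27.4:1"
```

Under that assumption the two end points stated above are about 27:1 apart, consistent with "greater than 24:1".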

Boring into the Details

Any Technology kit must be driven by a customer need. In the case of Supercomputers the craving for increased computer performance at a lower cost (overall cost) was the deciding factor. In any Supercomputer company a combination of marketing requirements, architecture innovations and logic design demands dictate the initial objectives of the hardware circuit and packaging organization. I state “initial” since once the objectives are digested and key technologies are evaluated for the time frame addressed, compromises are the norm. In the case of ETA Systems technology selections in the early 1980’s, this was the strategy implemented.

The following paragraphs sequence the thought process and the technology selection strategy utilized.

Integrated Circuit selection

The objectives listed in earlier paragraphs were first integrated into the architecture and logic design requirements. A market survey of key integrated circuit suppliers was conducted, with emphasis on what was in development and planned for product introduction, not what was available at the time of the survey. A risk assessment was made. Primary focus was on the most dynamic technology, the IC Logic technology. All decisions as to volume requirements, pins, packaging, etc. resulted from what was determined by this survey and risk analysis. Merging in the logic design objectives (gates, bandwidth and performance of key functions) was next.

An ECL (emitter coupled logic) high performance bipolar gate array using Motorola advanced IC technology was selected. Since Motorola was not fully staffed to begin the actual product development (application) but did have the process development underway, a cooperative development agreement was struck between the two companies (Motorola and Control Data, since ETA Systems had not yet been formed). The design called for basic logic cells to be incorporated into a larger version of Motorola’s existing gate array, advancing the process for increased performance and the chip size for increased gate capacity. The existing gate (or function) array provided approximately 2,500 gates and was used as the primary gate array for the very popular Cray Research Y-MP Supercomputer; the planned gate array would contain in excess of 8,000 equivalent gates.

Logic cell libraries were agreed to (acceptable both to Motorola for the general market and to CDC for the logic designs). Pin counts (for power, ground and input/output logic communications) were established and power consumption estimates were made. Once these parameters were established, board size, power systems and thermal control were evaluated in a give-and-take trade off. Features of Printed Circuit Boards (line widths, spacing, interconnect vias and number of layers) were compared to the board size capacities, laminating press capabilities, drill designs and printed circuit board processing limits. IC packaging limits (minimum size of package, pin spacing, thermal removal, etc.) were evaluated in parallel with PC Board limits.

The chip design, the cell library and the packaging work began once all parameters (pins, power consumption and die size objectives) were agreed to. Printed circuit board experiments also began. Once feasibility and practical limits were established (the original goals could be met as to physical design and performance, based on IC modeling and extrapolation from previously established functional systems), a preliminary specification was presented to the architects and logic designers for review.

From the initial design data, the logic design based on the parameters provided established a physical size for the Central Processing Unit or CPU, the heart of the system. A multiple board processor was required. This placed additional constraints on packaging, since within a single processor all distances between circuits are crucial. Three-dimensional packaging concepts were considered. Three-dimensional packaging effectively meant a “sandwich” of multiple boards, with board-to-board interconnects distributed throughout the board area rather than confined to the periphery, so that the distances between chips on the different boards would be minimized. In addition, power consumption estimates were made, and thermal removal paths and techniques were considered. A cost model was generated as well. All of these factors resulted in a preliminary estimate of the CPU volume. As the results listed earlier make clear, this design was ultimately rejected; more on that to follow.

In parallel with these efforts, memory design was underway. Less freedom was available to the memory designers, since the basic semiconductor device could not be altered to accommodate specific users. There were a few packaging alternatives, very few, and device configurations (Word – Bit architecture, pin numbering, power considerations, etc.) were dictated by the industry. Since memory design has its own objectives for cost, reliability and performance, this effort could continue quite independently, with one exception: the packaging of the total system must be synergistic and compatible. A crucial parameter here is the interconnect mechanism between processors and memory.

A hardware system cost model was established, not only for current cost considerations but also for estimates of volume costs over the life of the system, based on learning-curve estimates.

The chief architect, after careful review, rejected the design, as already noted. Three key reasons were cited. First, performance would be impacted by the 8,000 gate limit (worst case logic paths could not reside in a single chip, and chip-to-chip distances would increase the clock period). Second, power consumption per CPU, although lower on a performance ratio basis than in previous generations, was too high when the total system size (including the multiprocessor objectives) was considered. Third, system cost appeared prohibitive, always a subjective issue but nevertheless a key component of the design. Reliability concerns were also stated, since the pin count per CPU, although quite reduced from previous designs, was still of concern. The architecture was committed to four CPUs (max) per system, so the interconnect "bar" was raised.

Mini tutorial

Bipolar technology refers to conventional NPN and PNP transistors operating in a non-saturating mode (collector-base). By not saturating the operating transistors (not allowing the base voltage to rise above the collector voltage), the switching characteristics were improved and balanced (off logic levels and on logic levels had identical delays). In addition, the non-saturating circuitry, titled ECL for Emitter Coupled Logic, provided the TRUE and COMPLEMENT outputs for each logic function (i.e., AND & NAND, OR & NOR, etc.). This gave logicians advantages in designing complex Boolean functions (ADD units, MULTIPLY units, DIVIDE units, etc.). Under the category of “no free ride”, ECL circuitry consumed higher power than the more popular but much slower saturating logic circuitry (TTL, transistor-transistor logic). Other improvements in performance for integrated versions of ECL logic circuitry included replacing conventional junction isolation between circuit devices on a single die with oxide isolation between circuits (lower capacitance per circuit, so less charging and discharging when logic levels switched).

CMOS (Complementary Metal Oxide Semiconductor) circuitry, especially at the time of ETA Systems, was a simpler and more efficient logic circuit. This form of logic also had a simpler process. Stacking P channel and N channel transistors in series between the voltage bus rails defines a single complementary gate. Functionality of the logic devices is much more forgiving of process variations, due to the larger voltage swing and because only active transistors are used to define the circuitry (no resistors, diodes, etc.). The physical size of a logic function is significantly smaller than its bipolar equivalent, resulting in more circuitry per equivalent die (chip) size. CMOS technology also consumed power ONLY when the circuit was switching (changing states), so power consumption was directly proportional to the frequency at which it was operating (P = CV²f, where C is the switched capacitance, V the supply voltage and f the switching frequency).
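The switching-power relationship for CMOS can be illustrated numerically. The sketch below uses the standard dynamic power expression P = C·V²·f; the capacitance, voltage and frequency values are hypothetical and were chosen only to show the proportionalities, not to represent the ETA Systems chips.

```python
def dynamic_power_watts(capacitance_farads: float,
                        supply_volts: float,
                        frequency_hz: float) -> float:
    """Dynamic (switching) power of CMOS logic: P = C * V^2 * f."""
    return capacitance_farads * supply_volts ** 2 * frequency_hz


# Hypothetical operating point: 1 nF of switched capacitance, 5 V supply, 50 MHz.
baseline = dynamic_power_watts(1e-9, 5.0, 50e6)

# Doubling the frequency doubles the power.
doubled_frequency = dynamic_power_watts(1e-9, 5.0, 100e6)

# Doubling the voltage quadruples the power.
doubled_voltage = dynamic_power_watts(1e-9, 10.0, 50e6)

print(f"Baseline:          {baseline:.2f} W")
print(f"Frequency doubled: {doubled_frequency / baseline:.1f}x baseline")
print(f"Voltage doubled:   {doubled_voltage / baseline:.1f}x baseline")
```

The model ignores leakage, which was a reasonable simplification for the feature sizes of the 1980's.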

ECL circuitry, by contrast, consumed approximately the same power whether switching or in a quiescent state. (Later forms of CMOS, especially those designed in the early 2000s and beyond, had increased power consumption, primarily caused by increased bulk leakage currents resulting from lithography processes with features smaller than 90 nanometers.) Technology at the time of the development of the ETA Supercomputers had minimum features of 1,200 nanometers. (In 2009, by contrast, the production capability is 45 nanometers.)

The advantages of CMOS were obvious: more circuits per given chip area, lower power consumption and higher functional yield. It is important to stress “functional yield”. The CMOS devices functioned over a much larger range of processing variations (> 50% vs. < 15% to 25% for ECL).

Performance variations for a given process were approximately 2 to 3 times for CMOS and 20% to 30% for ECL. For this reason CMOS devices were sold at a much lower performance than any bipolar counterpart. That is, if the product was specified to accommodate the entire functional lot (wafers processed at the same time), more IC devices yielded. There is one other key difference in defining performance between Bipolar and CMOS devices. For ECL (or any other bipolar device) the maximum operating frequency is defined, in part, by the base width, the physical distance between the emitter and collector of the transistor. This is determined by the diffusion or implant of the emitter; it is controlled in the vertical direction and limited by process control that is quite precise. The base is very thin, and the frequency is inversely proportional to the base width. For CMOS the gate length defines the critical performance parameter. Gate length is defined by mask optic limitations for any generation of processes. Bipolar devices in the 1980’s and well into the latter half of the 1990’s, therefore, had higher maximum operating frequencies than their CMOS counterparts. As capital equipment (primarily the optics used to generate masks, and etching capabilities) defined smaller and smaller geometries, CMOS technology improved dramatically in performance. This was a result of smaller gate lengths, but each generation also had smaller devices, resulting in lower capacitance loading and lower time constants to charge and discharge.

During the time of the ETA Systems Supercomputer development, CMOS technology had not yet achieved the performance that bipolar devices could realize. But the potential for future improvements was obvious, and projections clearly indicated that by the second half of the 1990’s (nearly 10 years after the first ETA Systems Supercomputer would be available), CMOS would overtake Bipolar in the last and most important parameter: performance. To restate this, the IC industry was transitioning to CMOS technology, and more funding at the device and equipment level was being expended to accommodate new markets focused on the potential of CMOS than was being expended for Bipolar devices.

Bipolar technology was stretched to a practical limit for the time frame in question.

The IC industry, therefore, had only one other technology candidate, CMOS, which in 1983 was used exclusively for lower cost and considerably lower performance applications, and for memory devices, where more bits per die could be fabricated at the expense of lower performance than the Bipolar counterpart(s). The impressive characteristics of CMOS technology at this time were lower power consumption per function, smaller size per logic function and lower cost per die. The lower cost came from two key factors: smaller physical size per function meant more logic functions per unit of area, and a reduced number of processing steps meant higher chip yield (functional chips per wafer manufactured). That was the good news. The concern was system performance. While bipolar technology had set the standard of 10 ns clock periods for Supercomputer architectures such as the one projected for the ETA system, CMOS was at least 5 times slower, and in most cases 10 to 20 times slower, for equivalent architectures. Based on this parameter alone, CMOS was not a candidate for Supercomputers in the 1989-1990 time frame (the time frame in which the ETA Systems Supercomputer would be in high volume production).

The next steps for CDC (recall that at this time CDC still had a Supercomputer Division) were dramatic and at times emotional. First, the team had to discard the ECL design and terminate the effort with Motorola. This was very difficult, first because both companies depended on each other and second because all objectives of the ECL product were being met within the specifications established. The CDC team (which later became ETA Systems) provided Motorola with all of the design details to date. Considerable effort was made to ensure that the program was successful at Motorola.

A sidelight to this discussion: Motorola completed this product as an industry product. Cray Research Inc. (the key competition and leader of the Supercomputer market) engaged with Motorola to successfully complete this complex IC development for a product announced in the late 1980’s. The product (Cray C-90), under the leadership of Les Davis, Steve Nelson and other notable scientists (a key circuit designer was Mark Birrittella), became another very successful supercomputer product developed and manufactured by Cray Research Inc.

Next, a full effort evaluation of all technology candidates occurred. CMOS futures were explored in depth. GaAs technology was also evaluated. Alternative ECL (bipolar) candidates were also considered. CMOS was viewed as the technology of the future but the future was beyond the time frame necessary for product introduction.

The following paragraphs summarize key events that led to the decision to use CMOS technology.

Moore’s law (formulated by the great innovator and co-founder of Intel, Gordon Moore) stated that IC technology (CMOS) would double in performance and density every 18 months to two years. The actual Moore’s law may have been stated somewhat differently, but this captured all the project cared about. For this predicted growth to be achieved, several things had to occur:

- The die size would increase (more gates per manufactured chip).

- Features on the chip (metal widths and spaces to interconnect devices, and actual device parameters) would be reduced every 16 months to 2 years. Reducing feature sizes has two positive results for the goals of ETA Systems: increased performance and more gates per die.

- The technology would gain popularity. This would mean that capital equipment would keep pace with the “law”; applications would increase, thus increasing volume, lowering cost and increasing performance; and more applications and industries would drive CMOS technology. The Supercomputer industry could not drive such a large industry by itself.
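The doubling rule as the project understood it can be turned into a rough projection. In the sketch below, the five-year planning horizon and the function name are assumptions made for illustration; only the 18 month and two year doubling periods come from the text.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplier on density (or performance) after the given number of years,
    assuming one doubling per doubling period."""
    return 2.0 ** (years / doubling_period_years)


horizon_years = 5.0  # hypothetical span between technology selection and delivery

optimistic = growth_factor(horizon_years, doubling_period_years=1.5)
conservative = growth_factor(horizon_years, doubling_period_years=2.0)

print(f"Doubling every 18 months: {optimistic:.1f}x after {horizon_years:.0f} years")
print(f"Doubling every 2 years:   {conservative:.1f}x after {horizon_years:.0f} years")
```

The spread between the two results shows why the choice of doubling period mattered so much when betting on a technology that was not yet available at project start.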

Key industry activities also emerged at this time

1) CDC validated the performance gains of operating CMOS technology in a cryogenic environment. Several ring oscillator configurations built with the 5,000 gate chip discussed earlier were dipped in a thermos jug of Liquid Nitrogen. We expected to witness the shattering of the silicon and the detachment of the solder joints connecting the oscilloscope; instead, the frequency of the ring oscillators doubled and the system operated for weeks until we turned off the experiment. Analysis applied to the Silicon design validated the research done previously by others.

2) Key US Government agencies began a technology acceleration program based on CMOS technology – the Very High Speed Integrated Circuits (VHSIC) program under direction of the Army, Navy and Air Force certainly captured our attention.

3) Honeywell, one of the participants in the VHSIC program, held a technology luncheon IEEE symposium in which they presented an 11,000-gate CMOS development effort. Attendees from CDC (especially the key designer, Randy Bach) were impressed with the effort. The chip was certainly larger than any that had been developed to date, and its performance was beyond what the conventional IC industry predicted for the 1988 time frame (the introduction date set for the ETA Systems Supercomputer, then the next generation CDC Supercomputer). Honeywell was a recipient of one of the VHSIC contracts.

4) Logicians and architects back at CDC, led by Neil Lincoln (chief architect), Ray Kort, Maurice Hudson, Dave Hill and others, determined that a minimum gate density of 15,000 gates per die would allow them to achieve a key objective: having a worst case Register to Register clock path reside within a single chip. Some additional explanation is required here. There were technical reasons that the logicians wanted more, beyond the knee-jerk reaction that asking for 50% more than offered was a standard mode of operation for these guys. Each architecture configuration has a method of achieving its goals of applying computational instructions to problems. The number of gates that are connected in serial fashion between the input and output registers (and this is truly simplifying the problem) determines the clock period that is allowed. For the ETA Systems Supercomputer, therefore, it was determined that a functional unit clock period could reside within the boundary of the chip if the chip could provide 15,000 gates of logic to the designer.

5) Research into technology experiments uncovered significant performance features of CMOS technology. First of all, the technology was functional across a wide range of voltages and temperatures, but performance was significantly altered. The higher the operating voltage (within semiconductor constraints, of course), the higher the resulting performance. Unfortunately the power consumption, although significantly lower than that of any alternative technology, increased as the square of the operating voltage. The lower the operating temperature of CMOS, the higher the performance as well. This factor was studied by others and carefully documented from 400 degrees Kelvin (100 degrees above room temperature) to 77 degrees Kelvin. (77 degrees Kelvin is the boiling point of liquid Nitrogen.)
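The voltage trade-off in item 5 can be put in rough numbers. The sketch below assumes the standard first-order model for CMOS switching power, P ≈ C·V²·f, with capacitance and clock frequency held fixed. The function name and the example voltages are illustrative and are not taken from the ETA design.

```python
def relative_switching_power(v_new, v_ref):
    """Return switching power at v_new relative to v_ref.

    Assumes power is proportional to V**2 with capacitance and clock
    frequency held constant (a first-order model, not ETA data).
    """
    if v_new <= 0 or v_ref <= 0:
        raise ValueError("voltages must be positive")
    return (v_new / v_ref) ** 2


if __name__ == "__main__":
    # Hypothetical supply voltages: a 20% increase costs about 44% more power.
    print(round(relative_switching_power(6.0, 5.0), 2))
```

In practice a higher supply voltage usually goes with a higher clock rate, which raises power further, so the square law is best read as a lower bound on the cost.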

So, let’s summarize what was learned with this evaluation:

- IC chips currently (four years before the need for an ETA Systems product) had a capacity of 11,000 gates.
- These gates, when operated at liquid Nitrogen temperatures, would perform at least two times faster than at room temperature (not yet validated at CDC).
- 15,000 useable gates were required per chip to meet logic designer chip boundary requirements.
- If Moore’s law was applied to these parameters, it was possible within the time frame required to achieve both the gates per chip density and the performance goals (if the system operated in a liquid Nitrogen environment).
- There were at least two IC Suppliers (those having contracts with the US government) that were pursuing CMOS as a performance and high gate/chip density technology (the other known corporation was TRW).
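The Moore's law argument in this summary can be checked with a few lines of arithmetic. The sketch below starts from the 11,000-gate figure and the four-year horizon given above and applies the 18-month and 24-month doubling periods quoted earlier. It treats doubling as applying to gate density alone, which is a simplifying assumption; the function name is illustrative.

```python
def projected_gates(gates_now, months_ahead, doubling_months):
    """Project gate count, assuming density doubles every doubling_months."""
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    return gates_now * 2 ** (months_ahead / doubling_months)


if __name__ == "__main__":
    start = 11_000   # gates per chip available at the time (from the text)
    horizon = 48     # months, "four years before the need"
    for doubling in (18, 24):   # both ends of the quoted doubling period
        print(doubling, round(projected_gates(start, horizon, doubling)))
```

Even the slower 24-month rate projects to 44,000 gates, comfortably above the 15,000 useable gates the logic designers required. This is a best-case density figure and says nothing about yield or cost.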

Computer Aided Design (CAD) tools were, during the 1980s, in their infancy compared to today’s capabilities. To design, place cells within the matrix of gates provided on the IC Chip, and route the interconnections of these cells accurately to the logic or Boolean design required by the logicians and to clock period constraints was a challenge. This challenge applied to board layout designs as well. Control Data Corporation (CDC) recognized the challenges and established a small but efficient and dedicated organization to address them. The industry had established a metric that using CAD tools for gate or cell arrays required an additional 20% to 30% of gates. This meant that if the ETA Supercomputer required at least 15,000 useable gates to accomplish the necessary designs based on its architecture, a capacity of 18,000 to ≈20,000 gates was required. The technology organization set as its objective a design of 20,000 gates plus the circuitry necessary to self-test each gate or cell array. Compared to the gate array in development at Honeywell, this was nearly 2 times the capacity (11,000 total gates vs. 20,000 total gates plus circuitry for self-test).
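The capacity target follows directly from the figures in this paragraph. The short sketch below reproduces it, reading the 20% to 30% metric as overhead added on top of the useable gate count (the reading that matches the 18,000 to ≈20,000 range in the text). The function name is illustrative.

```python
def required_capacity(usable_gates, overhead_percent):
    """Total gates on the array when CAD needs overhead_percent extra gates."""
    return usable_gates * (100 + overhead_percent) // 100


if __name__ == "__main__":
    usable = 15_000
    low = required_capacity(usable, 20)    # 18000
    high = required_capacity(usable, 30)   # 19500, rounded to 20,000 in the text
    print(low, high)
    # Ratio of the 20,000-gate target to the 11,000-gate Honeywell array.
    print(round(20_000 / 11_000, 2))       # 1.82, i.e. "nearly 2 times"
```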

The task was to convince Honeywell to project the next generation size and layout rules and to accept an R&D effort that would allow CDC / ETA Systems to achieve its objectives. Honeywell, an innovative organization, took on the task after considerable discussion, with these key requirements:

a) ETA Systems (we were now ETA Systems by the time these discussions reached negotiations) accept costs based on wafers processed, not functional chips. Honeywell would provide necessary processing data to reflect wafers were processed within process parameter specifications.

b) ETA Systems provide test equipment for wafer testing and test parameters for chip acceptance prior to packaging.

c) Both companies would share facilities and key resources and work as a single team, as “open kimono” a relationship as one could ever imagine during this dynamic period of complex process developments within the IC Industry. David Frankel was assigned the task of ETA Systems interface and energetically took on the challenge.

Self-test circuitry was designed into the basic cell array periphery. The area consumed by this custom set of pseudo-random generated logic and registers was less than 15% of the total chip area. (David Resnick, resident do-it-all, reduced concepts explored by ex-CDC scientist Nick Van Brunt, who left the company a year before the formation of ETA Systems.) This was one of many extraordinary contributions David made to ETA Systems. In addition to providing self-test capability to accept or reject the circuitry (both functionality and performance sorting), the circuitry included in each 20,000-gate array was able to test the interconnect between circuits on the final PC Board as well as circuit to I/O connections.

When the logic design team first heard of this “waste” of area on test circuits that could be used for logic design, they lobbied for it to be removed in favor of more logic gates for functional designs. Fortunately this request was not honored. The circuitry was used for IC validation at the supplier in wafer form and at ETA Systems in packaged chip form, and again in manufacturing checkout to detect board opens and shorts between circuits assembled in both room temperature and cryogenic temperature environments. It proved to be well worth this “waste” of circuitry area. Small, relatively inexpensive testing systems were designed by ETA Systems and provided to the supplier. The operands for initialization of the pseudo-random logic were also supplied for each design (chip type).
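The text does not describe the self-test circuitry in detail, so the following is only a generic sketch of how pseudo-random self-test commonly works, not the ETA design. It assumes a 16-bit linear feedback shift register (LFSR) as the pattern generator, a simple rotate-and-XOR register to compress responses into a signature, and a seed standing in for the "operands for initialization". The polynomial, the seed value and both circuit functions are hypothetical.

```python
SEED = 0xACE1  # hypothetical initialization operand for one chip type


def lfsr16(seed, count):
    """Yield count 16-bit patterns from a Fibonacci LFSR (taps 16, 14, 13, 11)."""
    if not 0 < seed <= 0xFFFF:
        raise ValueError("seed must be a non-zero 16-bit value")
    state = seed
    for _ in range(count):
        yield state
        bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
        state = (state >> 1) | (bit << 15)


def signature(circuit, seed, count):
    """Apply the patterns to circuit and fold the responses into 16 bits."""
    sig = 0
    for pattern in lfsr16(seed, count):
        response = circuit(pattern) & 0xFFFF
        # Rotate the running signature left by one bit, then mix in the response.
        sig = (((sig << 1) | (sig >> 15)) & 0xFFFF) ^ response
    return sig


def good_circuit(x):
    """Stand-in for a fault-free logic block (hypothetical)."""
    return (x * 3 + 1) & 0xFFFF


def faulty_circuit(x):
    """Same block with one output bit wrong for a single input pattern."""
    y = good_circuit(x)
    return y ^ 1 if x == SEED else y


if __name__ == "__main__":
    reference = signature(good_circuit, SEED, 1000)
    print("good chip passes:  ", signature(good_circuit, SEED, 1000) == reference)
    print("faulty chip passes:", signature(faulty_circuit, SEED, 1000) == reference)
```

The appeal of this style of test is that the tester only needs to supply a seed and compare one short signature, which is consistent with the small, inexpensive test systems mentioned above.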

Chip types (array design options) were carefully managed so as not to proliferate the chip types in the system. This was a new constraint placed on logic designers and was dealt with most professionally and responsibly by all participants once understood. The resultant chip total for the CPU (processing unit) was fewer than 150, while the number of chip types, including clock chips and all logic design chips, was fewer than 20 as best recalled.

During the development cycle of the ETA Systems Supercomputer, Honeywell moved the manufacturing capability from a local Minneapolis facility to a state-of-the-art manufacturing facility in Colorado Springs, CO. The transition was very transparent to ETA Systems (with the exception of the traveling budget, of course). To accomplish this, team members from both companies acted as one in all decisions addressing scheduling and timing of needs for the various chips, testing, packaging, etc. The open book relationship was very beneficial to both companies. On one milestone occasion, when Honeywell successfully completed an initial order, Dave Frankel and I visited Honeywell, some 30 miles from the ETA Systems facility, and served cake and coffee to all designers and operators. It was below zero when this milestone was reached and no one cared.

One feature incorporated into the chip design allowed next generation critical processing parameters to be added to the existing design (present chip layout). Although this would not take full advantage of a new process (not all parameters were considered), key performance enhancements could be and were added to the present design. A key parameter was gate length; this was added transparently to the physical chip and offered appreciable performance enhancements to the design.

Chip design summary

The decision to utilize CMOS technology for the ETA Systems Supercomputer in the 1985 – 1988 time frame (prematurely by all industry metrics) resulted in the following additional “technology kit” decisions:

1) Addition of chip self-test. This feature established functionality at wafer test, and functionality and performance sorting at ETA Systems
2) Computer Layout tools that validated logic prior to chip release for fabrication
3) Requirement to operate the chip at 77 degrees Kelvin or in liquid Nitrogen
4) Packaging, interconnect & assembly decisions based on liquid Nitrogen operation challenges
5) Remote testing of the CPU because of liquid Nitrogen operation challenges
6) Logic design partitioning challenges to design within 15,000-gate per chip boundaries and a minimum of IC chip types

Printed Circuit Board Design Selection

In the 1980s, the time frame of the ETA Systems Supercomputer development, printed circuit boards had maximum dimensions of approximately a square foot and fewer than 20 total layers. (Layers provide power and ground stability, interconnect capability for the circuits attached to the board, and inputs and outputs to and from the board.) If these layers are allocated properly, approximately 50% are used for interconnect and the remainder for power and ground. Positioning of the power and ground layers also serves to give the interconnect layers transmission line capabilities that ensure signal integrity throughout the board. During this period, a state-of-the-art printed circuit board had approximately one square foot of active circuitry and, as stated earlier, 20 layers or fewer, usually restricted to a total thickness of 0.063 inches.

It was determined that a maximum of 150 chips would be required to design the ETA Systems Supercomputer CPU. Packaging the IC and interconnecting the chip to a PC board with minimum spacing between chips (some spacing was required to allow interconnects to all of the necessary layers) resulted in a 1.2 x 1.2 inch “footprint”. Doing the simple math results in a pc board of a minimum of 220 sq. inches. The number of total layers required to interconnect the 150 chips and the necessary Input and Output at the board periphery was determined to be 45. Looking at the design parameters of the board layers in more depth, and ensuring transmission line features for signal integrity, defined the board thickness at slightly greater than 0.25 inches. This thickness was approximately three times greater than high-end printed circuit boards produced in this time frame. With an area greater than 1.5 times what could be produced, a thickness 300% of what was produced and a number of layers 2.5 times what was produced in this time frame, it was clear that the printed circuit board industry was not ready for the ETA Systems design!

The design had other limitations. A key factor when designing pc boards is to ensure proper connection of the layers, i.e., connecting the chip pins to the board and the proper layer of interconnect in the board and back to the proper receiving chip. These connections are made by drilling holes in the layers and plating the walls of the holes with copper for conduction. These are called plated thru holes or PTH. A key parameter to ensure that plating occurs in these holes is the ratio of hole depth to hole diameter. The industry limit at this period (not much better today) was 6:1, i.e., the thickness of the board must be no more than 6 times the diameter of the hole. This ratio would dominate the size of the board. If this ratio were used to design the board, the board area would increase by more than 9 times. Talk about piling on! Since it was deemed not feasible, issues like cost and time to fabricate the board were not even addressed.
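The "simple math" behind these figures can be laid out explicitly. The sketch below uses only numbers quoted in the two paragraphs above, plus 144 sq. inches as a stand-in for "approximately a square foot". The function names are illustrative, and the multiples it prints are rough, so they need not match the rounded figures quoted in the text.

```python
CHIPS = 150                        # maximum chip count for the CPU
FOOTPRINT_IN = 1.2                 # edge of one chip footprint, inches
BOARD_THICKNESS_IN = 0.25          # required board thickness, inches
REQUIRED_LAYERS = 45
MAX_ASPECT_RATIO = 6               # industry limit, board thickness : hole diameter

CONVENTIONAL_AREA_SQIN = 144       # assumption: one square foot
CONVENTIONAL_THICKNESS_IN = 0.063
CONVENTIONAL_LAYERS = 20


def min_board_area(chips, footprint_in):
    """Area taken by the chip footprints alone, in square inches."""
    return chips * footprint_in ** 2


def min_hole_diameter(thickness_in, aspect_ratio):
    """Smallest hole that can still be plated at the given aspect ratio."""
    return thickness_in / aspect_ratio


if __name__ == "__main__":
    area = min_board_area(CHIPS, FOOTPRINT_IN)
    print(f"chip footprint area: {area:.0f} sq in")   # 216, "a minimum of 220"
    print(f"area multiple:      {area / CONVENTIONAL_AREA_SQIN:.2f}")
    print(f"thickness multiple: {BOARD_THICKNESS_IN / CONVENTIONAL_THICKNESS_IN:.2f}")
    print(f"layer multiple:     {REQUIRED_LAYERS / CONVENTIONAL_LAYERS:.2f}")
    hole = min_hole_diameter(BOARD_THICKNESS_IN, MAX_ASPECT_RATIO)
    print(f"minimum hole diameter at 6:1: {hole:.3f} in")
```

At a 6:1 limit, a 0.25 inch board needs holes of roughly 0.04 inch diameter, and holes that large force chip pins and routing channels further apart, which is why the aspect ratio would end up dominating the board size.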

Nestled into the design laboratory of Control Data Corporation was a small but very innovative printed circuit board prototype facility. The leader of this group, LeRoy Beckman, never said “no” to challenges. He just bit his pipe a little harder and tried not to snicker out loud. LeRoy kept his eyes and ears out for innovative alternatives to conventional board fabrication techniques and had displayed innovation (evolutionary in nature) in previous generations. Embedded termination resistors in layers was one invention he brought to CDC when resistor termination took up too much board area; finer features than the industry was producing was another, and higher plated thru hole (PTH) ratios than the industry a third.

New technologies in the printed circuit board industry were few and far between. The industry was set in its ways of subtractive etching of circuit layers (removing unwanted copper from a pre-copper-clad layer), conventional wet etch processes and relatively simple assembly, i.e., lamination of layers with pressure. One inventor, Mr. Peter P. Pellegrino, arrived on the scene to discuss innovative, revolutionary and proven pc board processing. At first the claims appeared to be too good to be true: board size was relatively independent, aspect ratios exceeded 20:1 for PTH, and an additive process permitted finer lines to be fabricated on individual layers. The layers were also embedded into the laminate, giving the opportunity for higher yield with reduced features. An additional benefit of additive plating is a reduction in waste and water usage.

A special plating cell was also introduced that permitted uniform deep hole plating by forcing plating fluid into each of the thousands of PTH. The process, titled “Push-Pull™”, also accelerated the plating manufacturing cycle by over an order of magnitude, reducing cost.

A small plating cell was incorporated into the prototype facility at CDC and a controlled set of experiments conducted. Experiments were thorough and challenging since no one in the industry could approach the lofty objectives of the ETA Systems Supercomputer CPU board nor the lofty claims of the inventor. The results were simply outstanding. From the results and a commitment to fabricate a larger manufacturing line of plating insert cells, the 45 layer 15” x 24” CPU board became a realistic finalized goal of ETA Systems. Anyone told of this goal openly scoffed at this as too risky and unrealistic. This included some in the company as well.

Later, when manufacturing of the systems was viable, a production capacity was developed. Hundreds of these boards were fabricated between 1987 and early 1989. The yield of final boards was nearly perfect: only one finished board was scrapped.

To this day (2009) few realize what a monumental accomplishment this was and still is. This is a tribute to LeRoy Beckman, Peter Pellegrino, the manufacturing facility at ETA Systems (now a banking building in St. Paul) and those who trusted that the lofty objectives could be realized.

To accommodate routing and designing for minimum distance between IC chips, CAD tools were developed and diagonally routed layers were introduced for the first time. Prior to this, only x–y layers were permitted with manual and/or auto tools (CAD). This enhancement permitted timing constraints to be realized between chips.

The final board had the following noteworthy characteristics:

- Board size: 15 inches by 22 inches by 0.26 inches
- PTH aspect ratio ≈ 20:1, with a plating time of less than 20 minutes
- 45 total layers per CPU panel
- 150 IC chip locations (fewer were used in final design)
- More than 30,000 plated thru holes (PTH) were used for interconnect

In 2009 this board development and manufacturing stands out as one of the major technology developments by ETA Systems.

Packaging

The key challenge for packaging the ETA Supercomputer processing unit was the cryogenic chamber for the processor. The processor (two processor units) was contained in a conventional (and quite heavy) circular cryostat with a vacuum chamber between the outside environment and the inner environment. Liquid Nitrogen entered at the bottom of the chamber, and the gaseous Nitrogen escaped near the top of the unit. The piping carrying the Nitrogen to and from the regeneration unit was also temperature protected with vacuum lines. Dan Sullivan and his design team led this admirable effort. (Unfortunately, Dan passed on a few years ago.) It was felt that a less heavy and equally efficient chamber (proposed by Carl Breske, a very innovative scientist) could be designed if time permitted, but the vacuum based design was a conservative choice made to accommodate the schedule and to familiarize the team with the challenges of Cryogenics. The compressor unit was a conventional Liquid Nitrogen system (very large and bulky) used for generation of Liquid Nitrogen for the commercial market. The system was not pretty. Marketing, led by Bobby Robertson (also now deceased), prohibited the engineers from showing it to prospective customers, fearful that it would scare them away.

Thought was given to eliminating the need to regenerate the Nitrogen in a closed system and instead purchasing Liquid Nitrogen, readily available in tanks, and having the tanks periodically refilled, as is done in the IC and other industries using Liquid Nitrogen. This was discarded for the initial design since several customer sites could not easily accommodate external access to Liquid Nitrogen tanks. It was to be an option for future systems and for those customers that could easily accommodate it.

The final design was then a closed, recycled Liquid Nitrogen system with the compressor located remotely, much like the Freon compressors that many Supercomputer customers were already accommodating.

The design challenge was at the surface (which looked much like a two slice toaster) where the processing boards were inserted. This seal had to accommodate the connecting transmission to the external, room temperature memory and I/O subsystems. A printed circuit board was designed to connect the processor to the outside world. Heaters were applied to the surface to prevent icing at the cryostat surface. Across a separation of only a few short inches, the memory operated at 300 degrees Kelvin and the CPU at 77 degrees Kelvin. There were a few “frosty” events in this development cycle!

The third challenge was to provide reliable soldering of the circuitry to the board amidst the severe temperature difference that the solder joints would be subjected to (greater than 250 degrees) during the cool down and warm up cycles. Studies at the National Bureau of Standards provided input that the temperature cycle should be profiled in a precise sequence as the board was cooled and heated. In addition, care was taken not to remove the board before it had been heated to room temperature, since condensation would otherwise occur. The result was a 20-minute cycle to remove or insert the board, with a specifically prescribed sequence of temperature lowering and rising for both cycles.

At the time of the unfortunate termination of ETA Systems, a more refined, lower cost and lower weight design as stated earlier was on the drawing boards. Although the cryostat and associated cooling was costly, an analysis clearly showed that for the performance resulting from the design, the cost was less than any Bipolar IC system designed at the time. Once the connector was finalized and the process and assembly designed, the system operated flawlessly.

Checkout on the manufacturing floor of the system utilized the “Self-Test” capability exhaustively so specific interconnect flaws were clearly understood prior to removing a CPU from the cryostat, thus reducing checkout time considerably as well. These designs were well done, significant and challenged laws of thermodynamics and physics to new limits.

Air Cooled System

As stated earlier in the document, an Air-cooled processor would operate considerably slower (2x slower) in a normal or “room temperature” environment. By sorting the devices for performance at incoming inspection, ETA Systems allowed a three times performance differential to be realized. Only the highest performance devices were reserved for the Cryogenic cooled system. The remaining parts were then re-sorted into two categories for room temperature; the differential would be a 4-nanosecond clock period between the two room temperature systems and 17 nanoseconds (24 to 7) for the total system product set. Sorting and using the entire distribution of Integrated Circuits gave a significant cost reduction for the entire product line. Bipolar devices, by contrast, had lower functional yield to begin with, coupled with additional loss of product due to performance yield. This was a definite cost reduction asset to the ETA System.
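The sorting scheme described above amounts to a three-way speed binning in which no part is discarded. A minimal sketch follows. The 7 ns and 24 ns clock periods come from the text, and the 20 ns figure is inferred from the 4 ns differential. The tier names, the delay measurements and both sort limits are hypothetical, since the text does not say how the cut-offs were set.

```python
CRYO_CLOCK_NS = 7                             # cryogenic system (from the text)
SLOW_ROOM_CLOCK_NS = 24                       # slowest room temperature system
FAST_ROOM_CLOCK_NS = SLOW_ROOM_CLOCK_NS - 4   # inferred from the 4 ns differential


def assign_tier(measured_delay, cryo_limit, fast_room_limit):
    """Map one chip's measured delay to a product tier.

    The delay and both limits share the same arbitrary units; the limits
    are hypothetical sort thresholds, not ETA values.
    """
    if measured_delay <= cryo_limit:
        return "cryogenic"
    if measured_delay <= fast_room_limit:
        return "room-fast"
    return "room-slow"


def sort_lot(delays, cryo_limit, fast_room_limit):
    """Bin every chip in a lot; no part is rejected for speed alone."""
    bins = {"cryogenic": [], "room-fast": [], "room-slow": []}
    for delay in delays:
        bins[assign_tier(delay, cryo_limit, fast_room_limit)].append(delay)
    return bins


if __name__ == "__main__":
    lot = [0.92, 1.00, 1.07, 1.15, 1.21, 1.30]   # made-up normalized delays
    for tier, parts in sort_lot(lot, 1.00, 1.15).items():
        print(tier, len(parts))
    print("clock spread across product set:",
          SLOW_ROOM_CLOCK_NS - CRYO_CLOCK_NS, "ns")
```

Because every functional part lands in some tier, performance yield loss disappears, which is the cost advantage the paragraph contrasts with Bipolar devices.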

To cool the CPU, air was forced onto the processor chips using a plenum that was designed to cover each chip. Holes were designed in the plenum such that an equal operating temperature would result for each operating chip. Since the variation in power consumption was significant for several part types, custom cooling could be provided by designing the appropriate number of holes above each chip location. The plenum could then be molded for mass production of the processing unit. Large volume cooling fans were designed for the system as well. Cost was the focus for the air-cooled systems since the price tag was below $1M. Recall that the air-cooled design was identical in parts at the CPU and storage level. A single development was achieved for a wide range of products with one design team.

Storage

Stacks using three-dimensional characteristics were designed under the leadership of Brent Doyle for the memory – both static (high performance) and dynamic (high density and lower performance) memories of the ETA Systems Supercomputer. These unique designs provided for highest density and optimum performance of the standard memory devices used. Ability to upgrade to future generations of memory (more storage capacity Integrated Circuits) was built into the design as well. The design worked well and stacking became commonplace in the computer industry for future designs – eventually eliminating the chip package entirely.

The Air-cooled system was designated the Piper.

Summary

The design of the ETA Systems Supercomputer hardware had many unique features. These brief pages highlight some of them.

It would be remiss not to briefly discuss the “team” concept used to design the hardware. By having the CAD, Packaging, memory, circuit and power expertise located in close proximity and holding concise project reviews at all levels at periodic and timely phases, all were kept abreast of the progress and challenges of each other. This permitted changes to be made to the necessary designs in a timely fashion to properly accommodate the challenges and opportunities. Hardware was demonstrated on or near schedule despite the innovations required in each aspect of the design. The team was truly a “team”.

A missing link to the team was the logic design. These folks were separate and actually on another floor of the ETA Systems facility. It was strongly suggested, and accepted for future designs, that the logic team would be a part of this common organization. I later had the opportunity to lead one additional hardware development that included the logic design team (at Cray Research, not ETA Systems). It was a smoother, more effective and more thorough team. As at ETA Systems, the communications were open and included both manufacturing and software participation (the latter two were voluntary).

Clearly, communication, meaning effective communication at all levels of the organization, was key to this hardware design success.